Sensor Fusion Brings Smart Sensors to the IoT, Delivering Benefits for all Sectors

Contributed by DigiKey's European Editors

2017-01-25

Sensing has always been an important part of electronic systems, from simple positive/negative temperature coefficient devices to more sophisticated optical-based sub-systems, rotary encoders and temperature sensors.

One of the most important aspects of using sensors in industrial, automotive and medical applications has been the Analog Front End (AFE), the conversion process responsible for taking a real-world attribute and accurately reproducing it in the digital domain. Along with the sensor itself, the AFE can define the performance of the overall system.

In the consumer sector and the wider IoT, large application-specific sensors have been displaced by small, highly integrated MEMS devices, and as a result, the AFE has been replaced by a serial bus. This puts much of the design burden on the microcontroller at the heart of the system, particularly in applications that make use of multiple sensors. This is the paradigm now helping to drive the IoT, but bringing multiple sensors together in a single device presents its own unique challenges.

Driving forces

The advent of MEMS-based sensors (Micro Electro-Mechanical Systems) represented a major change in electronic system design, but it wasn’t a change that happened overnight. The technology took many years to refine and cost-optimize, but in recent years it has benefited greatly from the smartphone sector.

Some industry analysts believe the near future for MEMS is less certain than its recent past, as margins are squeezed and demand levels off somewhat. However, the continued rise of wearable technology and the inexorable growth in the IoT will likely secure MEMS technology as a leading and enabling technology for some time to come. Established markets including industrial, medical and automotive are also enthusiastic adopters of the technology, with an estimated 20 MEMS sensors per car being the average today.

In medical applications, microfluidic sensors are beginning to emerge in implantable medical devices. These kinds of applications will present manufacturers with the opportunities they need to continue investing in the technology, which will ultimately benefit other markets.

Today, the IoT is perhaps the biggest opportunity, which will see everyday items, devices and services becoming ‘smarter’ through the addition of sensors able to measure almost every conceivable parameter. These include pressure, temperature, and inertial quantities such as acceleration, orientation, location and general motion.

Capturing one of these parameters can add significant functionality to a device, but incorporating many of them results in functionality greater than the sum of its parts. It is this ‘sensor fusion’ that will help drive new applications, form part of the IoT, and subsequently help feed into the phenomenon known as Big Data. Sensor fusion is the term applied to bringing wider context to sensor input, such as combining pitch and roll with geographic location, acceleration with elapsed time, or temperature with altitude. It is, effectively, the process of inferring additional data beyond that being measured, in a way that could almost be ascribed to intelligence.
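As a rough illustration of inferring data beyond what is directly measured, the sketch below derives pitch and roll from a static accelerometer reading, and a change in velocity from acceleration combined with elapsed time. The function names, the axis convention and the use of trapezoidal integration are assumptions made for this example; they are not taken from any particular sensor or library.

```python
import math


def tilt_from_gravity(ax, ay, az):
    """Return (pitch, roll) in degrees from a static accelerometer reading in g.

    Assumes one common aerospace-style axis convention; other devices may
    define their axes and signs differently.
    """
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll


def velocity_change(accel_samples, dt):
    """Integrate acceleration samples (m/s^2), spaced dt seconds apart.

    Uses the trapezoidal rule, so at least two samples are needed to
    accumulate a non-zero result.
    """
    total = 0.0
    for a0, a1 in zip(accel_samples, accel_samples[1:]):
        total += 0.5 * (a0 + a1) * dt
    return total


# Device lying on its side: gravity appears entirely on the y axis.
pitch, roll = tilt_from_gravity(0.0, 1.0, 0.0)

# Acceleration ramps from 0 to 2 m/s^2, then holds, sampled every 0.5 s.
delta_v = velocity_change([0.0, 2.0, 2.0], dt=0.5)
```

Neither result is measured directly: the orientation comes from the direction of gravity, and the velocity comes from pairing acceleration with time.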

Adding intelligence

The apparent intelligence needed in a sensor fusion solution is inevitably achieved through software. This is commonly provided by a library running on a core capable of delivering the processing performance needed for the application. In many applications, measuring physical parameters that change relatively slowly can often be achieved by a 32-bit or even a 16-bit microcontroller.

Some manufacturers have developed fully integrated sensor hubs, such as the BHA250 from Bosch Sensortec. This innovative device integrates a three-axis accelerometer with software algorithms for motion detection, which run on the integrated programmable ‘Fuser Core’ microcontroller. On its own, the BHA250 can be used in wearable devices to detect various forms of activity, but as shown in Figure 1, this can be further extended through additional sensors connected over I2C to address the needs of other applications.

Figure 1: Bosch Sensortec’s BHA250 sensor hub offers a fully integrated accelerometer and the ability to interface to additional external sensors.

The Fuser Core processes ‘raw’ sensor data received over the I2C interface and passes the results to a host processor via a register map. In this scenario, the host processor may be running additional software, such as Bosch Sensortec’s FusionLib software, a 9-axis solution that combines measurements from an accelerometer, gyroscope and geomagnetic sensor.

This gives a good example of how sensor fusion can be used to build up a much more detailed representation of the real world in the digital domain. With more sensors being introduced all the time, this will extend beyond inertial sensors, to include environmental and optical technologies.
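To make the idea of combining inertial measurements more concrete, here is a minimal sketch of a complementary filter, a simple and widely used fusion technique. It is not the algorithm used by FusionLib or the BHA250, whose internals are not described here; the function names, the blending factor `alpha` and the sample data are all assumptions chosen for illustration. The gyroscope is trusted over short intervals, while the accelerometer-derived angle slowly corrects the drift that comes from integrating the gyroscope rate.

```python
def complementary_filter(angle, gyro_rate, accel_angle, dt, alpha=0.98):
    """Return the next angle estimate in degrees.

    angle       -- previous fused estimate (degrees)
    gyro_rate   -- angular rate from the gyroscope (degrees/second)
    accel_angle -- angle derived from the accelerometer (degrees)
    dt          -- time since the previous sample (seconds)
    alpha       -- weight given to the integrated gyroscope path (0..1)
    """
    gyro_estimate = angle + gyro_rate * dt
    return alpha * gyro_estimate + (1.0 - alpha) * accel_angle


def fuse(samples, dt, alpha=0.98, initial_angle=0.0):
    """Run the filter over (gyro_rate, accel_angle) pairs and return all estimates."""
    angle = initial_angle
    history = []
    for gyro_rate, accel_angle in samples:
        angle = complementary_filter(angle, gyro_rate, accel_angle, dt, alpha)
        history.append(angle)
    return history


# A stationary device tilted at 10 degrees: the gyroscope reports no rotation,
# and the accelerometer consistently reports the 10 degree tilt.
history = fuse([(0.0, 10.0)] * 500, dt=0.01)
```

With these inputs the estimate starts near zero and settles towards the accelerometer's 10 degrees. A larger `alpha` gives smoother output but slower correction; a full 9-axis solution would also bring in the magnetometer to correct heading.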

While it may be possible to address this opportunity using application-specific devices such as the BHA250, it is also likely, at least in the near term, that OEMs will turn to more general-purpose microcontrollers (MCUs) to develop sensor fusion solutions. Today’s high-performance, low-power MCUs have the added benefit of offering additional peripherals, enabling the sensor hub to become the heart of the entire system.

Optimized for sensor fusion

By their nature, general-purpose MCUs integrate peripherals that make them applicable to a wide range of uses. However, they generally have to balance price, performance, power, and peripherals in a way that ultimately limits their use to fairly specific areas. For this reason, MCUs optimized for specific end-applications are still common. This is particularly true for ultra-low-power applications and, more recently, sensor fusion. One of the most recent additions to this class of device is the Kinetis KL28Z from NXP Semiconductors.

Figure 2: Block diagram of the Kinetis KL28Z, an MCU optimized for sensor fusion applications.

It is based on the ARM® Cortex®-M0+ core, designed to offer the lowest power possible for a given performance, and lists sensor hubs among its target applications. As shown in Figure 2, it integrates a number of analog features alongside a wide range of digital interfaces, including a FlexIO module that can emulate a wide range of standard interfaces. Important for IoT applications, the device also integrates a number of security features, including crypto acceleration supporting AES/DES/3DES/MD5/SHA and true random number generation. It also offers three low-power SPI modules, three low-power UART modules, and three low-power I2C modules supporting speeds of up to 5 Mbit/s.

Software is becoming a more important part of the total solution for sensor fusion, which is why the Kinetis family is supported by the Intelligent Sensing Framework (ISF). Its core functions are shown in Figure 3. As well as supporting the Kinetis family, the framework also supports a range of Freescale sensors including accelerometers (including the FXLC95000CL family), gyroscopes, magnetometers (such as the FXOS8700) and pressure sensors, as well as analog output sensors, and the MMA955xL NXP intelligent pedometer sensor.

Figure 3: The ISF Core Services provide sensor abstraction and protocol adapters.
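The general idea of sensor abstraction combined with protocol adapters can be sketched as follows. This is not the ISF API: every class name, the register address and the scale factor below are hypothetical, and a dictionary stands in for real hardware. The point is only that sensor code talks to an abstract bus, so the same sensor class could sit behind I2C, SPI or another transport without change.

```python
import struct
from abc import ABC, abstractmethod


class BusAdapter(ABC):
    """Protocol adapter: hides how bytes reach the MCU (I2C, SPI, ...)."""

    @abstractmethod
    def read(self, device_addr: int, register: int, length: int) -> bytes:
        """Read `length` bytes starting at `register` of a device."""


class FakeI2CAdapter(BusAdapter):
    """Stand-in for real hardware, backed by canned register contents."""

    def __init__(self, memory: dict):
        self._memory = memory  # {(device_addr, register): bytes}

    def read(self, device_addr: int, register: int, length: int) -> bytes:
        return self._memory[(device_addr, register)][:length]


class Sensor(ABC):
    """Sensor abstraction: applications see samples, not registers."""

    def __init__(self, bus: BusAdapter, address: int):
        self.bus = bus
        self.address = address

    @abstractmethod
    def sample(self) -> dict:
        """Return one reading in engineering units."""


class HypotheticalAccelerometer(Sensor):
    DATA_REGISTER = 0x01  # made-up register address
    COUNTS_PER_G = 1024   # made-up scale factor

    def sample(self) -> dict:
        # Three big-endian signed 16-bit values: x, y, z.
        raw = self.bus.read(self.address, self.DATA_REGISTER, 6)
        counts = struct.unpack(">hhh", raw)
        return {axis: c / self.COUNTS_PER_G for axis, c in zip("xyz", counts)}


bus = FakeI2CAdapter({(0x1E, 0x01): struct.pack(">hhh", 0, 512, 1024)})
accel = HypotheticalAccelerometer(bus, address=0x1E)
reading = accel.sample()
```

Swapping `FakeI2CAdapter` for an adapter that drives a real bus would leave `HypotheticalAccelerometer` untouched, which is the kind of separation a sensor framework aims to provide.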

Providing connectivity

As it applies to the IoT, sensing is inherently ‘wireless’ in that the majority of sensors employed are intended to sense ambient conditions unobtrusively. This naturally lends itself to a total system solution that is also wireless. It’s no surprise that many of the IoT sensor nodes being developed will also use some form of local, personal or wide area wireless networking.

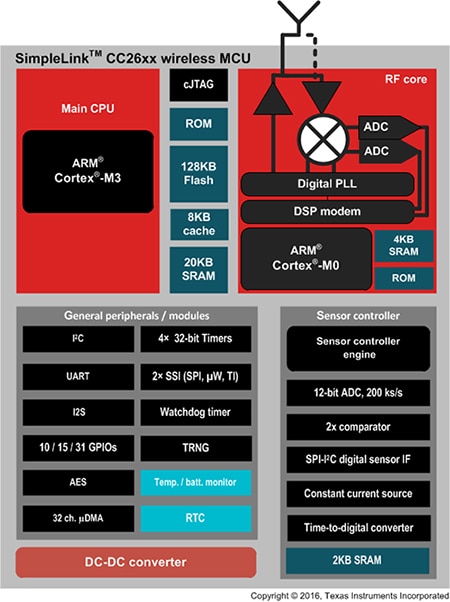

It also follows that an MCU that can provide both sensor fusion and wireless connectivity will have a place in the IoT. This is exactly the case for the CC2650 family of wireless MCUs from Texas Instruments. As well as an ARM Cortex-M3 core, the CC2650 wireless MCU integrates a 2.4 GHz RF block that allows it to communicate using Bluetooth, ZigBee, 6LoWPAN, and ZigBee RF4CE. It achieves this by embedding the IEEE 802.15.4 MAC in ROM and running it, in part, on a separate but integrated ARM Cortex-M0 core. The Bluetooth and ZigBee stacks are made available free of charge by TI.

The CC2650 is featured in the CC2650MODA module, which makes use of the MCU’s integrated ultra-low-power sensor controller. The controller is able to run autonomously, thereby preserving system power during normal operation and periods of inactivity (see Figure 4).

Tasks the sensor controller can handle on its own include monitoring analog sensors using the integrated ADC, monitoring digital sensors through GPIO, I2C and SPI, capacitive sensing (such as wakeup on approach), keyboard scanning and pulse counting. A low-power clocked comparator is included, which is able to wake the device from any state. Each of the functions in the sensor controller can be enabled or disabled selectively.

Figure 4: TI’s CC2650 wireless MCUs provide 2.4 GHz wireless connectivity and a dedicated, autonomous sensor controller.
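The benefit of an autonomous sensor controller can be illustrated with a small simulation. This is a behavioral sketch only, not TI's sensor controller programming model; the function name, the threshold and the hysteresis value are assumptions. A low-power monitor inspects every sample and raises a wake-up event only when a threshold is crossed, so the main core can stay asleep for the rest of the time.

```python
def monitor(samples, threshold, hysteresis=0):
    """Return the sample indices at which the main core would be woken.

    A wake-up fires when a sample reaches `threshold`. The monitor then
    stays disarmed until a sample falls below `threshold - hysteresis`,
    so a value hovering around the threshold does not cause repeated wake-ups.
    """
    wake_events = []
    armed = True
    for index, value in enumerate(samples):
        if armed and value >= threshold:
            wake_events.append(index)
            armed = False
        elif not armed and value < threshold - hysteresis:
            armed = True
    return wake_events


# Simulated ADC readings: two separate excursions above the threshold.
adc_samples = [1, 2, 5, 6, 4, 2, 7]
wake_events = monitor(adc_samples, threshold=5, hysteresis=2)
```

In this run the main core would be woken twice for seven samples; with slowly changing signals the ratio of wake-ups to samples would typically be far lower, which is where the power saving comes from.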

Figure 5: The CC2650MODA module.

Conclusion

Sensors are emerging that will provide greater detail about our environment and the things in it. When combined with capable MCUs these sensors can become ‘smarter’, and when multiple smart sensors are brought together in a single application they become greater than the sum of their parts.

Sensor fusion will bring smart sensors to the IoT, which in turn will deliver Big Data to the infrastructure. This will fundamentally change the way we make, grow, transport, and consume just about everything.

Semiconductor manufacturers are stepping up to the challenge this presents by developing optimally balanced MCUs that deliver the right mix of performance and low-power operation. Sensor manufacturers continue to push the physical boundaries of what is possible, which will ensure that sensor fusion continues to evolve, enabling applications that are as yet unconsidered. Every sector will benefit from this fundamental change in the way we interface to our world.

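Disclaimer: The opinions, beliefs, and viewpoints expressed by the various authors and/or forum participants on this website do not necessarily reflect the opinions, beliefs, and viewpoints of DigiKey or official policies of DigiKey.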