Use FPGAs to Build High-Performance Embedded Vision Applications with Machine Learning

Contributed By DigiKey's North American Editors

2018-09-06

The rapid growth of available data from cameras and other devices has driven the use of machine learning to extract more useful information from images in automotive, security, and other applications. Specialized devices promise high-performance machine learning (ML) inference in embedded vision applications. Yet, many of these devices are in the very early stages of development as their designers work to find the most effective algorithms even as artificial intelligence (AI) researchers rapidly evolve new methods.

Right now, developers use available FPGA-based platforms for ML to create embedded vision systems able to meet increased performance requirements. At the same time, they can retain the flexibility needed to keep pace with advances in machine learning.

This article will describe the requirements of ML processing and explain why FPGAs solve many of the performance issues. It will then introduce suitable FPGA-based ML platforms and show how to use them.

Machine learning algorithms and inference engines

Among ML algorithms, convolutional neural networks (CNNs) have become the preferred solution for image classification. Their ability to achieve high accuracy rates on image recognition has propelled their use in a wide range of applications across platforms as varied as smartphones, security systems, and automotive driver assist systems. A type of deep neural network (DNN), CNNs use a neural network architecture comprising specialized layers that extract features from an image and use those features to classify images during training on labeled images (see “Get Started with Machine Learning Using Readily Available Hardware and Software”).

CNN developers typically perform training on high-performance systems or cloud platforms, using graphics processing units (GPUs) to speed the massive number of matrix calculations required to train models on labeled image data sets often numbering in the millions. After training, the trained model is used in applications for inference, classifying new images or frames from video streams. Deployed for inference, a trained model still needs to perform those same matrix calculations, but because the input volume is so much smaller, developers can use CNNs for modest machine learning applications running on general purpose hardware (see "Build a Machine Learning Application with a Raspberry Pi").

For many applications, however, general purpose platforms lack the compute resources needed to achieve both high accuracy and high throughput in CNN inference. Optimization techniques and alternative CNN architectures such as MobileNet or SqueezeNet help reduce platform requirements, but typically at a cost in accuracy and inference latency that can conflict with application requirements.

At the same time, the rapidly evolving algorithm landscape complicates efforts to design machine learning ICs that are sufficiently specialized to accelerate inference and yet sufficiently generalized to support new algorithms. FPGAs have for years served this specific role, providing a combination of performance and flexibility needed to accelerate critical algorithms when general purpose processors are insufficient or specialized devices are unavailable.

FPGAs as machine learning platforms

For machine learning, GPUs remain the benchmark, one that earlier FPGAs simply could not approach. More recent devices such as the Intel Arria 10 GX FPGA and Lattice Semiconductor ECP5 FPGA have significantly narrowed the gap between advanced FPGAs and GPUs. For some DNN architectures using compact integer data types, this class of FPGA can deliver even greater performance per watt than a mainstream GPU.

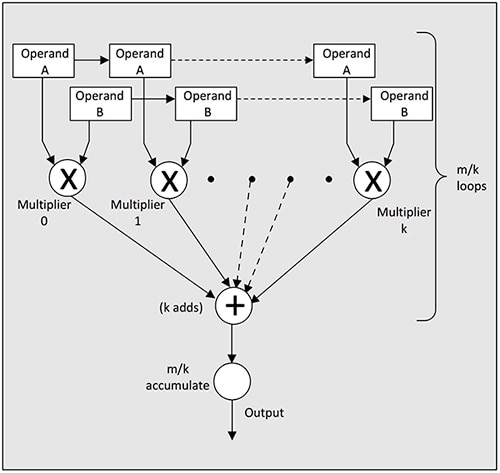

Advanced FPGAs achieve high performance on general matrix multiply (GEMM) operations thanks to their combination of embedded memory and digital signal processing (DSP) resources. Their ability to place embedded memory close to the calculation engines reduces the CPU memory bottleneck that can restrict performance of machine learning algorithms on general purpose processors. In turn, the embedded DSP calculation engines on FPGAs provide a greater number of parallel multiplier resources than available even on typical DSP devices (Figure 1). FPGA vendors take full advantage of these characteristics in delivering FPGA development platforms specifically for machine learning.

Figure 1: Advanced FPGAs such as the Lattice Semiconductor ECP5 provide the combination of parallel processing resources and embedded memory needed to achieve high-performance inference. (Image source: Lattice Semiconductor)
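To make the GEMM workload concrete, the following is a minimal software sketch of a matrix multiply using compact 8-bit integer inputs with 32-bit accumulation. It is an illustration only, not vendor code: the function name `gemm_int8` and the row-major layout are assumptions made for this example. On an FPGA, the multiplications in the inner loop are the operations that can be spread across many DSP multipliers at once.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Multiply an M x K int8 matrix A by a K x N int8 matrix B (both row-major).
// Each output element is accumulated in 32 bits, which leaves ample headroom
// for the sums of 8-bit products at typical layer sizes.
std::vector<std::int32_t> gemm_int8(const std::vector<std::int8_t>& a,
                                    const std::vector<std::int8_t>& b,
                                    std::size_t m, std::size_t k, std::size_t n) {
    assert(a.size() == m * k && b.size() == k * n);
    std::vector<std::int32_t> c(m * n, 0);
    for (std::size_t i = 0; i < m; ++i) {
        for (std::size_t j = 0; j < n; ++j) {
            std::int32_t acc = 0;
            // The K products below do not depend on one another, so parallel
            // hardware can compute them simultaneously and then sum them.
            for (std::size_t p = 0; p < k; ++p) {
                acc += static_cast<std::int32_t>(a[i * k + p]) *
                       static_cast<std::int32_t>(b[p * n + j]);
            }
            c[i * n + j] = acc;
        }
    }
    return c;
}
```

Calling `gemm_int8(a, b, 2, 2, 2)` on two 2 x 2 matrices returns the four products in row-major order.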

For example, Intel's recently available OPENVINO™ with FPGA support extends that platform's ability to deploy inference models to different device types including GPUs, CPUs, and FPGAs. Here, developers work with Intel's deep learning inference engine workflow, which combines Intel's Deep Learning Deployment Toolkit and Intel Computer Vision software development kit (SDK) provided in the Intel OPENVINO toolkit. Developers use the SDK's application programming interface (API) to create a model that can be targeted to those different hardware platforms using Intel's Model Optimizer.

Designed to work with the Intel DK-DEV-10AX115S-A Arria 10 GX FPGA development kit, the Deep Learning Deployment Toolkit lets developers import trained models from leading ML frameworks, including Caffe and TensorFlow (Figure 2). Within the toolkit, the Model Optimizer and Inference Engine handle model conversion and deployment, respectively, on a target platform such as the Arria 10 GX FPGA development kit or a custom design using an Arria 10 GX FPGA device.

Figure 2: The Intel OPENVINO toolkit with FPGA support provides a full tool chain required to deploy models trained on Caffe, TensorFlow, and other frameworks on its Arria 10 GX FPGA development kit or custom designs built around the Arria 10 GX FPGA. (Image source: Intel)

To migrate a pretrained model, developers use the Python-based Model Optimizer to generate an intermediate representation (IR), which is contained in an xml file that provides the network topology and a bin file that provides the model parameters as binary values. Besides producing the IR, the Model Optimizer performs a critical function in removing layers that are required in the model for training but serve no purpose for inference. In addition, the tool merges layers that each provide separate math operations into a single combined layer when possible.

This network pruning and merging yields a more compact model, which in turn provides faster inference and reduced memory requirements on the target platform.
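One widely used example of this kind of layer merging is folding a batch normalization layer into the linear layer that precedes it, so that two math operations become one. The sketch below illustrates the arithmetic only and is not taken from the Model Optimizer. It assumes one weight and one bias per channel for simplicity (in a real convolution, every weight in a channel's kernel is scaled by the same factor), and the names `BatchNormParams` and `fold_batch_norm` are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Batch normalization parameters, one value per output channel.
struct BatchNormParams {
    std::vector<float> gamma, beta, mean, variance;
    float epsilon;
};

// Given a per-channel linear layer y = w * x + b followed by
//   z = gamma * (y - mean) / sqrt(variance + epsilon) + beta,
// rewrite w and b in place so that z = w * x + b with no separate
// normalization step.
void fold_batch_norm(std::vector<float>& w, std::vector<float>& b,
                     const BatchNormParams& bn) {
    assert(w.size() == b.size());
    assert(bn.gamma.size() == w.size() && bn.beta.size() == w.size());
    assert(bn.mean.size() == w.size() && bn.variance.size() == w.size());
    for (std::size_t c = 0; c < w.size(); ++c) {
        const float scale = bn.gamma[c] / std::sqrt(bn.variance[c] + bn.epsilon);
        w[c] *= scale;
        b[c] = (b[c] - bn.mean[c]) * scale + bn.beta[c];
    }
}
```

After folding, the normalization layer can be dropped from the inference graph, which saves both the stored parameters and the extra pass over the data.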

The Intel Inference Engine is a C++ library that provides a set of C++ classes common across the supported target hardware platforms to enable inference on each platform. For their inference application, developers use classes such as CNNNetReader to read the CNN topology contained in the xml file (ReadNetwork) and the model parameters contained in the bin file (ReadWeights). After model loading finishes, a call to class method Infer() performs a blocking inference, while a call to class method StartAsync() performs inference asynchronously using a wait or completion routine to handle the results when inference finishes.

Intel demonstrates the complete workflow and detailed Inference Engine API calls in a number of sample applications available in the OPENVINO environment. For example, a security barrier camera sample application illustrates use of a pipeline of inference models to first identify a vehicle bounding box (Figure 3). The next model in the pipeline examines the contents of that bounding box to identify vehicle attributes including vehicle class, color and license plate location.

Figure 3: Intel's security barrier camera sample application demonstrates use of an inference pipeline to identify a vehicle (green bounding box), identify vehicle attributes including color, type, and license plate location (red box), and finally identify license plate characters (red text). (Image source: Intel Corp.)

The final model in the pipeline uses those vehicle attributes to extract the characters from the license plate. To use the model for inference, the sample code shows use of the Inference Engine C++ library to create an object (LPR), which is an instance of a structure called LPRDetection. This structure uses Inference Engine API class objects to read the model (CNNNetReader) and validate its inputs and outputs (Listing 1).

    CNNNetwork read() override {
        std::cout << "[ INFO ] Loading network files for Licence Plate Recognition (LPR)" << std::endl;
        CNNNetReader netReader;
        /** Read network model **/
        netReader.ReadNetwork(FLAGS_m_lpr);
        std::cout << "[ INFO ] Batch size is forced to 1 for LPR Network" << std::endl;
        netReader.getNetwork().setBatchSize(1);
        /** Extract model name and load its weights **/
        std::string binFileName = fileNameNoExt(FLAGS_m_lpr) + ".bin";
        netReader.ReadWeights(binFileName);

        /** LPR network should have 2 inputs (and second is just a stub) and one output **/
        // ---------------------------Check inputs
        std::cout << "[ INFO ] Checking LPR Network inputs" << std::endl;
        InputsDataMap inputInfo(netReader.getNetwork().getInputsInfo());
        if (inputInfo.size() != 2) {
            throw std::logic_error("LPR should have 2 inputs");
        }
        InputInfo::Ptr& inputInfoFirst = inputInfo.begin()->second;
        inputInfoFirst->setInputPrecision(Precision::U8);
        inputInfoFirst->getInputData()->setLayout(Layout::NCHW);
        inputImageName = inputInfo.begin()->first;
        auto sequenceInput = (++inputInfo.begin());
        inputSeqName = sequenceInput->first;
        if (sequenceInput->second->getTensorDesc().getDims()[0] != maxSequenceSizePerPlate) {
            throw std::logic_error("LPR post-processing assumes certain maximum sequences");
        }

        // ---------------------------Check outputs
        std::cout << "[ INFO ] Checking LPR Network outputs" << std::endl;
        OutputsDataMap outputInfo(netReader.getNetwork().getOutputsInfo());
        if (outputInfo.size() != 1) {
            throw std::logic_error("LPR should have 1 output");
        }
        outputName = outputInfo.begin()->first;
        std::cout << "[ INFO ] Loading LPR model to the "<< FLAGS_d_lpr << " plugin" << std::endl;
        _enabled = true;
        return netReader.getNetwork();
    }

Listing 1: Provided in the Intel security barrier camera sample application in the OPENVINO toolkit, this snippet demonstrates the design pattern for using the Intel Inference Engine C++ library API to read a model and its parameters into the inference engine. (Code source: Intel)

To perform inference, the code enqueues the cropped license plate image, calls the submitRequest method to initiate the inference cycle, and then calls the wait method to block until the result is available before displaying the identified license plate characters (Listing 2).

        if (LPR.enabled()) {  // licence plate
            // expanding a bounding box a bit, better for the license plate recognition
            result.location.x -= 5;
            result.location.y -= 5;
            result.location.width += 10;
            result.location.height += 10;
            auto clippedRect = result.location & cv::Rect(0, 0, width, height);
            cv::Mat Plate = frame(clippedRect);

            // ----------------------------Run License Plate Recognition
            LPR.enqueue(Plate);
            t0 = std::chrono::high_resolution_clock::now();
            LPR.submitRequest();
            LPR.wait();
            t1 = std::chrono::high_resolution_clock::now();
            LPRNetworktime += std::chrono::duration_cast<ms>(t1 - t0);
            LPRInferred++;

            // ----------------------------Process outputs
            cv::putText(frame,
                        LPR.GetLicencePlateText(),
                        cv::Point2f(result.location.x, result.location.y + result.location.height + 15),
                        cv::FONT_HERSHEY_COMPLEX_SMALL,
                        0.8,
                        cv::Scalar(0, 0, 255));
            if (FLAGS_r) {
                std::cout << "License Plate Recognition results:" << LPR.GetLicencePlateText() << std::endl;
            }
        }
        cv::rectangle(frame, result.location, cv::Scalar(0, 0, 255), 2);
    }

Listing 2: This snippet from the Intel security barrier camera sample application in the OPENVINO toolkit illustrates the design pattern for loading input data into a model, performing inference, and providing the results. (Code source: Intel)

Integrated embedded vision platform

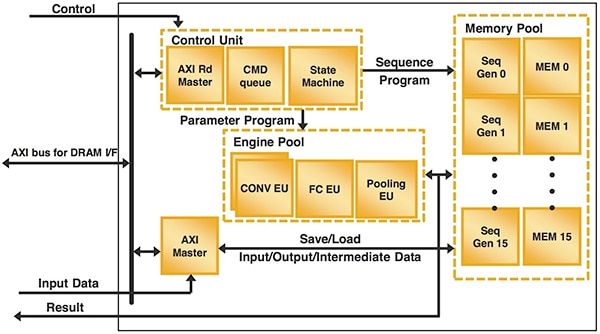

While Intel's OPENVINO approach emphasizes platform retargeting, Lattice focuses strictly on FPGA inference with its SensAI platform. Among its features, the SensAI platform provides FPGA IP for DNN architectures including CNNs and a compact architecture called a binarized neural network (BNN). For embedded vision, the SensAI CNN IP provides the framework for a complete inference engine, combining interfaces for a control subsystem, memory, input, and output with resources that implement different model layer types including convolution, batch normalization (BatchNorm), rectified linear unit (ReLU) activation, pooling, and others (Figure 4).

Figure 4: The Lattice Semiconductor CNN IP implements the complete framework for an inference system, combining specialized engines and interfaces for control, memory, input, and output. (Image source: Lattice Semiconductor)
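The appeal of a BNN for FPGA implementation is easier to see with a small example. When weights and activations are restricted to +1 or -1, a dot product can be computed with bitwise XNOR and a population count rather than multipliers. The sketch below shows that general technique in software; it is not Lattice's implementation, and the function name `binary_dot` and the bit encoding (set bit = +1, clear bit = -1) are assumptions made for illustration.

```cpp
#include <bitset>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Dot product of two vectors whose elements are each +1 or -1, packed one
// element per bit (bit set = +1, bit clear = -1). Only the low n bits of
// each word are used, and n must be between 1 and 64.
int binary_dot(std::uint64_t a, std::uint64_t b, std::size_t n) {
    assert(n >= 1 && n <= 64);
    const std::uint64_t mask = (n == 64) ? ~0ULL : ((1ULL << n) - 1);
    // XNOR sets a bit wherever the two signs agree (element product is +1).
    const std::uint64_t agree = ~(a ^ b) & mask;
    const int matches = static_cast<int>(std::bitset<64>(agree).count());
    // Matching positions contribute +1 each; the other n - matches give -1.
    return 2 * matches - static_cast<int>(n);
}
```

For example, `binary_dot(0xB, 0x6, 4)` compares the 4-element vectors encoded as 1011 and 0110, which agree in one position and disagree in three, giving a dot product of -2.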

To implement a CNN model, developers begin by configuring the CNN using Lattice's Clarity configuration tool in the Lattice Diamond design environment for ECP5 FPGAs or Radiant for other Lattice FPGA families. Here, the developer can specify the model type (CNN or BNN), number of convolution engines (eight max), and internal storage size (up to 16 Kbytes) for each layer, or blob. After the CNN is configured, developers use the design environment to generate the core as an FPGA bitstream.

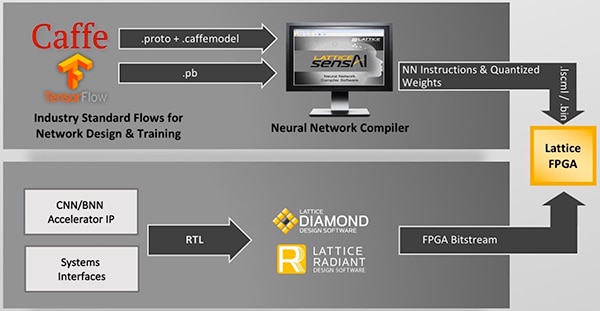

Separately, developers import trained models developed with Caffe or TensorFlow into the SensAI platform. Here, the Lattice Neural Network Compiler converts the trained Caffe or TensorFlow models into a set of files containing the neural network model parameters and execution command sequences. The SensAI platform brings the separate outputs from the design environment and from the compiler together in the FPGA to provide a final inference model (Figure 5).

Figure 5: The Lattice Semiconductor SensAI platform combines its CNN and BNN IP with its Neural Network Compiler, enabling developers to convert Caffe or TensorFlow models to run as inference engines on Lattice FPGAs. (Image source: Lattice Semiconductor)

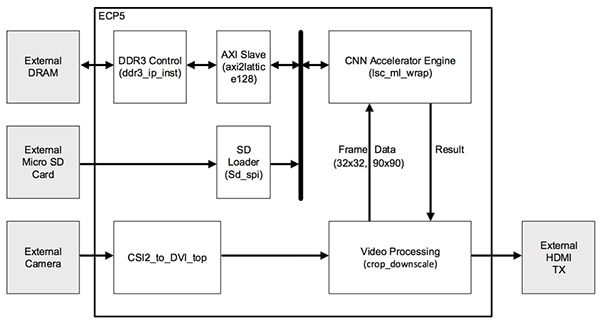

For embedded vision applications, the Lattice LF-EVDK1-EVN embedded vision development kit (EVDK) offers an ideal target platform for running CNN model inference. The EVDK provides a complete video platform in an 80 x 80 mm three board stack that includes Lattice's CrossLink video input board, a processor board with an ECP5 FPGA, and an HDMI output board. Developers can use the EVDK as the target platform for a number of sample CNN applications available from Lattice. For example, the Lattice speed-sign detection reference design works with the EVDK to illustrate application of the SensAI CNN IP in a typical automotive application (Figure 6).

Figure 6: The Lattice Semiconductor speed-sign detection reference design uses the SensAI platform and Lattice LF-EVDK1-EVN embedded vision development kit to provide a complete inference application that developers can operate immediately or explore in detail. (Image source: Lattice Semiconductor)

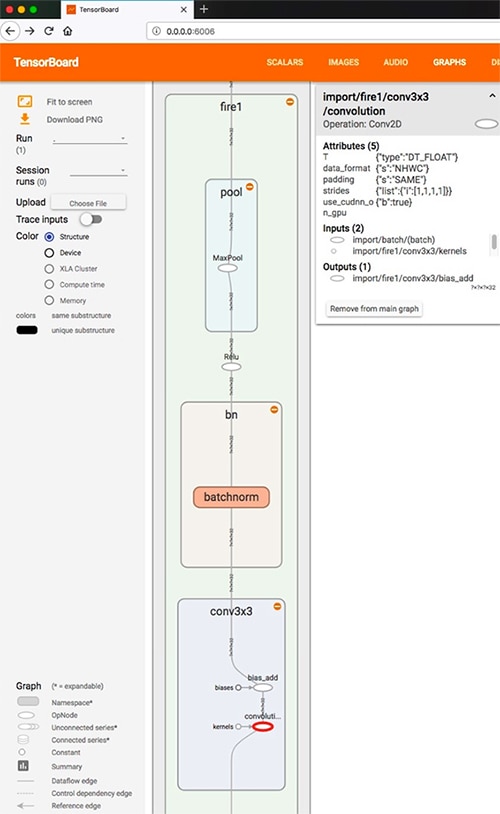

The project files for this sample application include the complete set of files starting with the models provided in Caffe caffemodel and TensorFlow pb formats. As a result, developers can explore the details of these models. For example, using the TensorFlow import_pb_to_tensorboard.py utility, developers can import the Lattice-provided pb model to examine the details of the CNN used in this sample application (Figure 7). In this case, the provided model is a sequence of four "fire" modules, each comprising:

- A Conv2D layer, which performs the 3 x 3 convolution to extract features from the input stream

- An activation layer that performs BatchNorm normalization followed by rectified linear unit (ReLU) activation

- A MaxPool pooling layer, which samples the output from the previous layer

Figure 7: The Lattice speed-sign detection sample application includes TensorFlow pb models that developers can import into TensorBoard for detailed examination. Note: Data flows upward through the layers in this diagram. (Image source: DigiKey)
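The stages in each module listed above can be sketched in a few lines of software. The example below is a simplified illustration rather than the Lattice model: it handles a single channel, omits the BatchNorm step (which can be treated as a per-channel scale and offset applied before the activation), and uses a hypothetical `FeatureMap` type and function names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Single-channel feature map stored row-major (hypothetical helper type).
struct FeatureMap {
    std::size_t height;
    std::size_t width;
    std::vector<float> data;  // height * width values

    float at(std::size_t row, std::size_t col) const {
        return data[row * width + col];
    }
};

// 3 x 3 convolution (no padding, stride 1) followed by ReLU.
// As in most ML frameworks, the kernel is applied without flipping.
FeatureMap conv3x3_relu(const FeatureMap& in, const float (&kernel)[3][3]) {
    assert(in.height >= 3 && in.width >= 3);
    assert(in.data.size() == in.height * in.width);
    FeatureMap out{in.height - 2, in.width - 2, {}};
    out.data.reserve(out.height * out.width);
    for (std::size_t r = 0; r < out.height; ++r) {
        for (std::size_t c = 0; c < out.width; ++c) {
            float acc = 0.0f;
            for (std::size_t kr = 0; kr < 3; ++kr) {
                for (std::size_t kc = 0; kc < 3; ++kc) {
                    acc += kernel[kr][kc] * in.at(r + kr, c + kc);
                }
            }
            out.data.push_back(std::max(acc, 0.0f));  // ReLU clamps negatives to zero
        }
    }
    return out;
}

// 2 x 2 max pooling with stride 2; an odd trailing row or column is dropped.
FeatureMap max_pool2x2(const FeatureMap& in) {
    assert(in.data.size() == in.height * in.width);
    FeatureMap out{in.height / 2, in.width / 2, {}};
    out.data.reserve(out.height * out.width);
    for (std::size_t r = 0; r < out.height; ++r) {
        for (std::size_t c = 0; c < out.width; ++c) {
            out.data.push_back(std::max({in.at(2 * r, 2 * c), in.at(2 * r, 2 * c + 1),
                                         in.at(2 * r + 1, 2 * c), in.at(2 * r + 1, 2 * c + 1)}));
        }
    }
    return out;
}
```

Chaining the two calls, `max_pool2x2(conv3x3_relu(input, kernel))`, mirrors the order of operations within one module: feature extraction, activation, then downsampling.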

Developers can work through the model process flow described earlier, using the SensAI platform to generate the model files. Alternatively, developers can jump directly to deployment, using the provided files. In either case, the files are loaded into the EVDK through a microSD card connected through an adaptor.

In operation, the camera on the EVDK provides a video stream to the ECP5 FPGA, where the configured CNN accelerator IP executes the command sequences to perform inference. As with any inference engine, every output channel produces a result that provides the probability that the label associated with that output channel is the correct label for the input image. In this case, the model was trained with labeled images of speed limit signs for 25, 30, 35, 40, 45, 50, 55, 60 and 65 miles per hour. Consequently, when the model detects a speed limit sign anywhere in its input field, it displays the probability that the detected sign corresponds to each of those speed limits (Figure 8).

Figure 8: Running on the Lattice EVDK, the Lattice speed-sign detection demo performs inference on the video input stream, generating output values that indicate the likelihood that the captured image corresponds to the label associated with that particular output. In this case it shows that the speed limit sign most likely indicates 25 mph. (Image source: Lattice Semiconductor)
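An application consuming these outputs typically selects the channel with the highest probability. The following sketch shows one way to do that for the nine speed limit labels; it is not part of the Lattice demo, the ordering of output channels is an assumption, and the names `kSpeedLabels`, `Detection`, and `most_likely_speed` are hypothetical.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <iterator>

// Speed limits (mph) used to train the model, one per output channel.
// The channel order shown here is assumed for illustration.
constexpr std::array<int, 9> kSpeedLabels{25, 30, 35, 40, 45, 50, 55, 60, 65};

struct Detection {
    int speed_mph;
    float probability;
};

// Return the label whose output channel reports the highest probability.
Detection most_likely_speed(const std::array<float, 9>& probabilities) {
    const auto best = std::max_element(probabilities.begin(), probabilities.end());
    assert(*best >= 0.0f && *best <= 1.0f);  // inputs are expected to be probabilities
    const std::size_t index =
        static_cast<std::size_t>(std::distance(probabilities.begin(), best));
    return Detection{kSpeedLabels[index], *best};
}
```

A production design would likely also apply a minimum probability threshold so that frames containing no sign are not reported as a detection.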

Conclusion

Developers looking to employ machine learning in embedded vision applications have been limited in their ability to achieve required performance levels using available hardware platforms. With the emergence of high-performance FPGAs, however, developers can create inference engines able to approach the performance of GPUs. Using machine learning FPGA platforms designed for embedded vision, developers can focus on their specific requirements, using standard machine learning frameworks to train models and relying on the FPGA platform to deliver high-performance inference.

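Disclaimer: The opinions, beliefs, and viewpoints expressed by the various authors and/or forum participants on this website do not necessarily reflect the opinions, beliefs, and viewpoints of DigiKey or official policies of DigiKey.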