How Cold is It? Measuring Cryogenic Temperatures is a Very Different World

Most general-purpose temperature measurements fall within a fairly limited range between the freezing and boiling points of water (0°C to 100°C), although there are certainly many situations which extend beyond both of these limits. Fortunately, low-cost, easy-to-use solid state sensors are available which are specified for -50°C to +125°C, and special ones are available with a wider range. Further, thermocouples, resistance temperature detectors (RTDs), and even thermistors can handle much wider ranges.

For example, the PTCSL03T091DT1E thermistor from Vishay Components is rated for -40°C (233 K) to +165°C (438 K), while the TE Connectivity Measurement Specialties R-10318-69 Type-T thermocouple covers the wider -200°C (73 K) to +350°C (623 K) range. Generally, finding a sensor for these measurements is not the problem; instead, it’s actually applying the sensor that brings challenges.
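Since the rest of this discussion moves between Celsius and kelvin, a quick sanity check of such ratings can be handy. The following is a minimal sketch; the function names are illustrative and not taken from any vendor tool.

```python
ZERO_CELSIUS_IN_KELVIN = 273.15  # offset between the Celsius and kelvin scales


def celsius_to_kelvin(temp_c: float) -> float:
    """Convert a Celsius temperature to kelvins."""
    temp_k = temp_c + ZERO_CELSIUS_IN_KELVIN
    if temp_k < 0:
        raise ValueError("temperature is below absolute zero")
    return temp_k


def kelvin_to_celsius(temp_k: float) -> float:
    """Convert a temperature in kelvins to Celsius."""
    if temp_k < 0:
        raise ValueError("temperature is below absolute zero")
    return temp_k - ZERO_CELSIUS_IN_KELVIN


if __name__ == "__main__":
    # Rating limits of the kind quoted for the thermistor and thermocouple
    for temp_c in (-200.0, -40.0, 165.0, 350.0):
        print(f"{temp_c:+7.1f} °C = {celsius_to_kelvin(temp_c):7.2f} K")
```

Datasheets usually round these values to the nearest kelvin, so -40°C appears as 233 K and -200°C as 73 K.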

When things get really hot, extending into thousands of degrees, the sensor options are more limited. Usually it becomes a choice between thermocouples of various types or an infrared sensing arrangement. Since the source being measured is at a high temperature, it has a lot of energy which needs to be captured by the sensor with minimal impact on that source.

But what about measuring those really, really low temperatures, those that are in the low double-digit (a few tens of K), single-digit (1 to 9 K), or even sub single-digit (<1 K) regions? There’s even research being done down at 0.01 K, and a recent article in IEEE Spectrum, “Quantum Computing: Atomic Clocks Make for Longer-Lasting Qubits,” discussed research being done below 100 nK. (How to get down that low is another fascinating story!) But how do you know exactly where you are when you’re down there? Making accurate, credible measurements of these cryogenic temperatures is a very strange world, for several reasons:

- First, while the laws of physics are obviously still in force, the materials go into major transitions and their characteristics and behaviors change radically. Sensor performance, linearity, and other critical attributes shift dramatically in the low K regions. While we’re comfortable with water turning to ice or to steam, the changes in the low K region are much harder to grasp.

- Second, the measurement approach is usually intertwined with methods being used to reach those temperatures. For example, multi-Tesla magnetic fields are often a major part of a supercooling arrangement (how and why is another story), and those fields have a major impact on the sensing arrangement and its components.

- Third, projects at deep cryogenic temperatures often involve extremely small amounts of mass; in some cases, it can be as little as a few atoms or molecules. So, you have a double problem: molecules with low energy, and only a few of them. You obviously can’t attach a sensor, and even if you could, the sensor would seriously affect the substance being measured. In many ways, it’s akin to the observer effect often associated with Heisenberg's Uncertainty Principle of quantum physics, in which the act of making a measurement affects that which is being measured.

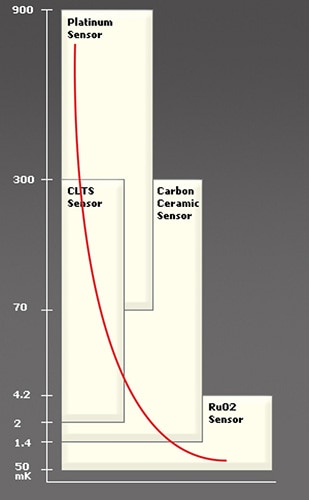

Figure 1: Various materials can be used to surprisingly low K values; note that the vertical scale is not linear. CLTS is a cryogenic linear temperature sensor, a flat flexible sensor comprising manganin and nickel foil sensing grids, RuO2 is ruthenium oxide. (Image source: ICE Oxford Ltd.)

Still, scientists and researchers need to make those measurements. They have a selection of choices depending on how low they are going and what they are measuring (solid mass, molecules in a gas-like cluster, or individual molecules), and there’s a lot of research and many practical applications near 0 K. Relatively speaking, those dealing with liquid oxygen (90 K, −183°C) and hydrogen (20 K, −253°C) for rocket fuel have it easy, as do those working with nitrogen (77 K, −196°C). In contrast, liquid helium is much harder to assess, at about 4 K (−269°C), yet it is used to cool the magnets of MRI machines down to their superconducting regions.

The key to making temperature measurements is to always keep in mind that what we call “temperature” is really a measure of the energy of whatever is being measured. As with nearly all temperature measurements, users must first consider three specifications: what range they need to cover, what absolute accuracy they need, and with what precision (resolution). Then they need to assess the impact their measurement arrangement will have at these temperatures.
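To put the "temperature is energy" idea in numbers, the sketch below estimates the mean translational kinetic energy per particle, (3/2)·k_B·T, at several of the temperatures mentioned in this article. It assumes ideal-gas behavior, which is a simplification (real cryogenic samples can be far from ideal), and the function names are illustrative only.

```python
BOLTZMANN_J_PER_K = 1.380649e-23   # Boltzmann constant (exact SI value)
JOULES_PER_EV = 1.602176634e-19    # one electronvolt in joules (exact SI value)


def mean_kinetic_energy_joules(temp_k: float) -> float:
    """Mean translational kinetic energy per particle of an ideal gas: 1.5 * k_B * T."""
    if temp_k < 0:
        raise ValueError("temperature is below absolute zero")
    return 1.5 * BOLTZMANN_J_PER_K * temp_k


def joules_to_ev(energy_j: float) -> float:
    """Convert an energy in joules to electronvolts."""
    return energy_j / JOULES_PER_EV


if __name__ == "__main__":
    # Room temperature, liquid nitrogen, liquid helium, then the research regimes
    for temp_k in (300.0, 77.0, 4.0, 1.0, 0.01, 100e-9):
        energy_j = mean_kinetic_energy_joules(temp_k)
        print(f"{temp_k:10.3g} K -> {energy_j:.3e} J ({joules_to_ev(energy_j):.3e} eV)")
```

Because the energy scales linearly with temperature, a particle at 100 nK carries roughly three billion times less thermal energy than one at room temperature, which is why any sensor attached to the sample can easily swamp what it is trying to measure.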

Somewhat surprisingly, some sensors which are common at more “normal” temperatures can even work down into the high single-digit region (Figure 1). Among the options are RTDs (using platinum or rhodium-iron), germanium, and even classic carbon-based resistors. However, the intense magnetic fields of these setups can induce sensor errors of a few K. The research reality is that there is so much demand for low K sensing that these transducers are standard catalog items available from many vendors (which is fairly amazing when you think about it).
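As a point of comparison, here is a minimal sketch of how a standard industrial platinum RTD is read at "ordinary" low temperatures. It assumes a Pt100 element and the IEC 60751 Callendar-Van Dusen coefficients, neither of which comes from the sensors in Figure 1. That standard curve is only defined down to -200°C (about 73 K); below that, readings generally rely on a calibration table for the individual sensor, which this sketch does not attempt to model.

```python
R0_OHMS = 100.0      # assumed Pt100: 100 ohms at 0 °C
COEFF_A = 3.9083e-3  # IEC 60751 Callendar-Van Dusen coefficients
COEFF_B = -5.775e-7
COEFF_C = -4.183e-12  # applies only below 0 °C

T_MIN_C, T_MAX_C = -200.0, 850.0  # validity range of the standard curve


def pt100_resistance_ohms(temp_c: float) -> float:
    """Resistance of an ideal Pt100 at temp_c (Callendar-Van Dusen equation)."""
    if not T_MIN_C <= temp_c <= T_MAX_C:
        raise ValueError("outside the range covered by the standard curve")
    ratio = 1.0 + COEFF_A * temp_c + COEFF_B * temp_c**2
    if temp_c < 0.0:
        ratio += COEFF_C * (temp_c - 100.0) * temp_c**3
    return R0_OHMS * ratio


def pt100_temperature_c(resistance_ohms: float) -> float:
    """Invert the curve by bisection; resistance rises monotonically with temperature."""
    low, high = T_MIN_C, T_MAX_C
    if not pt100_resistance_ohms(low) <= resistance_ohms <= pt100_resistance_ohms(high):
        raise ValueError("resistance is outside the range of the standard curve")
    for _ in range(60):  # 60 halvings is far finer than any practical resolution
        mid = 0.5 * (low + high)
        if pt100_resistance_ohms(mid) < resistance_ohms:
            low = mid
        else:
            high = mid
    return 0.5 * (low + high)


if __name__ == "__main__":
    for temp_c in (-200.0, -196.0, -183.0, 0.0, 100.0):
        print(f"{temp_c:+7.1f} °C -> {pt100_resistance_ohms(temp_c):7.2f} ohms")
```

Bisection is used for the inverse rather than a closed-form solution because the sub-zero branch of the equation is a quartic; it is slower but short and robust for a sketch like this.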

More complex options include use of Brillouin scattering in optical fibers and other sophisticated optical techniques. Yet even the “humble” capacitor can be used in a bridge arrangement with its physical dimensions and shape – and thus its capacitance – changing in a known relationship as a carefully modeled function of temperature.

But these techniques will not work for gauging the temperature of small numbers of molecules. In those situations, some very esoteric approaches are needed. One arrangement sweeps an intense magnetic field with a precision gradient around the trapped target, then observes how its molecules distribute along that field, indicating their energy, and thus, temperature. Another scheme uses lasers to push the molecules, where the amount of laser energy versus resultant motion indicates the target energy. These and other complex methods are not only difficult to set up, but they require numerous corrections and compensations for second and third order subtleties of their physics, as well as system imperfections.

So, the next time you feel the need to complain about how hard your temperature measurement scenario is, just think about those working in the low K region, down to and even below 1 K. It’s a spooky world down there, and researchers must also ask and answer the eternal instrumentation question, “How do I calibrate, confirm, and validate my readings?” That’s almost nightmare stuff!
