SLAM: How Robots Navigate Unknown Terrain

Imagine being stranded in an unfamiliar desert and having to find your way to safety. Navigating unfamiliar terrain has long been a challenge for humans and robots alike. Traditional navigation methods for a robot or autonomous vehicle require a pre-existing map, but in uncharted territory no such map can exist until the area has been traversed. This is a classic dilemma in robotics, often referred to as the "chicken and egg" problem: how can a robot navigate an unknown environment without a map, and how can it create a map without first navigating the environment?

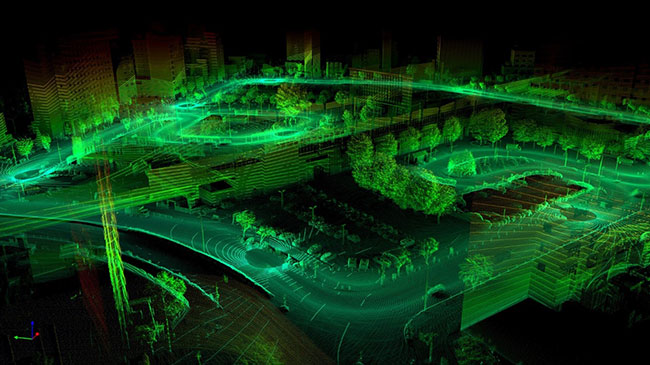

3D map created using visual SLAM. (Figure reproduced from www.flyability.com; image source: Sigmoidal.ai)

This is where Simultaneous Localization and Mapping (SLAM) comes into play. Developed by researchers such as Hugh Durrant-Whyte and John J. Leonard, SLAM is a technique that allows robots to autonomously navigate and map unknown environments in real time. Rather than relying on pre-existing maps, SLAM enables robots to create maps of their surroundings while simultaneously determining their own position within those maps.

At its core, SLAM involves two main processes: mapping and localization. Mapping refers to the creation of a spatial representation of the environment, while localization involves determining the robot's position within that map. These processes are intertwined: an error in the position estimate distorts the map, and a distorted map in turn degrades later position estimates. The robot therefore continuously updates its map based on sensor data and adjusts its position estimate accordingly.

A SLAM implementation typically involves four key steps: landmark extraction, data association, state estimation, and updates. Landmark extraction identifies distinct features or landmarks in the environment that can be used as reference points for mapping and localization. Data association matches new sensor measurements to features already in the map. State estimation estimates the robot's position and orientation based on sensor data. Finally, updates refine the map and position estimates based on new sensor measurements.
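The four steps can be sketched as a toy loop in Python. This is a minimal illustration under strong assumptions, not a production algorithm: the robot's heading is taken as known, landmark extraction is assumed to have already produced relative `(dx, dy)` offsets, and the names `slam_step`, `associate`, `GATE`, and `GAIN` are hypothetical. Real systems track uncertainty explicitly (for example with an extended Kalman filter or a pose graph) and also refine the positions of matched landmarks.

```python
import math

GATE = 1.0  # assumed association gate in metres (hypothetical value)
GAIN = 0.5  # assumed blend factor between prediction and measurement


def associate(world_point, landmarks, gate=GATE):
    """Data association: index of the nearest known landmark within the gate, or None."""
    best_index, best_dist = None, gate
    for index, (lx, ly) in enumerate(landmarks):
        dist = math.hypot(world_point[0] - lx, world_point[1] - ly)
        if dist < best_dist:
            best_index, best_dist = index, dist
    return best_index


def slam_step(pose, landmarks, odometry, observations, gain=GAIN):
    """One toy SLAM iteration; returns (new_pose, new_landmarks)."""
    # State estimation (prediction): apply the odometry displacement.
    px, py = pose[0] + odometry[0], pose[1] + odometry[1]

    landmarks = list(landmarks)
    implied_poses, unmatched = [], []
    for ox, oy in observations:  # offsets assumed to come from landmark extraction
        match = associate((px + ox, py + oy), landmarks)
        if match is None:
            unmatched.append((ox, oy))
        else:
            lx, ly = landmarks[match]
            # Pose the robot would need for this observation to agree with the map.
            implied_poses.append((lx - ox, ly - oy))

    # Update (pose): pull the prediction toward the average implied pose.
    if implied_poses:
        cx = sum(p[0] for p in implied_poses) / len(implied_poses)
        cy = sum(p[1] for p in implied_poses) / len(implied_poses)
        px += gain * (cx - px)
        py += gain * (cy - py)

    # Update (map): unmatched observations become new landmarks.
    for ox, oy in unmatched:
        landmarks.append((px + ox, py + oy))

    return (px, py), landmarks


if __name__ == "__main__":
    # Odometry overshoots (1.2 m reported); a known landmark pulls the estimate back.
    new_pose, new_map = slam_step((0.0, 0.0), [(5.0, 0.0)], (1.2, 0.0),
                                  [(4.0, 0.0), (0.0, 3.0)])
    print(new_pose, new_map)
```

In this example the first observation matches the known landmark at (5, 0) and pulls the pose estimate from 1.2 m back toward 1.0 m, landing at about 1.1 m with the assumed gain of 0.5. The second observation matches nothing and is added to the map as a new landmark.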

One of the key factors that determine the effectiveness of SLAM is the type of sensors used. Different sensors offer varying levels of accuracy and information, which can impact the quality of the resulting map and localization estimates. For example, Visual SLAM (vSLAM) uses cameras as the primary sensor, allowing the robot to extract visual information from its surroundings. This visual information can include features such as edges, corners, and textures, which can be used as landmarks for mapping and localization. Cameras also provide rich semantic information that can aid in tasks such as object detection and recognition. LiDAR-based SLAM, on the other hand, uses LiDAR sensors (such as the SLAMTEC SEN-15870 from SparkFun), which emit laser beams to measure distances to objects in the environment. LiDAR sensors offer high accuracy and precision, making them well-suited for mapping environments with complex geometry. However, LiDAR sensors can be expensive, and the point clouds they produce can be computationally intensive to process, which can limit their applicability in certain scenarios.
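To make the LiDAR description concrete, the sketch below converts one 2D scan (a list of measured distances at evenly spaced beam angles) into Cartesian points in the sensor frame, which is the usual first step before feature extraction or scan matching. The scan layout and the parameter names `angle_min`, `angle_increment`, and `max_range` are assumptions for illustration and are not taken from any particular sensor's driver.

```python
import math


def scan_to_points(ranges, angle_min, angle_increment, max_range):
    """Convert a 2D range scan to (x, y) points in the sensor frame.

    ranges          -- distances in metres, one per beam
    angle_min       -- angle of the first beam in radians
    angle_increment -- angular step between beams in radians
    max_range       -- readings beyond this are treated as invalid
    """
    points = []
    for i, r in enumerate(ranges):
        # Skip dropouts: zero, negative, NaN, infinite, or out-of-range readings.
        if not (0.0 < r <= max_range):
            continue
        angle = angle_min + i * angle_increment
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


if __name__ == "__main__":
    # Three beams at 0, 90, and 180 degrees; the middle one returned no echo.
    print(scan_to_points([1.0, 0.0, 2.0], 0.0, math.pi / 2, 10.0))
```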

There are several subcategories for vSLAM based on the type of camera used. These include Monocular SLAM, Stereo SLAM, and RGB-D SLAM. Monocular SLAM uses a single camera to estimate the robot's motion and the structure of the environment. Stereo SLAM utilizes a stereo camera setup, which consists of two cameras placed at a known baseline distance from each other. This setup allows for the triangulation of visual features, resulting in improved depth estimation and mapping accuracy. Finally, RGB-D SLAM combines a traditional RGB camera with a depth sensor, such as a Microsoft Kinect or Intel RealSense camera. This additional depth information enables more accurate 3D mapping and localization.
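The stereo triangulation mentioned above reduces to one formula when the two cameras are rectified (image rows aligned) and follow a pinhole model: depth Z = f * B / d, where f is the focal length in pixels, B is the baseline in metres, and d is the disparity in pixels between the left and right images. The sketch below assumes that setup; the function name and the example numbers are illustrative only.

```python
def stereo_depth(focal_px, baseline_m, disparity_px):
    """Depth in metres of a feature seen by a rectified stereo pair.

    focal_px     -- focal length in pixels
    baseline_m   -- distance between the two camera centres in metres
    disparity_px -- horizontal pixel shift of the feature between the two images
    """
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity; negative is a bad match.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Same camera, two features: the smaller disparity is the more distant one.
    print(stereo_depth(700.0, 0.12, 42.0))
    print(stereo_depth(700.0, 0.12, 4.2))
```

Because depth is inversely proportional to disparity, a fixed one-pixel matching error costs far more accuracy on distant features than on near ones, which is one reason a wider baseline helps at long range.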

Each subcategory of vSLAM has its own set of advantages and limitations, depending on factors such as cost, computational complexity, and environmental conditions. Monocular SLAM, for example, is widely used due to its simplicity and low cost. However, it suffers from scale ambiguity: from images alone it cannot determine the absolute scale of the environment. Stereo SLAM addresses this issue by using the known baseline between its two cameras to triangulate visual features and recover depth and scale. RGB-D SLAM, meanwhile, measures depth directly for each pixel, which can yield dense and detailed maps, although consumer depth sensors typically have limited range and can struggle in bright sunlight.
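The scale ambiguity of monocular SLAM can be shown with a few lines of arithmetic. In the sketch below, a pinhole camera with no rotation (a simplifying assumption) observes a 3D point. Scaling both the scene and the camera's position by the same factor produces exactly the same pixel coordinates, so the images alone cannot reveal which scale is the true one. The helper names `project` and `scale` and the focal length are hypothetical.

```python
def project(point, camera_position, focal_px=500.0):
    """Pinhole projection of a 3D point for an unrotated camera at camera_position."""
    x = point[0] - camera_position[0]
    y = point[1] - camera_position[1]
    z = point[2] - camera_position[2]
    return (focal_px * x / z, focal_px * y / z)


def scale(vector, factor):
    """Multiply every component of a 3D vector by factor."""
    return tuple(factor * component for component in vector)


if __name__ == "__main__":
    point = (1.0, 2.0, 10.0)   # a landmark in metres
    camera = (0.5, 0.0, 0.0)   # camera after moving 0.5 m sideways

    original = project(point, camera)
    # A world three times larger, with a camera motion three times longer.
    tripled = project(scale(point, 3.0), scale(camera, 3.0))
    print(original, tripled)   # the two pixel coordinates coincide
```

A stereo rig breaks this tie because its baseline is a known physical length, and an RGB-D camera breaks it by measuring depth directly.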

SLAM has numerous real-world applications across various industries. In robotics, SLAM enables robots to autonomously navigate and explore dynamic environments, such as warehouses, factories, and disaster zones. In autonomous vehicles, SLAM is used to create high-definition maps of roadways and to localize the vehicle within those maps. SLAM also has applications in augmented reality (AR) and virtual reality (VR), where it is used to track the device's position so that virtual objects can be overlaid convincingly onto real-world environments.

Despite its many advantages, SLAM is not without its drawbacks. One of the main challenges of SLAM is the computational cost of processing sensor data and updating the map in real time. This can be particularly challenging in large environments that generate large amounts of data, or on platforms with limited computational resources. Additionally, SLAM relies heavily on the availability of distinct features and landmarks in the environment. In environments with uniform or featureless terrain, such as long corridors or blank walls, SLAM may struggle to create accurate maps or localize the robot effectively.

In conclusion, Simultaneous Localization and Mapping (SLAM) is a powerful technique that enables robots to autonomously navigate and map unknown environments in real time. By combining mapping and localization into a single process, SLAM allows robots to explore and understand their surroundings without prior knowledge or pre-existing maps. While SLAM comes with its own set of challenges and limitations, its applications are vast and varied, spanning industries such as robotics, autonomous vehicles, AR, and VR. As technology continues to advance, SLAM is playing an increasingly important role in shaping the future of robotics and automation.
