Machine Learning Novices and Experts Have a Lot to Like About the STM32 Ecosystem

Machine learning, particularly TinyML, has the potential to revolutionize the way embedded systems are designed and built. Traditionally, these systems run procedural algorithms that developers hand-code from their experience with the system. Machine learning offers a different approach in which the system's algorithms are derived from real-world observations and data. If the data in the environment changes, the machine learning model can be retrained on the new data, whereas a hand-coded solution would require a rewrite.

This post explores the machine learning platforms and tools for embedded systems supported in the STMicroelectronics STM32 microcontroller family.

Machine learning support within the STM32 microcontroller family

Machine learning can be a powerful tool for embedded systems developers. However, developers often believe that machine learning algorithms require too much processing power or that the algorithms are so large that they won't fit within a typical microcontroller. In reality, the microcontroller selected for use will depend more on what you are trying to do with machine learning than on how any specific microcontroller supports it.

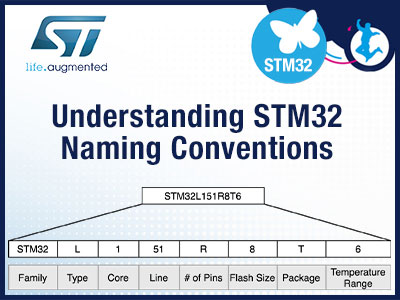

For example, Figure 1 shows a range of STM32 microcontroller families that support machine learning. These parts range from the STM32F0, which runs at 48 megahertz (MHz) and has at most 256 kilobytes (Kbytes) of flash and 32 Kbytes of RAM, to the STM32F7, which runs at 216 MHz and has at most 2 megabytes (Mbytes) of flash and 512 Kbytes of RAM.

Figure 1: The STM32 microcontrollers that support machine learning using STM32Cube.AI and NanoEdge development tools. (Image source: STMicroelectronics)
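To make the sizing question concrete, here is a minimal sketch in C of the arithmetic involved in checking whether a model fits a given part. The flash and RAM limits are the maximums quoted above for the STM32F0 and STM32F7; the `footprint_t` structure, its field names, and the `fits()` helper are hypothetical and are not part of any STMicroelectronics tool.

```c
#include <stdbool.h>
#include <stdint.h>

/* Maximum memory sizes quoted in the text for the two ends of the range. */
typedef struct {
    const char *name;
    uint32_t flash_bytes;
    uint32_t ram_bytes;
} mcu_limits_t;

const mcu_limits_t STM32F0_MAX = { "STM32F0", 256u * 1024u, 32u * 1024u };
const mcu_limits_t STM32F7_MAX = { "STM32F7", 2048u * 1024u, 512u * 1024u };

/* Hypothetical footprint of a model plus the rest of the firmware. */
typedef struct {
    uint32_t weights_bytes;     /* model parameters, assumed stored in flash */
    uint32_t activations_bytes; /* working buffers, assumed held in RAM */
    uint32_t app_flash_bytes;   /* application code and constants */
    uint32_t app_ram_bytes;     /* application stack, heap, and globals */
} footprint_t;

/* Returns true when both the flash and RAM totals fit within the part. */
bool fits(const mcu_limits_t *mcu, const footprint_t *fp)
{
    uint64_t flash = (uint64_t)fp->weights_bytes + fp->app_flash_bytes;
    uint64_t ram = (uint64_t)fp->activations_bytes + fp->app_ram_bytes;
    return flash <= mcu->flash_bytes && ram <= mcu->ram_bytes;
}
```

In practice the real numbers would come from the model analysis step of the toolchain rather than from hand estimates, but the comparison itself is this simple: what the application needs, not the part's "machine learning support," decides which device is suitable.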

As you can see, quite a few microcontrollers can support machine learning. The real challenge is that a machine learning platform must serve a wide range of developers. Few embedded software teams have a machine learning expert on staff, so embedded software developers are often forced to learn machine learning, which lies outside their traditional skillset. What is needed, therefore, is a platform that supports teams both with and without machine learning experts. The toolchains in the STM32 machine learning ecosystem help with this problem.

Help for embedded teams without machine learning experts

Designing and training machine learning algorithms can seem like a daunting task. Developers need to acquire data, design a model, train the model, and then ensure the model fits well enough to be optimized and deployed to an embedded system.

Traditionally, machine learning models are created using TensorFlow Lite, PyTorch, MATLAB, or other tools. These tools are often well outside an embedded software developer's comfort zone or experience. Coming up to speed on them and getting accurate results is both time-consuming and costly.

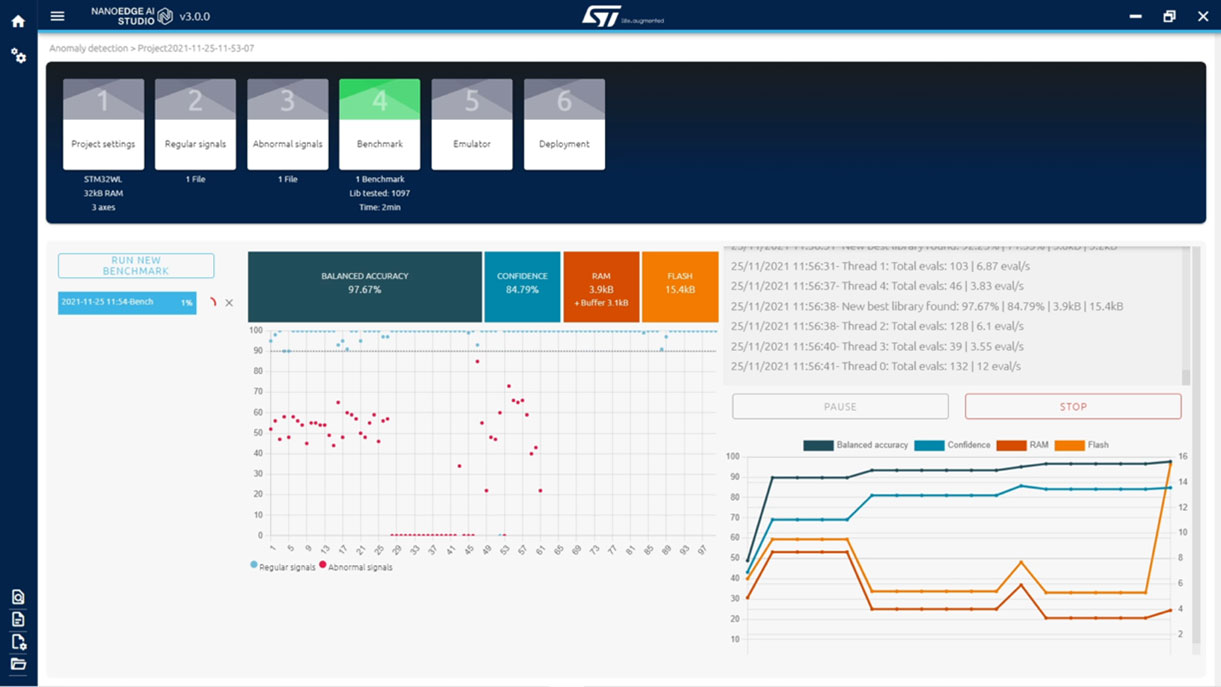

The STM32 machine learning ecosystem includes a tool called NanoEdge (Figure 2) to help developers without machine learning expertise or experience train and deploy machine learning applications to their devices.

For example, developers can easily create machine learning libraries for applications such as anomaly detection, outlier detection, classification, and regression. These libraries can then be deployed to an STM32 microcontroller.

Figure 2: NanoEdge can walk developers through the entire machine learning development process. (Image source: STMicroelectronics)
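For readers new to these application types, the following C sketch illustrates what an anomaly detector does at a conceptual level: it learns what "normal" looks like from samples, then flags readings that stray too far from it. This is a hand-rolled running mean and variance check (Welford's method), not the algorithm or API that NanoEdge generates; the `anomaly_*` names and the threshold parameter `k` are assumptions made purely for illustration.

```c
#include <stdbool.h>
#include <stdint.h>

/* Running statistics of the signal observed during learning. */
typedef struct {
    uint32_t count;
    double mean;
    double m2; /* sum of squared deviations from the running mean */
} anomaly_model_t;

void anomaly_init(anomaly_model_t *m)
{
    m->count = 0;
    m->mean = 0.0;
    m->m2 = 0.0;
}

/* Learn from one "normal" sample using Welford's online update. */
void anomaly_learn(anomaly_model_t *m, double sample)
{
    m->count++;
    double delta = sample - m->mean;
    m->mean += delta / (double)m->count;
    m->m2 += delta * (sample - m->mean);
}

/* Flag a sample that lies more than k standard deviations from the mean.
 * The comparison is done on squared values to avoid a square root. */
bool anomaly_detect(const anomaly_model_t *m, double sample, double k)
{
    if (m->count < 2) {
        return false; /* not enough data to estimate a variance */
    }
    double variance = m->m2 / (double)(m->count - 1);
    double deviation = sample - m->mean;
    return deviation * deviation > k * k * variance;
}
```

A real library would typically work on buffers of sensor data and a far richer model than a single mean and variance, which is exactly the work a tool such as NanoEdge is meant to take off the developer's hands.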

What about embedded teams with machine learning experts?

When a development team includes machine learning experts, it can draw on a much wider variety of tools to develop machine learning models for an STM32 microcontroller. For example, the team can use TensorFlow Lite, PyTorch, MATLAB, or some other tool to create the model. The problem that often arises is that these tools produce an unoptimized library that doesn't run very efficiently on a microcontroller.

Within the STM32 family, developers can leverage the STM32Cube.AI plug-in to import machine learning models and optimize them to run efficiently on STM32 microcontrollers. The tool allows developers to run and tune their machine learning models on the target microcontroller. First, developers can import their model into the toolchain, as shown in Figure 3. Then, they can convert the model, analyze the network, and validate it. Once this has been done, developers can generate code from within the STM32CubeIDE, creating a machine learning framework around the model to simplify the embedded software development.

Figure 3: A sinewave generator machine learning model is imported into STM32Cube.AI. (Image source: Beningo Embedded Group)

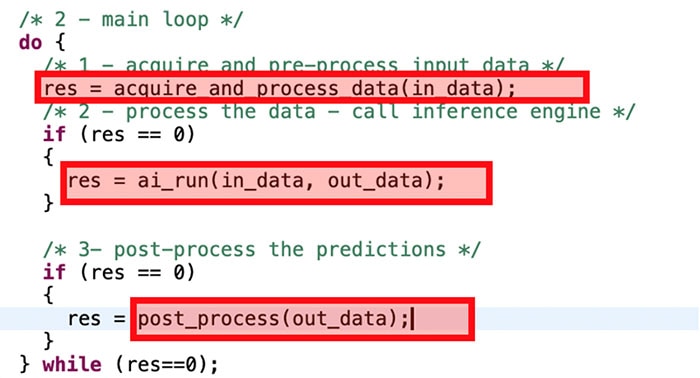

Embedded software developers must provide the appropriate inputs to the machine learning model, as well as the code to check the result. Actions can be performed on the result, or the result could be averaged or manipulated in whatever way makes sense for the application. Figure 4 shows the simple application loop to run and check the results of the model.

Figure 4: The main loop surrounds the machine learning model. (Image source: Beningo Embedded Group)
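As a rough illustration of that pattern, the C sketch below shows one pass of such a loop: feed an input to the model, check that the result is plausible, and fold it into a running average. It is a sketch under stated assumptions, not the generated code: `model_run_fn` is a hypothetical stand-in for the inference call the toolchain generates, and `placeholder_model_run`, `result_avg_t`, and `app_step` are invented names for this example.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical signature standing in for the generated inference call.
 * Returns true when inference succeeded. */
typedef bool (*model_run_fn)(const float *input, float *output);

/* Placeholder "model" so the sketch runs without a real network:
 * it simply doubles its input. */
bool placeholder_model_run(const float *input, float *output)
{
    *output = 2.0f * (*input);
    return true;
}

/* Running average of the accepted results. */
typedef struct {
    float sum;
    size_t count;
} result_avg_t;

/* One pass of the application loop: run the model on one input, reject
 * failed or out-of-range results, and update the running average.
 * Returns true and writes the new average when the result is accepted. */
bool app_step(model_run_fn run, float input, float lo, float hi,
              result_avg_t *avg, float *average_out)
{
    float output = 0.0f;
    if (!run(&input, &output)) {
        return false; /* inference failed */
    }
    if (output < lo || output > hi) {
        return false; /* implausible result, leave the average untouched */
    }
    avg->sum += output;
    avg->count++;
    *average_out = avg->sum / (float)avg->count;
    return true;
}
```

On a target, `app_step` would be called from the `while (1)` loop in `main()` with the generated inference function in place of the placeholder, and the accepted value would drive whatever action the application requires.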

Conclusion

Machine learning, boosted by the TinyML effort, is quickly finding its way into embedded applications. To be successful, developers need a platform that matches their team's level of machine learning expertise. While new platform options seem to emerge almost daily, the STM32 ecosystem provides developers with a simple and scalable solution path.

Within the ecosystem, developers with machine learning experience can keep using their traditional tools and rely on the STM32Cube.AI plug-in to optimize and tune their solutions. Teams without machine learning expertise can use NanoEdge to simplify machine learning library development and get a solution up and running quickly and cost-effectively.
