Release the Kraken: Easily Bring Artificial Intelligence to Any Industrial System

There is widespread interest in deploying artificial intelligence (AI) and machine learning (ML) applications in industrial environments to increase productivity and efficiency while also achieving savings in operating costs. However, as any engineer or engineering manager will tell you, there are three major issues that need to be addressed when adding "smarts" to an installed base of "dumb" machines, from motors to HVAC systems.

First, there aren’t enough people with AI and ML expertise to satisfy the demand, and what experts are available don’t come cheap. Second, there is a lack of qualified data sets with which to train the AI and ML systems, and any data sets that are available are jealously guarded. Third, AI and ML systems have traditionally demanded high-end processing platforms on which to run.

What is required is a way to enable existing engineers and developers without AI and ML experience to quickly create AI and ML systems and deploy them on efficient, low-cost microcontroller platforms. An interesting startup called Cartesiam.AI is addressing all these issues with its NanoEdge AI Studio. Let me explain how.

Quantifying the rise of AI and ML

By the middle of 2020, and depending on the source, there are expected to be between 20 and 30 billion edge devices worldwide,1,2 where the term "edge device" refers to connected devices and sensors located at the edge of the internet where it interfaces with the real world. Of these, only around 0.3% will be augmented with AI and ML capabilities. It is further estimated that there will be anywhere between 40 and 75 billion such devices by 2025,3,4 by which time it is expected that at least 25% will be required to be equipped with AI and ML capabilities.
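To put those percentages in absolute terms, the following minimal Python sketch multiplies the quoted device ranges by the quoted shares. The figures are the estimates cited above; the variable and function names are purely illustrative.

```python
# Edge-device estimates quoted above (low and high ends of each range).
devices_2020 = (20e9, 30e9)
devices_2025 = (40e9, 75e9)

ai_share_2020 = 0.003  # "around 0.3%"
ai_share_2025 = 0.25   # "at least 25%"


def ai_enabled_range(device_range, share):
    """Return (low, high) counts of AI/ML-enabled devices for a given share."""
    low, high = device_range
    return low * share, high * share


ai_2020 = ai_enabled_range(devices_2020, ai_share_2020)
ai_2025 = ai_enabled_range(devices_2025, ai_share_2025)

print(f"2020: {ai_2020[0] / 1e6:.0f} to {ai_2020[1] / 1e6:.0f} million AI/ML-enabled devices")
print(f"2025: {ai_2025[0] / 1e9:.2f} to {ai_2025[1] / 1e9:.2f} billion AI/ML-enabled devices")
```

On these estimates, the number of AI/ML-enabled devices would have to grow from tens of millions to at least 10 billion, which is more than a hundredfold increase in about five years.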

A major opportunity in industrial deployments is taking existing "dumb" machines and making them "smart" by augmenting them with AI and ML capabilities. It's difficult to overstate the potential here; for example, it is estimated that there is $6.8 trillion of existing dumb (legacy) infrastructure and machinery in the USA alone.5

How to do AI and ML more efficiently at the edge

The Internet of Things (IoT) and the Industrial IoT (IIoT) are already pervasive and objects are already becoming connected—the next challenge is to make these objects smart.

The traditional way to create an AI/ML application is to define a neural network architecture, including the number of layers, the number of neurons per layer, and the ways in which the various neurons and layers are connected together. The next step is to access a qualified data set (which may itself have taken vast amounts of time and resources to create). The data set is used to train the network in the cloud (i.e., using large numbers of high-end servers with humongous computational capabilities). Finally, the trained network is translated into a form suitable for deployment in the edge device.
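Those architecture choices matter at the edge because they set the memory footprint of the deployed network. As a rough illustration, the sketch below counts the weights and biases in a fully connected network. The layer sizes are a hypothetical example, not taken from any particular application, and the function name is my own.

```python
def dense_parameter_count(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the number of neurons per layer, input layer first.
    """
    total = 0
    for inputs, outputs in zip(layer_sizes, layer_sizes[1:]):
        total += inputs * outputs  # one weight per connection
        total += outputs           # one bias per neuron in the receiving layer
    return total


# Hypothetical architecture: 256 input samples, two hidden layers, 4 outputs.
layers = [256, 64, 32, 4]
params = dense_parameter_count(layers)
bytes_float32 = params * 4  # 4 bytes per 32-bit floating-point parameter

print(f"{params} parameters, about {bytes_float32 / 1024:.1f} KiB as float32")
```

Even this small network needs tens of kilobytes just to store its parameters, which is a meaningful share of the memory on many low-end microcontrollers.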

According to the IBM Quant Crunch Report,6 data science and analytics (DSA) are no longer buzzwords; rather, they are essential business tools. However, there is growing concern that the supply of people with DSA skills is lagging dangerously behind demand, with the shortfall of data scientists currently running at 130,000 in the USA alone.

Unfortunately, the lack of access to skilled data scientists and qualified data sets is an obstacle to the rapid and affordable creation of AI/ML-enabled smart objects. According to Cisco,7 the failure rate for IoT projects in general is around 74%, and this failure rate increases with regard to AI/ML-enabled projects.

According to IDC,8 there are around 22 million software developers in the world. Of these, approximately 1.2 million focus on embedded systems, and only around 0.2% of those embedded developers have even minimal AI/ML skills.
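A quick calculation shows how small that pool is. The sketch below uses only the figures quoted above; the variable names are illustrative.

```python
software_developers = 22_000_000  # worldwide estimate quoted above
embedded_developers = 1_200_000   # subset focused on embedded systems
ai_ml_share = 0.002               # "around 0.2%"

embedded_with_ai_skills = embedded_developers * ai_ml_share
embedded_fraction = embedded_developers / software_developers

print(f"Embedded developers: {embedded_fraction:.1%} of all developers")
print(f"Embedded developers with AI/ML skills: {embedded_with_ai_skills:,.0f}")
```

In other words, on these estimates only about 2,400 embedded developers worldwide have even minimal AI/ML skills.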

Some AI and ML systems, such as machine vision performing object detection and identification, demand the use of special, high-end computational devices, including graphics processing units (GPUs) and/or field-programmable gate arrays (FPGAs). However, new developments in AI/ML technologies mean that the vast majority of non-vision AI/ML applications can be deployed on the relatively low-end microcontrollers that are prominent in embedded systems.

In 2020, according to Statista,9 global shipments of microcontrollers are expected to amount to approximately 28 billion units (that's around 885 each second), making microcontroller-based platforms the most pervasive hardware on the market. With their low cost and low power consumption, microcontrollers are the perfect platform to bring intelligence to the edge.
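The per-second figure can be checked with a few lines of Python. This is just the arithmetic behind the number quoted above, taking into account that 2020 is a leap year.

```python
import calendar

shipments_2020 = 28e9  # units, the estimate quoted above

days = 366 if calendar.isleap(2020) else 365
seconds_in_year = days * 24 * 60 * 60

units_per_second = shipments_2020 / seconds_in_year
print(f"{units_per_second:.0f} microcontrollers per second")
```

With a 365-day year the result would be closer to 888 per second; either way, the order of magnitude is the point.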

Even for large enterprise organizations with access to data scientists and data sets and essentially unlimited budgets, it's difficult to become proficient in AI and ML. For smaller companies, it may well be impossible. If the situation stays "as-is", there's no way 25% of edge devices will be augmented with AI/ML capabilities by 2025. If only existing developers of microcontroller-based embedded systems were equipped to develop AI/ML applications...

A simple, rapid, and affordable way to develop AI/ML-enabled smart objects

The most ubiquitous computational platform for embedded applications in industrial environments is the microcontroller, and no family of microcontroller cores is more widespread than Arm's Cortex-M, especially the M0, M0+, M3, M4, and M7.

Figure 1: The V2M-MPS2-0318C is a powerful development platform for Arm Cortex-M-based applications with lots of I/O and an LCD display. (Image source: Arm)

One thing to which companies do have access is traditional embedded developers. What is required is some way to make these developers act like AI/ML experts without having to train them. The ideal solution would be to give traditional embedded developers the capability to quickly and easily create self-aware machines that can automatically learn and understand their environment, identify patterns and detect anomalies, predict issues and outcomes, and do all this on affordable microcontroller-based platforms at the edge where the data is generated and captured.

The solution, as I alluded to earlier, is NanoEdge AI Studio from Cartesiam.AI. Using this integrated development environment (IDE), which runs on Windows 10 or Ubuntu Linux, the embedded developer first selects the target microcontroller, ranging from an Arm Cortex-M0 to a Cortex-M7. The developer also specifies the maximum amount of RAM to be allocated to the solution. If you’re a bit rusty, or are new to all this, a good place to (re)start is with the V2M-MPS2-0318C Arm Cortex-M Prototyping System+ (Figure 1).

The V2M-MPS2-0318C is part of the Arm Versatile Express range of development boards. It comes with a relatively large FPGA for prototyping Cortex-M-based designs. To that end, it comes with fixed encrypted FPGA implementations of all the Cortex-M processors. Also, it has quite a few useful peripherals including PSRAM, Ethernet, touchscreen, audio, a VGA LCD, SPI, and GPIO.

Next, the developer needs to select the number and types of sensors to be used; the beauty of the Cartesiam.AI approach is that there are no hard limitations on the sensors that can be used. For example, they can include:

- One-, two-, and three-axis accelerometers (basically any frequency for vibration analysis)

- Magnetic sensors

- Temperature sensors

- Microphones (for sound recognition)

- Hall effect sensors for motor control

- Current monitoring sensors

It's important to note that the developer is not required to define specific part numbers—just the general sensor types.
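To make the inputs gathered so far concrete, here is a minimal Python sketch of how a developer might record them: the target core, the RAM budget, and the general sensor types. This is not NanoEdge AI Studio's project format or API; the class names, field names, and the 32 KiB budget are all hypothetical and used purely for illustration.

```python
from dataclasses import dataclass, field

# Cores named in this article; treating them as a fixed set is an assumption.
SUPPORTED_CORES = {"Cortex-M0", "Cortex-M0+", "Cortex-M3", "Cortex-M4", "Cortex-M7"}


@dataclass
class SensorSpec:
    kind: str      # general sensor type, not a specific part number
    axes: int = 1  # number of channels sampled together


@dataclass
class ProjectSpec:
    target_core: str
    max_ram_bytes: int
    sensors: list = field(default_factory=list)

    def validate(self):
        """Raise ValueError if the inputs are inconsistent; return self otherwise."""
        if self.target_core not in SUPPORTED_CORES:
            raise ValueError(f"unsupported core: {self.target_core}")
        if self.max_ram_bytes <= 0:
            raise ValueError("RAM budget must be positive")
        if not self.sensors:
            raise ValueError("at least one sensor is required")
        return self

    def total_channels(self):
        """Total number of data channels across all sensors."""
        return sum(sensor.axes for sensor in self.sensors)


spec = ProjectSpec(
    target_core="Cortex-M4",
    max_ram_bytes=32 * 1024,  # hypothetical budget
    sensors=[SensorSpec("accelerometer", axes=3), SensorSpec("temperature")],
).validate()
print(spec.total_channels())
```

Note that the sensors are described only by type and channel count, mirroring the point that no part numbers are needed at this stage.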

The next step is to load contextual sensor data; that is, generic data associated with each sensor to give the system an idea of what it's going to be dealing with.

NanoEdge AI Studio is equipped with an extensive suite of AI/ML "building blocks" that can be used to create solutions for 90% or more of industrial AI/ML tasks. Once it has been informed of the target microcontroller, the number and types of sensors, and the generic type of sensor data it can expect to see, it will generate the best AI/ML library solution out of 500 million possible combinations.

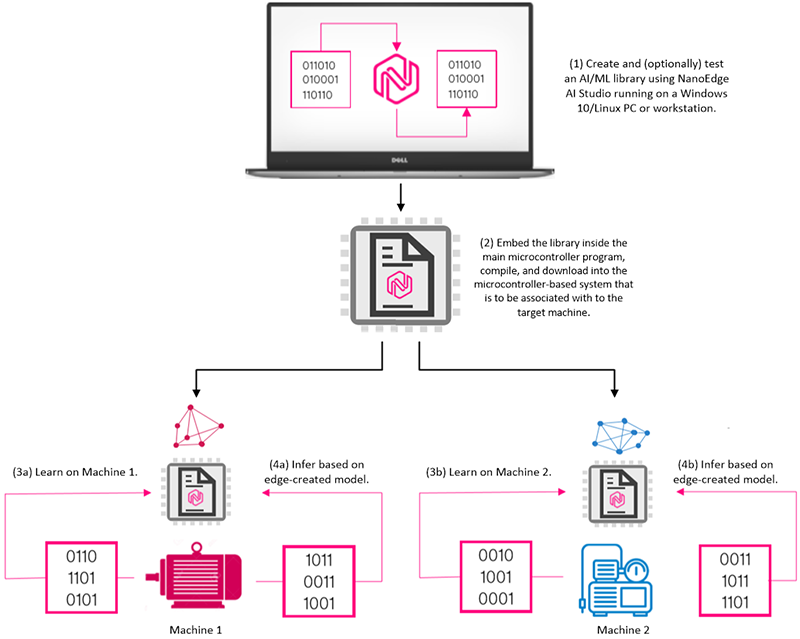

If the developer wishes, this solution may optionally be tested on the same PC running the NanoEdge AI Studio IDE by means of an included emulator, after which it is embedded inside the main microcontroller program, compiled, and downloaded into the microcontroller-based system that is to be associated with the target machine.

Purely for the sake of an example, let's assume that we have two dumb machines that we wish to make smart. One of these machines might be a pump, while the other might be a generator. Also, for the sake of this example, let's assume that we are creating a single solution using a temperature sensor and a 3-axis accelerometer, and that the same solution will be deployed on both machines (Figure 2).

Figure 2: After NanoEdge AI Studio IDE has been used to create and (optionally) test an AI/ML library, that library is embedded inside the main program, compiled, and downloaded into the microcontroller-based system that is to be associated with the target machine(s). Following a learning phase (typically a week of running 24 hours per day), the inference engine can be used to spot and report anomalies and predict future outcomes. (Image source: Max Maxfield)

Of course, these two machines will have completely different characteristics. In fact, even two otherwise identical machines may have very different characteristics depending on their location and environment. For example, two identical pumps located 20 meters apart in the same room of the same factory may exhibit different vibration profiles depending on where they are mounted (one on concrete, the other above wooden floor joists) and the lengths (and shapes and materials) of the pipes to which they are connected.

The key to the whole process is that the AI/ML solutions are individually trained on known good machines, where this training typically takes a week running non-stop, 24 hours per day, thereby allowing the system to learn from temperature fluctuations and vibration patterns. Additional training sessions can of course be performed at later dates to fine-tune the models to account for environmental variations associated with different seasons (for external applications) and other expected variables.

Once the solutions have been trained, they can begin to infer from any new data that comes in, identifying patterns and detecting anomalies, predicting issues and outcomes, and presenting their conclusions to a dashboard for both technical and managerial analysis as needed.
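The learn-then-infer flow can be illustrated with a deliberately simple stand-in. The Python sketch below is not Cartesiam.AI's algorithm or library API; it assumes a basic statistical baseline (a running mean and variance per channel, flagged when a reading strays too many standard deviations away), and every name and number in it is hypothetical. For brevity, it uses two channels (temperature and a single vibration level) rather than a full 3-axis accelerometer, and the sensor readings are simulated.

```python
import math
import random


class BaselineDetector:
    """Learns per-channel mean and variance, then flags samples far from them."""

    def __init__(self, channels, threshold=4.0):
        self.n = 0
        self.mean = [0.0] * channels
        self.m2 = [0.0] * channels  # running sum of squared deviations
        self.threshold = threshold  # allowed distance in standard deviations

    def learn(self, sample):
        """Update the baseline with one known-good sample (Welford's method)."""
        self.n += 1
        for i, x in enumerate(sample):
            delta = x - self.mean[i]
            self.mean[i] += delta / self.n
            self.m2[i] += delta * (x - self.mean[i])

    def is_anomaly(self, sample):
        """Return True if any channel deviates beyond the threshold."""
        if self.n < 2:
            raise RuntimeError("learn from at least two samples first")
        for i, x in enumerate(sample):
            std = math.sqrt(self.m2[i] / (self.n - 1))
            if abs(x - self.mean[i]) > self.threshold * max(std, 1e-9):
                return True
        return False


def simulate(rng, temperature, vibration, count):
    """Generate made-up (temperature, vibration) readings for a healthy machine."""
    return [(rng.gauss(temperature, 0.5), rng.gauss(vibration, 0.05))
            for _ in range(count)]


# The same detector code is deployed on both machines; each learns its own baseline.
rng = random.Random(0)
pump, generator = BaselineDetector(2), BaselineDetector(2)
for sample in simulate(rng, temperature=40.0, vibration=0.3, count=1000):
    pump.learn(sample)
for sample in simulate(rng, temperature=70.0, vibration=1.2, count=1000):
    generator.learn(sample)

typical_pump_reading = (40.2, 0.31)
print(pump.is_anomaly(typical_pump_reading))       # checked against the pump's baseline
print(generator.is_anomaly(typical_pump_reading))  # checked against the generator's baseline
print(pump.is_anomaly((40.2, 0.9)))                # vibration far above the pump's baseline
```

The point of the sketch is the design choice rather than the statistics: the model is learned on the device, from the individual machine's own known-good behavior, so a reading that is perfectly normal for the pump would be flagged on the generator, and vice versa.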

Conclusion

I see NanoEdge AI Studio as a "game changer". It’s intuitive and will allow designers of embedded systems using low-power, low-cost Arm Cortex-M microcontrollers (which are embedded in billions of devices worldwide) to quickly, easily, and inexpensively integrate AI/ML into their industrial systems. This transforms dumb machines into smart machines, increasing productivity and efficiency while also achieving much-anticipated savings in operating costs.

References

1: https://www.vxchnge.com/blog/iot-statistics

2: https://securitytoday.com/articles/2020/01/13/the-iot-rundown-for-2020.aspx

3: https://www.helpnetsecurity.com/2019/06/21/connected-iot-devices-forecast/

4: https://securitytoday.com/articles/2020/01/13/the-iot-rundown-for-2020.aspx

5: https://www.kleinerperkins.com/perspectives/the-industrial-awakening-the-internet-of-heavier-things/

6: https://www.ibm.com/downloads/cas/3RL3VXGA

7: https://newsroom.cisco.com/press-release-content?articleId=1847422

8: https://www.idc.com/getdoc.jsp?containerId=US44363318

9: https://www.statista.com/statistics/935382/worldwide-microcontroller-unit-shipments/
