Giving Vehicles the Power of Sight with the Latest Camera Technologies

Contributed by DigiKey's European Editors

2016-10-11

As camera systems become more prevalent in vehicles, there is increased demand for retrofit dashboard video recorders (dashcams), driver assistance and warning cameras, reversing cameras and night vision systems. As consumers request more functionality from these systems, a variety of new camera technologies have emerged, which can be used either on their own or alongside regular CMOS image sensors in complex sensor fusion applications.

At the same time, developing systems with these new technologies has never been easier thanks to the range of reference designs and development kits available to get you started. Here is a selection of technologies and kits we think are amongst the most promising for this challenging application.

Infrared cameras

Infrared cameras produce images from wavelengths longer than visible light: objects radiating heat look brighter, and cold areas look darker. This is very useful for machine vision systems because it allows them to detect people or animals in the field of view, such as pedestrians, pets or wild animals on the road around a vehicle. IR cameras are especially effective when used to augment noisy data from visible light cameras, but they may also be used on their own to enable night vision systems that help identify pedestrians or animals when driving in the dark, or when reversing into a dark garage. Inside the car, infrared cameras can detect the presence of pets or children to stop them being inadvertently left in a hot vehicle, or they can be used for gesture recognition in camera-based control systems.

Infrared cameras in the form of CMOS image sensors that detect long-wave infrared (LWIR) have been on the market for some years, but until recently they were relatively bulky because of the physical size limits imposed by the wavelengths being detected. Today’s processing technologies and miniaturization techniques have resulted in tiny devices that cost around a tenth of the price of traditional thermal cameras, enabling their widespread use in consumer electronics and aftermarket vehicle imaging systems.

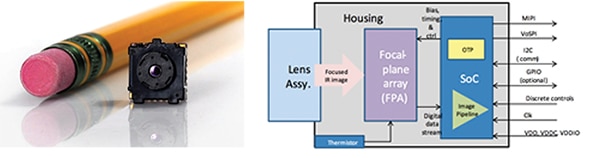

Figure 1: (a) FLIR Lepton miniature IR camera. (b) Block diagram for the Lepton camera module.

FLIR Systems’ Lepton infrared camera is a good example of this trend. Cutting-edge manufacturing techniques allow low-cost lenses to be built at the wafer level during the same process as the imager itself, reducing the number of process steps. Wafer-level packaging, combined with single-chip integrated control electronics that include DSP and SPI interfaces, keeps the device’s footprint to a minimum: the 80 x 60 pixel camera is small enough to fit onto the same 32-pin connector used by many mobile phone cameras today. Three models are available, with the field of view set to either 25° for longer range imaging or 50° for wider scenes.

FLIR’s evaluation board for this camera, the Lepton Thermal Camera Breakout board, allows very quick evaluation of these infrared camera modules, and it is compatible with various ARM-based development systems such as BeagleBoard and Raspberry Pi.

Time of flight 3D camera

Another technology coming to vehicle cameras is Time of Flight (TOF) 3D image sensing, thanks to advancements in integration and miniaturization. These complex devices effectively map 3D surfaces with accuracy in the order of millimetres. Modulated infrared light from a laser LED is emitted and the sensor detects its reflection. The phase shift between the illuminating light and the reflected light is measured and used to calculate the distance between objects in the field of view and the sensor. This calculation is performed for each pixel and the results are used to create a point-map of three-dimensional image data.
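The phase-to-distance step described above can be sketched in a few lines. This is only an illustration of the general continuous-wave TOF relationship, not the calculation performed inside any particular sensor; the function names and the 50 MHz modulation frequency used in the usage note are assumptions chosen for the example.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def phase_to_distance(phase_rad, mod_freq_hz):
    """Convert a measured phase shift (radians) into a distance in metres.

    The light travels to the object and back, so the round trip covers
    twice the distance; that factor of 2 is why the divisor is 4*pi
    rather than 2*pi.
    """
    return C * phase_rad / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz):
    """Largest distance measurable before the phase wraps past 2*pi."""
    return C / (2.0 * mod_freq_hz)


def depth_map(phase_frame, mod_freq_hz):
    """Apply the per-pixel calculation to a 2D frame of phase values."""
    return [[phase_to_distance(p, mod_freq_hz) for p in row]
            for row in phase_frame]
```

For example, with an assumed 50 MHz modulation frequency, a phase shift of π radians corresponds to roughly 1.5 m, and the phase wraps at roughly 3 m. A higher modulation frequency gives finer distance resolution at the cost of a shorter unambiguous range.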

Compared to other methods of 3D imaging, such as using two cameras for stereo vision or projecting structured light patterns onto the scene and measuring the distortion, TOF compares favorably in terms of physical compactness, response time, and simplicity. It is also relatively unaffected by changing ambient light conditions.

It’s easy to imagine how TOF 3D cameras could be used in an automotive setting to provide more sophisticated data for reversing camera systems, which would be able to discern the distance between the reversing car and any obstacles in its path. TOF 3D imaging could also be used in autonomous driving applications to help the car understand scenes (as it helps to separate the background from the foreground), or inside the car for facial recognition or gesture detection systems.

Figure 2: (a) Block diagram for TI’s camera development kit for its TOF sensor. (b) The sensor board.

The leading TOF sensor on the market comes from Texas Instruments: the OPT8241. This QVGA format sensor (320 x 240 resolution) has a maximum frame rate of 150 fps, supports a high pixel modulation frequency (>50 MHz) and offers up to a 5x increase in signal-to-noise ratio. Designed to work with this IC is the OPT9221 TOF controller, which includes a programmable timing generator to control the light modulation, readout and digitization sequence for the images. Since it’s programmable, the timing generator can be optimized for a number of variables, including ambient light cancellation and motion robustness, and it can also be programmed with a region of interest.

TI’s camera development kit for TOF sensors (Figure 2) features the OPT8241 image sensor, plus the OPT9221 TOF controller and an analog front end (the VSP5324). With this setup, frame rates up to 60 fps are possible, and the field of view is 75° (horizontal) and 60° (vertical). It can map objects up to 4 meters away, so it’s perfect for warning the driver they are about to reverse into a post.
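As a rough illustration of the reversing use case, a depth map from a TOF camera could be reduced to a simple driver alert. The sketch below assumes the depth map is already available as a 2D list of distances in metres, with 0.0 marking pixels that returned no valid reading; the function names and the warning and stop thresholds are hypothetical, and only the 4 m maximum range comes from the kit description above.

```python
MAX_RANGE_M = 4.0  # maximum mapping distance quoted for the kit


def nearest_obstacle(depth_map_m, max_range_m=MAX_RANGE_M):
    """Return the smallest valid distance in the frame, or None if empty.

    Readings of 0.0 (assumed to mean "no return") and readings beyond
    the sensor's usable range are ignored.
    """
    valid = [d for row in depth_map_m for d in row if 0.0 < d <= max_range_m]
    return min(valid) if valid else None


def reversing_alert(depth_map_m, warn_m=1.0, stop_m=0.3):
    """Classify the frame as 'clear', 'warning' or 'stop'.

    The thresholds are illustrative values, not recommendations.
    """
    nearest = nearest_obstacle(depth_map_m)
    if nearest is None:
        return "clear"
    if nearest <= stop_m:
        return "stop"
    if nearest <= warn_m:
        return "warning"
    return "clear"
```

A practical system would likely filter noisy pixels and restrict the check to a region of interest behind the vehicle rather than trusting a single minimum value.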

Facial Recognition

The combination of the latest camera technology with inexpensive, compact processors has led to the development of facial recognition boards, such as the Omron B5T HVC (human vision component). Omron’s board can recognise individual faces and gestures and will even try to guess the emotion of the faces detected, using a complex proprietary algorithm. Recognizing individuals is a useful feature in vehicles: it could be used to detect who is driving and adjust the radio to their favorite station, or to pre-load the navigation system with their favorite places. It could also tell when kids are in the car and restrict the entertainment system to family-friendly content. Otherwise, it can simply be used to count the number of occupants and adjust the air conditioning accordingly.

Figure 3: Omron facial recognition board.

Omron’s algorithm also enables features such as detecting when the eyes are closed and even gaze direction estimation, already widely used in digital cameras to tell when the subject is looking at the camera. These features could potentially be useful in an aftermarket camera system that detects whether the driver is looking at the road or getting distracted by something inside the car, or that sounds an alarm to wake them up if they appear to be falling asleep.

Omron’s facial recognition board measures just 60 x 40 mm and draws less than 0.25 A. The board outputs easy-to-use numerical values for parameters such as user ID, blink degree for each eye, horizontal and vertical angle of gaze and estimated age, as well as, optionally, the captured image. For best performance, the subject should be no more than 1.3 metres from the camera.
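To show how numerical outputs like these might feed a driver monitoring function, here is a minimal sketch. It does not use Omron's actual interface: the `FaceReading` fields, the blink scale (0 for fully open, 1000 for fully closed), the angle limits and the frame counts are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class FaceReading:
    """One frame of per-face values (hypothetical field names and scales)."""
    left_blink: int        # assumed scale: 0 = open, 1000 = closed
    right_blink: int
    gaze_yaw_deg: float    # horizontal gaze angle, 0 = straight ahead
    gaze_pitch_deg: float  # vertical gaze angle, 0 = straight ahead


def eyes_closed(r, closed_threshold=700):
    """Both eyes must exceed the threshold to count as closed."""
    return r.left_blink >= closed_threshold and r.right_blink >= closed_threshold


def gaze_off_road(r, max_yaw_deg=20.0, max_pitch_deg=15.0):
    """True when the gaze falls outside an assumed forward-looking window."""
    return abs(r.gaze_yaw_deg) > max_yaw_deg or abs(r.gaze_pitch_deg) > max_pitch_deg


def classify_driver(readings, min_frames=5):
    """Return 'drowsy', 'distracted' or 'attentive' for a frame sequence.

    A condition only triggers after it has held for min_frames
    consecutive frames, so ordinary blinks and glances are ignored.
    """
    closed_run = 0
    off_run = 0
    for r in readings:
        closed_run = closed_run + 1 if eyes_closed(r) else 0
        off_run = off_run + 1 if gaze_off_road(r) else 0
        if closed_run >= min_frames:
            return "drowsy"
        if off_run >= min_frames:
            return "distracted"
    return "attentive"
```

Requiring several consecutive frames is a simple form of debouncing; the right window length would depend on the frame rate and on how quickly an alarm needs to sound.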

Dual Dashboard Cameras

Dashboard or windshield-mounted cameras that record what’s happening outside the car are very popular, as they are frequently used as ‘black boxes’: the footage is used to determine who was at fault in the event of an accident. As consumer acceptance of these systems grows, they are gaining features such as lane departure warning or forward collision warning capability, thanks to image processing. As they become more sophisticated, some are using two cameras, either for stereoscopic 3D vision for object detection, or simply for front and rear views (through the front and back windows).

Figure 4: (a) Block diagram for Lattice dual-input reference design. (b) The reference design constructed using the MachXO2 dual image sensor interface board.

The challenge with dual-camera input systems is maintaining image quality while keeping the cost down. Some systems use two camera modules and two image processors, or a more expensive image processor that can handle two inputs. In fact, most image processors can support data from two image sensors, even though they were designed for only one input. Lattice has come up with a reference design for dual camera systems using its MachXO2 programmable logic device as a bridge (Figure 4). This design allows both cameras to interface with one image processor, keeping parts count and cost down. It can synchronize and merge the two serial sensor interfaces and convert them to a CMOS parallel bus.

This reference design can be constructed using the MachXO2 dual image sensor interface board, the 9MT024 Sensor NanoVesta Headboard and the HDR-60 Development Kit. The Lattice device deserializes the two sensor interfaces, and then combines the images with the assistance of SDRAM for frame buffering. The output is a parallel bus carrying the combined images, so an image signal processor can process the stream.
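The merge step can be pictured with a small software model. The reference design performs this in programmable logic, and the text does not specify the layout of the combined image, so the side-by-side arrangement below is an assumption, as is the function name. Frames are modelled as lists of pixel rows.

```python
def merge_side_by_side(left_frame, right_frame):
    """Combine two buffered frames into one double-width frame.

    Each output row is the corresponding row of the left frame followed
    by the corresponding row of the right frame, so a downstream image
    processor sees a single image that is twice as wide.
    """
    if len(left_frame) != len(right_frame):
        raise ValueError("frames must have the same number of rows")
    return [l_row + r_row for l_row, r_row in zip(left_frame, right_frame)]
```

In this model, the need for frame buffering shows up as the requirement that both frames are complete before merging: two free-running sensors will not deliver matching rows at exactly the same moment, so at least one stream has to be held until the other catches up.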

As the industry continues to make progress in intelligent camera systems for autonomous vehicles, some of that technology is filtering down to ordinary cars. With new camera technologies as well as complex image processing solutions becoming more widely available, these innovations can be used in retrofit camera systems which add a level of intelligence to existing vehicles, improving comfort, entertainment and safety.

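Disclaimer: The opinions, beliefs, and viewpoints expressed by the various authors and/or forum participants on this website do not necessarily reflect the opinions, beliefs, and viewpoints of DigiKey or official policies of DigiKey.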