OpenCV LK Homography Target Tracking Using UNIHIKER

2025-08-18 | By DFRobot

Introduction

This project shows how to do Lucas-Kanade (LK) homography target tracking with OpenCV on UNIHIKER. An external USB camera is connected to UNIHIKER and used to detect a target and track it.

Project Objectives

Learn how to use the LK homography method from the OpenCV library to detect a target and track it.

Hardware List

- 1 UNIHIKER - IoT Python Single Board Computer with Touchscreen

- 1 Type-C&Micro 2-in-1 USB Cable

- 1 USB camera

Software

Practical Process

1. Hardware Setup

Connect the camera to the USB port of UNIHIKER.


Connect the UNIHIKER board to the computer via USB cable.


2. Software Development

Step 1: Open Mind+, and remotely connect to UNIHIKER.


Step 2: In "Files in UNIHIKER", find the folder named "AI" and create a folder named "OpenCV lk_homography target tracking based on UNIHIKER" inside it. Import the dependency files for this lesson into the new folder.


Step 3: Create a new project file in the same directory as the dependency files and name it "main.py".

Sample Program:


#!/usr/bin/env python

'''
Lucas-Kanade homography tracker
===============================

...
'''

# Python 2/3 compatibility
from __future__ import print_function

import sys

import numpy as np
import cv2 as cv

# Helper modules from the OpenCV Python samples
# (expected to be among the dependency files imported in Step 2).
import video
from common import draw_str
from video import presets

# Parameters for the pyramidal Lucas-Kanade tracker.
lk_params = dict(winSize=(19, 19),
                 maxLevel=2,
                 criteria=(cv.TERM_CRITERIA_EPS | cv.TERM_CRITERIA_COUNT, 10, 0.03))

# Parameters for the corner detector (goodFeaturesToTrack).
feature_params = dict(maxCorners=1000,
                      qualityLevel=0.01,
                      minDistance=8,
                      blockSize=19)


def checkedTrace(img0, img1, p0, back_threshold=1.0):
    # Track the points forward (img0 -> img1) and then backward (img1 -> img0).
    p1, _st, _err = cv.calcOpticalFlowPyrLK(img0, img1, p0, None, **lk_params)
    p0r, _st, _err = cv.calcOpticalFlowPyrLK(img1, img0, p1, None, **lk_params)
    # Keep a point only if the round trip ends within back_threshold pixels of its start.
    d = abs(p0 - p0r).reshape(-1, 2).max(-1)
    status = d < back_threshold
    return p1, status


green = (0, 255, 0)
red = (0, 0, 255)


class App:
    def __init__(self, video_src):
        self.cam = video.create_capture(video_src, presets['book'])
        self.p0 = None
        self.use_ransac = True
        # Create a resizable window, then switch it to full screen.
        cv.namedWindow('lk_homography', cv.WINDOW_NORMAL)
        cv.setWindowProperty('lk_homography', cv.WND_PROP_FULLSCREEN, cv.WINDOW_FULLSCREEN)

    def run(self):
        while True:
            _ret, frame = self.cam.read()
            if not _ret:
                # Stop if the camera did not deliver a frame.
                break
            frame_gray = cv.cvtColor(frame, cv.COLOR_BGR2GRAY)
            vis = frame.copy()
            if self.p0 is not None:
                # Tracking mode: follow the points from the previous frame into this one.
                p2, trace_status = checkedTrace(self.gray1, frame_gray, self.p1)

                self.p1 = p2[trace_status].copy()
                self.p0 = self.p0[trace_status].copy()
                self.gray1 = frame_gray

                # A homography needs at least 4 point pairs.
                if len(self.p0) < 4:
                    self.p0 = None
                    continue
                # Method 0 uses all points; cv.RANSAC rejects outliers.
                H, status = cv.findHomography(self.p0, self.p1, (0, cv.RANSAC)[self.use_ransac], 10.0)
                if H is None:
                    # No homography could be estimated; go back to detection mode.
                    self.p0 = None
                    continue
                h, w = frame.shape[:2]
                # Warp the initial frame onto the current frame and blend the two.
                overlay = cv.warpPerspective(self.frame0, H, (w, h))
                vis = cv.addWeighted(vis, 0.5, overlay, 0.5, 0.0)

                for (x0, y0), (x1, y1), good in zip(self.p0[:, 0], self.p1[:, 0], status[:, 0]):
                    if good:
                        cv.line(vis, (int(x0), int(y0)), (int(x1), int(y1)), (0, 128, 0))
                    cv.circle(vis, (int(x1), int(y1)), 2, (red, green)[good], -1)
                draw_str(vis, (20, 20), 'track count: %d' % len(self.p1))
                if self.use_ransac:
                    draw_str(vis, (20, 40), 'RANSAC')
            else:
                # Detection mode: show the feature points found in the current frame.
                p = cv.goodFeaturesToTrack(frame_gray, **feature_params)
                if p is not None:
                    for x, y in p[:, 0]:
                        cv.circle(vis, (int(x), int(y)), 2, green, -1)
                    draw_str(vis, (20, 20), 'feature count: %d' % len(p))

            cv.imshow('lk_homography', vis)

            ch = cv.waitKey(1)
            if ch == 27:  # ESC: quit
                break
            if ch == ord('a'):  # 'a': use the current frame as the reference and start tracking
                self.frame0 = frame.copy()
                self.p0 = cv.goodFeaturesToTrack(frame_gray, **feature_params)
                if self.p0 is not None:
                    self.p1 = self.p0
                    self.gray0 = frame_gray
                    self.gray1 = frame_gray
            if ch == ord('b'):  # 'b': toggle RANSAC
                self.use_ransac = not self.use_ransac


def main():
    # Use the first command-line argument as the video source, or camera 0 by default.
    try:
        video_src = sys.argv[1]
    except IndexError:
        video_src = 0

    App(video_src).run()
    print('Done')


if __name__ == '__main__':
    print(__doc__)
    main()
    cv.destroyAllWindows()

3. Run and Debug

Step 1: Run the main program

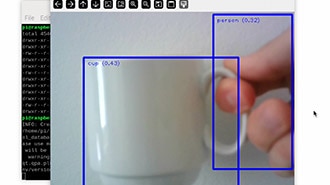

Run the "main.py" program and point the camera at the target (Mind+ in this example). The target's feature points are detected and marked with green dots, and they stay marked after the target moves gently. The number of marked feature points is shown at the top of the screen.


4. Program Analysis

In the above "main.py" file, we use the OpenCV library to read the real-time video stream from the camera. The LK homography approach then tracks feature points in the video in real time and draws their motion trajectories on each video frame. The overall process is as follows.

① Initialization: when the program starts, it creates an instance of the App class, opens the video source, and creates a full-screen window for display.

② Main loop: the program enters an infinite loop. Each iteration reads one video frame and then processes it as follows:

If the initial feature points have been set (i.e. self.p0 is not None), the program uses the Lucas-Kanade optical flow method to track those points into the current frame. It then computes the homography matrix from the point positions in the initial frame and the current frame, and uses that matrix to warp the initial frame onto the current frame, forming a superimposed image.

If no initial feature points have been set yet, the program detects good feature points in the current frame and marks them.

③ User interaction: the program checks the keyboard input. Pressing 'a' takes the good feature points in the current frame as the initial feature points; pressing 'b' toggles whether the RANSAC algorithm is used to compute the homography matrix; pressing 'ESC' exits the program.

④ End: when the main loop ends, the program closes all windows and exits.
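
The 'b' key toggle in step ③ relies on a compact Python idiom: a tuple indexed by a boolean. The sketch below isolates that idiom without OpenCV. The `Settings` class and the stand-in constant `RANSAC` are illustrative assumptions, not part of "main.py"; in the real program the second tuple element is `cv.RANSAC`, and method 0 tells `cv.findHomography` to use all point pairs.

```python
RANSAC = 8  # assumed stand-in for cv.RANSAC so the sketch runs without OpenCV


class Settings:
    """Hypothetical holder for the RANSAC flag, mirroring App.use_ransac."""

    def __init__(self):
        self.use_ransac = True

    def handle_key(self, ch):
        # 'b' flips the flag, as in the main loop of main.py.
        if ch == ord('b'):
            self.use_ransac = not self.use_ransac

    def method(self):
        # A bool is an int in Python: False selects index 0, True selects index 1.
        return (0, RANSAC)[self.use_ransac]


settings = Settings()
before = settings.method()      # RANSAC is on by default
settings.handle_key(ord('b'))   # simulate pressing 'b'
after = settings.method()       # now the plain all-points method
print(before, after)
```

An explicit `RANSAC if self.use_ransac else 0` expression would behave the same way and may read more clearly.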

The main goal of this program is to track feature points in the video and stabilize the scene by finding the homography between the initial frame and the current frame. Feature points are tracked from frame to frame, the points that survive tracking are used to compute a homography matrix, and that matrix maps the initial frame onto the current frame to form a superimposed image.
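
Tracked points can drift, so `checkedTrace` in "main.py" keeps a point only if tracking it forward and then backward brings it back close to where it started. The sketch below reproduces just that filtering step in NumPy on made-up coordinates. The helper name `forward_backward_mask` and the sample arrays are illustrative assumptions; in the real program the back-tracked positions come from a second call to `cv.calcOpticalFlowPyrLK`.

```python
import numpy as np


def forward_backward_mask(p0, p0_back, back_threshold=1.0):
    """Return True for points whose round-trip error is below the threshold."""
    # Largest absolute x or y difference per point, as in checkedTrace.
    d = np.abs(p0 - p0_back).reshape(-1, 2).max(-1)
    return d < back_threshold


# Original positions, in the (N, 1, 2) layout that OpenCV uses for point lists.
p0 = np.array([[[10.0, 10.0]], [[50.0, 20.0]], [[30.0, 40.0]]], dtype=np.float32)
# Hypothetical positions after tracking forward and then backward again.
p0_back = np.array([[[10.2, 9.9]], [[53.0, 20.0]], [[30.0, 40.5]]], dtype=np.float32)

mask = forward_backward_mask(p0, p0_back)
kept = p0[mask]  # boolean indexing drops the unreliable second point
print(mask.tolist(), kept.shape)
```

The second point ends 3 pixels away from its start, so it is rejected; the other two stay within the 1-pixel threshold and are kept.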

Knowledge analysis

lk homography

"Lucas-Kanade" (LK) and "Homography" are two terms that represent different concepts, but in computer vision and image processing, they are often used jointly to achieve specific functions.

Lucas-Kanade (LK):

Lucas-Kanade (LK) is an optical flow estimation algorithm. Optical flow is the apparent per-pixel motion that a moving object produces on the image plane; it describes how objects move across an image sequence. The Lucas-Kanade method assumes that all pixels within a small local window share the same motion and estimates that motion with a least-squares fit over the window. In practice, the LK algorithm is often used to track feature points in a video.
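
To make the least-squares idea concrete, here is a minimal single-window, single-level sketch in NumPy. It is not how `cv.calcOpticalFlowPyrLK` is implemented (OpenCV adds image pyramids and iterative refinement); the function name `lk_window_flow`, the synthetic `pattern`, and the chosen shift are all assumptions made for illustration.

```python
import numpy as np


def lk_window_flow(img0, img1, cx, cy, half=10):
    """Estimate one (u, v) displacement for the window centred at (cx, cy)."""
    iy, ix = np.gradient(img0)   # derivatives along rows (y) and columns (x)
    it = img1 - img0             # change in brightness between the two frames
    win = (slice(cy - half, cy + half + 1), slice(cx - half, cx + half + 1))
    # Brightness constancy, linearised: ix*u + iy*v = -it for every pixel in the window.
    A = np.stack([ix[win].ravel(), iy[win].ravel()], axis=1)
    b = -it[win].ravel()
    flow, *_ = np.linalg.lstsq(A, b, rcond=None)
    return flow                  # array([u, v])


def pattern(x, y):
    """Smooth synthetic texture with intensity changes in both x and y."""
    return np.sin(0.3 * x) + np.cos(0.2 * y) + np.sin(0.1 * (x + y))


ys, xs = np.mgrid[0:64, 0:64].astype(float)
true_u, true_v = 0.3, -0.2
frame0 = pattern(xs, ys)
frame1 = pattern(xs - true_u, ys - true_v)   # same texture moved by (true_u, true_v)

u, v = lk_window_flow(frame0, frame1, cx=32, cy=32)
print("estimated flow: u=%.2f, v=%.2f" % (u, v))
```

The estimate should land close to the true sub-pixel shift. The linearisation only holds for small motions, which is why practical trackers such as the one in "main.py" use an image pyramid (`maxLevel`) to handle larger displacements.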

Homography:

Homography is a transformation that describes a mapping relationship between two planes. In computer vision, Homography is often used for tasks such as image alignment and perspective transformation. For two images, if we know the feature point correspondences between them, we can compute the Homography between these two images and then use this Homography to map one image to the other.
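
As a rough illustration of computing a homography from point correspondences, the sketch below uses the direct linear transform (DLT) in plain NumPy. "main.py" uses `cv.findHomography` instead, which is more robust; the helper names `apply_homography` and `estimate_homography` and the matrix `H_true` are assumptions chosen for this example.

```python
import numpy as np


def apply_homography(H, pts):
    """Map (N, 2) points through the 3x3 matrix H using homogeneous coordinates."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homog @ H.T
    return mapped[:, :2] / mapped[:, 2:3]   # divide by the third coordinate


def estimate_homography(src, dst):
    """DLT estimate of H from at least 4 correspondences (no outlier handling)."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]                      # fix the scale so that H[2, 2] == 1


# A made-up homography with a slight perspective component.
H_true = np.array([[1.1, 0.05, 10.0],
                   [-0.03, 0.95, 5.0],
                   [0.0002, 0.0001, 1.0]])
src = np.array([[0, 0], [100, 0], [100, 100], [0, 100], [50, 30]], dtype=float)
dst = apply_homography(H_true, src)

H_est = estimate_homography(src, dst)
print(np.round(H_est, 4))
```

With exact correspondences the estimate matches `H_true` up to numerical precision. With real tracked points some correspondences are wrong, which is the situation RANSAC in `cv.findHomography` is meant to handle.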

When we talk about "LK Homography", we usually mean combining the LK algorithm with a homography to implement functions such as feature point tracking and image alignment. Specifically, the LK algorithm tracks feature points through a video sequence, the tracked points are used to compute the homography between a reference frame and the current frame, and that homography is then used to align or overlay the images.

project.zip (8 KB)

Feel free to join our UNIHIKER Discord community! You can engage in more discussions and share your insights!