On the Road to Embedded World 2021: Episode 5

Editor’s Note: This is the final post in a five-part series leading up to Embedded World 2021. Episode 1 presented an overview of Embedded World. In Episode 2, Randall brushed up on his C programming. Episode 3 focused on how Object-Oriented Programming can reduce complexity. Episode 4 showed that the fundamental measure of good design is its ability to be reconfigured as requirements change without having to reimplement the building blocks. In this final blog, Episode 5, the ever-expanding space required by operating systems is questioned and system decomposition is touched upon prior to Randall’s keynote presentation at Embedded World 2021.

In my last post, I presented David Parnas’ view that a system should hide information behind its interface and that systems should be decomposed into modules that readily accommodate change. I also mentioned a modern-day advocate of this approach, Juval Löwy, who wrote the book Righting Software.

This approach is different from functional decomposition, which is arguably the more popular approach used today. Yet, there is good evidence that Parnas’ approach is better. Among the reasons is that a system designed for change is a system more easily comprehended. It’s easier to “grok” such a system and it is easier to recompose such systems into new implementations. This is a key concept for increasing reuse because it gives non-experts access to others’ expertise. It is also an opportunity to broaden embedded engineers’ markets by creating leverage for others to make what they envision. So, the fundamental measure of good design is its ability to be reconfigured as requirements change without having to reimplement the building blocks. In this way, variations of core use-cases are simply interactions between building blocks designed for specific use-cases and specific requirements.
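To make the idea concrete, here is a minimal C sketch of a building block that hides a decision likely to change (where a temperature reading comes from) behind a small interface. All of the names (`temp_source`, `average_millidegrees`, `fixed_source`, `table_source`) are hypothetical and invented for illustration; they are not taken from Parnas, Löwy, or any vendor library.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* The interface: the only thing a caller is allowed to depend on.
 * How a reading is obtained is the hidden, changeable decision. */
typedef struct temp_source {
    int32_t (*read_millidegrees)(void *ctx);
    void *ctx;
} temp_source;

/* A building block written once against the interface.
 * It averages n readings from any source. */
int32_t average_millidegrees(const temp_source *src, size_t n)
{
    assert(src != NULL && src->read_millidegrees != NULL);
    if (n == 0) {
        return 0;
    }
    int64_t sum = 0;
    for (size_t i = 0; i < n; i++) {
        sum += src->read_millidegrees(src->ctx);
    }
    return (int32_t)(sum / (int64_t)n);
}

/* Implementation A (hypothetical): a constant reading,
 * standing in for a simple on-chip sensor. */
typedef struct { int32_t value; } fixed_sensor;

static int32_t fixed_read(void *ctx)
{
    return ((fixed_sensor *)ctx)->value;
}

temp_source fixed_source(fixed_sensor *s)
{
    temp_source t = { fixed_read, s };
    return t;
}

/* Implementation B (hypothetical): replays a table of samples,
 * standing in for an external converter or a recorded trace. */
typedef struct {
    const int32_t *samples;
    size_t count;
    size_t next;
} table_sensor;

static int32_t table_read(void *ctx)
{
    table_sensor *t = ctx;
    assert(t->count > 0);
    int32_t v = t->samples[t->next];
    t->next = (t->next + 1) % t->count;  /* wrap around the table */
    return v;
}

temp_source table_source(table_sensor *s)
{
    temp_source t = { table_read, s };
    return t;
}
```

When the requirement changes from one kind of sensor to another, only the value passed to `average_millidegrees` changes. The averaging block itself is recomposed, not reimplemented, which is the property the paragraph above treats as the measure of good design.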

But alas, there are liabilities and hazards to this approach. Among them are inefficiency and waste. As systems grow, they naturally become unwieldy. I invite you to read this interesting blog by someone named Nikita. I am happy to report that it is still available online as of week 53 of 2020. Nikita says:

“An Android system with no apps takes up almost 6 GB. Just think for a second about how obscenely HUGE that number is. What’s in there, HD movies? I guess it’s basically code: kernel, drivers. Some string and resources too, sure, but those can’t be big. So, how many drivers do you need for a phone?

Windows 95 was 30 MB. Today we have web pages heavier than that! Windows 10 is 4 GB, which is 133 times as big. But is it 133 times as superior? I mean, functionally they are basically the same. Yes, we have Cortana, but I doubt it takes 3970 MB. But whatever Windows 10 is, is Android really 150% of that?”

Apple’s M1 chip, announced in November 2020, contains 16 billion transistors. This is enormous by today’s standards. There are some huge FPGAs available to embedded designers, too. Just as general-purpose computer hardware and programs have grown unwieldy, the same is happening in the embedded space.

Digital signal processing was developed as early as the 1960s and it was used to process radar signals. This was before the advent of the microprocessor and programmable logic. What’s happened since then is that we’ve adopted functionality that someone else developed: IP blocks from FPGA vendors and their value-added resellers, and software libraries of all sorts. It’s made us more productive but it’s also made our solutions larger. The issue is that your application may not need all of the included functionality, and you likely don’t know about all the functionality that is included (e.g., built-in test suites the supplier has embedded in the IC you are using). It’s become simpler to bundle up canned functionality and include it in the end product. It doesn’t pay to figure out a tighter solution because a tighter solution takes longer to implement.

I mentioned in my last post Löwy’s comment that an elephant and a mouse basically share the same architecture. This reminds me that my DNA carries most of the genes of a banana (90%) and a fruit fly (95%). Even though I carry these formulas with me, I’m oblivious to them. I think this is happening to embedded systems. We’re just carrying along the extra baggage of readily available functionality. We are architecting our embedded systems with frameworks that look like they came from IT departments (e.g., Microchip’s Harmony, Texas Instruments’ xDAIS, NXP’s MCUXpresso, and more).

I recently read an article by Kevin Morris in EE Journal saying that:

“Neither Xilinx nor Altera/Intel, despite hovering around 80% combined FPGA market share for the last couple of decades, has shipped the most FPGA devices. That distinction goes to Lattice Semiconductor, and not by a small margin. The reason, of course, is that, in recent years, Lattice has focused on the mid-range and low-end segment of the market, while the better-known programmable logic companies have struggled for supremacy in the largest, most expensive FPGAs, FPGA-SoCs, and similar components.

This strategic emphasis on lower-cost, higher-volume sockets has helped Lattice deliver billions of FPGAs into a wide range of systems across numerous market segments. And, as the capabilities of FPGA technology have increased, Lattice has drafted behind the big two, bringing technology that was considered high-end just a few years ago into much more cost-constrained applications, and then they have taken the technology in new directions with pre-engineered solutions that significantly lower the bar for engineering teams without vast FPGA expertise to take advantage of the technology.”

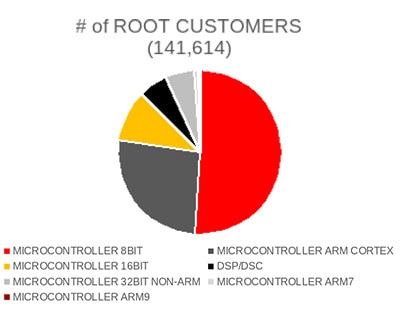

Key “microcontroller” into DigiKey’s search box and you will see they list over 87,000 unique devices. Key in “FPGA” and you will find they list over 25,000 devices. Now look at Figure 1. It shows the kinds of microcontrollers bought by customers. Notice that the greatest opportunity is serving the lower-end 8-bit market. In this day of ARM and RISC-V, you might find this surprising, but it matches the article’s point about Lattice.

Figure 1: Popularity of types of microcontrollers


Our challenge, as embedded engineers, is to resist the temptation to inflate our functionality. Actel, now part of Microsemi, which is itself a division of Microchip, had a tool called a gate gobbler. For all I know it still exists. This tool would eliminate superfluous logic. We might need to resurrect such tools to allow our users to cleave away unneeded functionality.
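One common way to identify superfluous logic is to keep only what can influence an output and treat everything else as removable. The C sketch below illustrates that general idea on a toy netlist. It is an assumption about how such pruning can work, not a description of how Actel’s tool was implemented, and the `gate` structure and the `find_superfluous` function are hypothetical names made up for this example.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define MAX_INPUTS 2
#define NO_GATE (-1)

/* Toy netlist node: each gate records which gates drive its inputs.
 * NO_GATE marks an unused input or a primary input. */
typedef struct {
    int inputs[MAX_INPUTS];
} gate;

/* Mark gate g, and every gate that feeds it, as needed.
 * The needed[] check also stops the walk on feedback loops. */
static void mark_needed(const gate *gates, int g, bool *needed)
{
    if (g == NO_GATE || needed[g]) {
        return;
    }
    needed[g] = true;
    for (int i = 0; i < MAX_INPUTS; i++) {
        mark_needed(gates, gates[g].inputs[i], needed);
    }
}

/* Fill needed[] by walking backward from the outputs and return
 * how many gates cannot influence any output. */
size_t find_superfluous(const gate *gates, size_t n_gates,
                        const int *outputs, size_t n_outputs,
                        bool *needed)
{
    assert(gates != NULL && outputs != NULL && needed != NULL);
    for (size_t i = 0; i < n_gates; i++) {
        needed[i] = false;
    }
    for (size_t i = 0; i < n_outputs; i++) {
        mark_needed(gates, outputs[i], needed);
    }
    size_t superfluous = 0;
    for (size_t i = 0; i < n_gates; i++) {
        if (!needed[i]) {
            superfluous++;
        }
    }
    return superfluous;
}
```

The same backward walk applies to software: start from the entry points the product actually uses and anything never reached is a candidate for removal.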

My Embedded World presentation will provide an example of system decomposition using Parnas’ approach, and I will argue that the market for embedded engineers is broad and growing. I will explain how reducing the complexity of using someone else’s functionality benefits novices and experts alike. I will argue this opportunity makes for a fantastic future. I will also explain what the programmable logic companies have done to hurt their markets and what needs to be done to correct things.

It’s important for companies to make their products usable by the largest audiences. Early in my career, one of the US automotive companies had a massive layoff. I remember reading an article that explained that, among that many people laid off, probability says they laid off at least some Edisons, Fords, and a da Vinci or two. It’s in large, unskilled markets where the next da Vinci will start his or her career. We need those people to successfully leverage our expertise.

Have questions or comments? Continue the conversation on TechForum, DigiKey's online community and technical resource.
