How to Optimize Intra Logistics to Streamline and Speed Industry 4.0 Supply Chains – Part Two of Two

Contributed By DigiKey's North American Editors

2023-09-22

Part 1 of this series on intra logistics discussed how autonomous mobile robots (AMRs) and automated guided vehicles (AGVs) are used at a system level to implement intra logistics and move materials quickly and safely as needed. This article focuses on use cases, on how AMRs and AGVs employ sensors to identify and track items, and on how machine learning (ML) and artificial intelligence (AI) support the identification, movement, and delivery of materials throughout warehouse and production facilities.

Intra logistics (internal logistics) uses autonomous mobile robots (AMRs) and automated guided vehicles (AGVs) to efficiently move materials around Industry 4.0 warehouses and production facilities. To streamline and speed supply chains, intra logistics systems need to know the current location of material, the intended destination of material, and the safest, most efficient path for the material to reach the destination. This streamlined navigation requires a diversity of sensors.

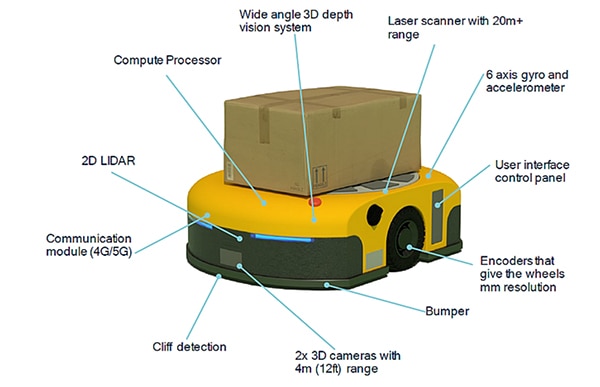

In intra logistics solutions, AGVs and AMRs use sensors to increase their situational awareness. Arrays of sensors provide safety for nearby personnel, protection of other equipment, and efficient navigation and localization. Depending on application requirements, sensor technologies for AMRs can include contact sensors like limit switches built into bumpers, 2D and 3D light detection and ranging (LiDAR), ultrasonics, 2D and stereo cameras, radar, encoders, inertial measurement units (IMUs), and photocells. For AGVs, sensors can include magnetic, inductive, or optical line sensors, as well as limit switches built into bumpers, 2D LiDAR, and encoders.

The first article of this series covers issues related to how AMRs and AGVs are used at a system level for implementing intra logistics and efficiently moving materials as needed.

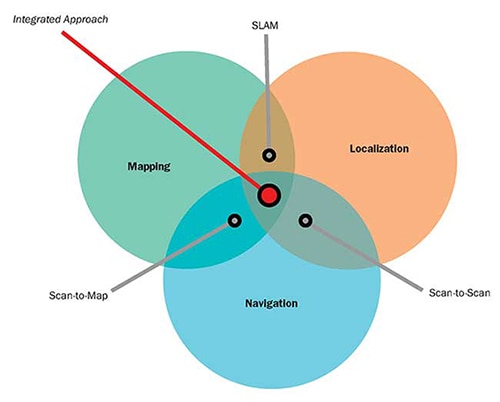

This article is focused on sensor fusion and how AMRs and AGVs employ combinations of sensors plus AI and ML for localization, navigation, and operational safety. It begins with a brief review of common sensors found in AGVs, examines robot pose and simultaneous localization and mapping (SLAM) algorithms using sensor fusion, considers how SLAM estimates can be improved with scan-to-map matching and scan-to-scan matching techniques, and closes with a look at how sensor fusion contributes to safe operation for AMRs and AGVs. DigiKey supports designers with a wide range of sensors and switches for robotics and other industrial applications in all these cases.

A range of sensors and sensor fusion, AI, ML, and wireless connectivity are needed to support autonomous operation and safety in AMRs. While the performance demands for AGVs are lower, they still rely on multiple sensors to support safe and efficient operation. There are two overarching categories of sensors:

- Proprioceptive sensors measure values internal to the robot like wheel speed, loading, battery charge, and so on.

- Exteroceptive sensors provide information about the robot's environment like distance measurements, landmark locations, and obstacle identification such as people entering the robot's path.

Sensor fusion in AGVs and AMRs relies on combinations of proprioceptive and exteroceptive sensors. Examples of sensors in AMRs include (Figure 1):

- Laser scanner for object detection with 20+ meter (m) range

- IMU with a 6-axis gyroscope and accelerometer, and sometimes including a magnetometer

- Encoders with millimeter (mm) resolution on the wheels

- Contact sensor like a microswitch in the bumper to immediately stop motion if an unexpected object is contacted

- Two forward-looking 3D cameras with a 4 m range

- Downward-looking sensor to detect the edge of a platform (called cliff detection)

- Communications modules that provide connectivity and can optionally offer Bluetooth angle of arrival (AoA) and angle of departure (AoD) sensing for real-time location services (RTLS), or use 5G transmission points/reception points (TRP) to plot a grid with centimeter-level accuracy

- 2D LiDAR to calculate the proximity of obstacles ahead of the vehicle

- Wide angle 3D depth vision system suitable for object identification and localization

- High-performance compute processor on board for sensor fusion, AI, and ML

Figure 1: Exemplary AMR showing the diversity and positions of the embedded sensors. (Image Source: Qualcomm)

Robot pose and sensor fusion

AMR navigation is a complex process. One of the first steps is for the AMR to know where it is and which direction it is facing. That combination of data is called the robot's pose. The concept of pose can also be applied to the arms and end effectors of multi-axis stationary robots. Sensor fusion combines inputs from the IMU, encoders, and other sensors to determine the pose. The pose algorithm estimates the (x, y) position of the robot and its orientation angle θ with respect to the coordinate axes. The vector q = (x, y, θ) defines the robot's pose. For AMRs, pose information has a variety of uses, including:

- The pose of an intruder, like a person entering close to the robot, relative to an external reference frame or relative to the robot

- The estimated pose of the robot after moving at a given velocity for a predetermined time

- Calculating the velocity profile needed for the robot to move from its current pose to a second pose
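The second use in the list above, estimating the pose after moving at a given velocity for a set time, can be illustrated with a short sketch. It assumes a simple planar (unicycle) motion model with constant linear and angular velocity; the `Pose` class and `predict_pose` function are hypothetical names chosen for this example and are not part of any particular robot framework.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Planar robot pose q = (x, y, theta)."""
    x: float      # meters
    y: float      # meters
    theta: float  # radians, measured from the x-axis


def wrap_angle(angle: float) -> float:
    """Wrap an angle to the range [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def predict_pose(pose: Pose, v: float, omega: float, dt: float) -> Pose:
    """Estimate the pose after driving for dt seconds.

    v is the linear velocity (m/s) and omega the angular velocity (rad/s);
    both are assumed constant over dt (an idealized model with no wheel slip).
    """
    if abs(omega) < 1e-9:
        # Straight-line motion along the current heading.
        return Pose(
            pose.x + v * dt * math.cos(pose.theta),
            pose.y + v * dt * math.sin(pose.theta),
            pose.theta,
        )
    # Motion along a circular arc of radius v / omega.
    radius = v / omega
    new_theta = pose.theta + omega * dt
    return Pose(
        pose.x + radius * (math.sin(new_theta) - math.sin(pose.theta)),
        pose.y - radius * (math.cos(new_theta) - math.cos(pose.theta)),
        wrap_angle(new_theta),
    )
```

In practice, a prediction like this drifts as wheel slip and encoder errors build up, which is why it is normally combined with other sensor inputs rather than used alone.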

Pose is a predefined function in several robot software development environments. For example, the robot_pose_ekf package is included in the Robot Operating System (ROS), an open-source development platform. Robot_pose_ekf can be used to estimate the 3D pose of a robot based on (partial) pose measurements from various sensors. It uses an extended Kalman filter with a 6D model (3D position and 3D orientation) to combine measurements from the encoder for wheel odometry, a camera for visual odometry, and the IMU. Since the various sensors operate with different rates and latencies, robot_pose_ekf does not require all sensor data to be continuously or simultaneously available. Each sensor is used to provide a pose estimate with a covariance. Robot_pose_ekf identifies the available sensor information at any point in time and adjusts accordingly.
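The idea of combining estimates that each carry a covariance, while tolerating sensors that have no fresh data, can be sketched for a single coordinate. The example below is not the robot_pose_ekf implementation or its API; it is a simplified inverse-variance weighting of independent scalar estimates, and the function name `fuse_estimates` is hypothetical. It suits a linear quantity such as the x position; a heading angle would also need wraparound handling.

```python
from typing import List, Optional, Tuple

Estimate = Tuple[float, float]  # (value, variance)


def fuse_estimates(estimates: List[Optional[Estimate]]) -> Estimate:
    """Fuse independent estimates of one quantity by inverse-variance weighting.

    Each entry is (value, variance), or None when that sensor has no fresh
    reading. Sensors with smaller variance get proportionally more weight.
    """
    available = [e for e in estimates if e is not None]
    if not available:
        raise ValueError("no sensor data available")
    weights = [1.0 / variance for _, variance in available]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, available)) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Hypothetical readings of the x position in meters:
wheel_odometry = (10.0, 1.0)   # less precise
visual_odometry = (12.0, 4.0)  # even less precise
imu_derived = None             # no fresh data this cycle

x, variance = fuse_estimates([wheel_odometry, visual_odometry, imu_derived])
```

The fused variance is never larger than the smallest input variance, which reflects why adding a sensor, even a noisy one, can tighten the estimate.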

Sensor fusion and SLAM

Many environments where AMRs operate include variable obstacles that can move from time to time. Although a basic map of the facility is useful, more is needed. When moving around an industrial facility, AMRs need more than pose information; they also employ SLAM to ensure efficient operation. SLAM adds real-time environment mapping to support navigation. Two basic approaches to SLAM are:

- Visual SLAM that pairs a camera with an IMU

- LiDAR SLAM that combines a laser sensor like 2D or 3D LiDAR with an IMU

LiDAR SLAM can be more accurate than visual SLAM, but it is generally more expensive to implement. Alternatively, 5G can be used to provide localization information to enhance visual SLAM estimates. The use of private 5G networks in warehouses and factories can augment embedded sensors for SLAM. Some AMRs implement indoor precise positioning using 5G transmission points/reception points (TRP) to plot a grid for centimeter-level accuracy on x-, y-, and z-axes.

Successful navigation relies on an AMR's ability to adapt to changing environmental elements. Navigation combines visual SLAM and/or LiDAR SLAM, overlay technologies like 5G TRP, and ML to detect changes in the environment and provide constant location updates. Sensor fusion supports SLAM in several ways:

- Continuous updates of the spatial and semantic model of the environment based on inputs from various sensors using AI and ML

- Identification of obstacles, thus enabling path planning algorithms to make the needed adjustments and find the most efficient path through the environment

- Implementation of the path plan, requiring real-time control to alter the planned path, including the speed and direction of the AMR, as the environment changes
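A rough illustration of how obstacle identification feeds path planning: the sketch below assumes the environment model has been reduced to a small occupancy grid (0 = free, 1 = obstacle) and uses a breadth-first search to find a shortest route in grid steps. The grid, the `shortest_path` function, and the choice of breadth-first search are assumptions made for this example; production planners typically use richer maps and cost functions.

```python
from collections import deque
from typing import List, Optional, Tuple

Cell = Tuple[int, int]  # (row, column)


def shortest_path(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Return a shortest 4-connected path from start to goal, or None if blocked."""
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the parents to rebuild the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in parents:
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


# Plan on an empty 3 x 3 grid, then replan after a new obstacle is detected.
grid = [[0, 0, 0],
        [0, 0, 0],
        [0, 0, 0]]
direct = shortest_path(grid, (0, 0), (0, 2))
grid[0][1] = 1  # sensors report an obstacle on the planned route
detour = shortest_path(grid, (0, 0), (0, 2))
```

Replanning on every map update, as done here, is the simplest way to react to a changing environment; it trades compute time for simplicity.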

When SLAM is not enough

SLAM is a vital tool for efficient AMR navigation, but SLAM alone is insufficient. Like pose algorithms, SLAM is implemented with an extended Kalman filter that provides estimated values. SLAM estimates extend the pose data, adding linear and rotational velocities and linear accelerations, among others. SLAM estimation is a two-step process. The first step compiles predictions from internal sensor data based on the physical laws of motion. The second step uses external sensor readings to refine the initial estimates. This two-step process helps correct small errors that would otherwise accumulate over time into significant errors.
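The two-step estimate can be sketched in one dimension. The example below is a linear Kalman filter tracking position along a corridor, which is a deliberate simplification: an extended Kalman filter applies the same predict and update steps to a nonlinear, multi-dimensional model by linearizing it. The function names and the noise values are hypothetical.

```python
from typing import Tuple


def predict(x: float, p: float, velocity: float, dt: float,
            process_var: float) -> Tuple[float, float]:
    """Step 1: project the state forward using the motion model.

    x is the position estimate, p its variance. The velocity would come from
    internal sensors such as wheel encoders. Uncertainty grows by process_var.
    """
    return x + velocity * dt, p + process_var


def update(x: float, p: float, z: float, meas_var: float) -> Tuple[float, float]:
    """Step 2: correct the prediction with an external measurement z.

    z could be a position derived from a LiDAR range to a known wall.
    The gain k balances trust between the prediction and the measurement.
    """
    k = p / (p + meas_var)
    return x + k * (z - x), (1.0 - k) * p


# One filter cycle with illustrative numbers:
x, p = 0.0, 1.0
x, p = predict(x, p, velocity=1.0, dt=1.0, process_var=0.5)  # encoders say 1 m/s
x, p = update(x, p, z=2.0, meas_var=1.5)                     # external sensor says 2.0 m
```

The prediction step always increases the variance and the update step always decreases it, which is how the external reading keeps the accumulated error bounded.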

SLAM depends on the availability of sensor inputs. In some instances, relatively low-cost 2D LiDAR may not work, such as if there are no objects in the direct line of sight of the sensor. In those instances, 3D stereo cameras or 3D LiDAR can improve system performance. However, 3D stereo cameras or 3D LiDAR are more expensive and require more compute power for implementation.

Another alternative is to use a navigation system that integrates SLAM with scan-to-map matching and scan-to-scan matching techniques that can be implemented using only 2D LiDAR sensors (Figure 2):

- Scan-to-map matching uses LiDAR range data to estimate the AMR's position by matching the range measurements to a stored map. The efficacy of this method relies on the accuracy of the map. It does not experience drift over time, but in repetitive environments, it can result in errors that are difficult to identify, cause discontinuous changes in perceived position, and be challenging to eliminate.

- Scan-to-scan matching uses sequential LiDAR range data to estimate the position of an AMR between scans. This method provides updated location and pose information for the AMR independent of any existing map and can be useful during map creation. However, it's an incremental algorithm that can be subject to drift over time with no ability to identify the inaccuracies the drift introduces.

Figure 2: Scan-to-map and scan-to-scan matching algorithms can be used to complement and improve the performance of SLAM systems. (Image source: Aethon)
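As a minimal sketch of the geometry behind scan-to-scan matching, the code below estimates the rigid 2D transform (rotation plus translation) that maps points from one scan onto the same points in the next scan. It assumes the point correspondences are already known, which is a significant simplification: practical scan matchers, such as iterative closest point methods, must also work out which points correspond. The function name `align_scans` is hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def align_scans(prev_pts: List[Point], curr_pts: List[Point]) -> Tuple[float, float, float]:
    """Least-squares rigid transform mapping prev_pts onto curr_pts.

    Returns (theta, tx, ty) such that curr is approximately R(theta) * prev + t.
    Assumes prev_pts[i] and curr_pts[i] are the same physical point.
    """
    n = len(prev_pts)
    if n < 2 or n != len(curr_pts):
        raise ValueError("need at least two matched point pairs")
    # Centroids of both scans.
    pcx = sum(p[0] for p in prev_pts) / n
    pcy = sum(p[1] for p in prev_pts) / n
    qcx = sum(q[0] for q in curr_pts) / n
    qcy = sum(q[1] for q in curr_pts) / n
    # Accumulate dot and cross products of the centered point pairs.
    s_dot = 0.0
    s_cross = 0.0
    for (px, py), (qx, qy) in zip(prev_pts, curr_pts):
        ax, ay = px - pcx, py - pcy
        bx, by = qx - qcx, qy - qcy
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    theta = math.atan2(s_cross, s_dot)
    # Translation moves the rotated previous centroid onto the current one.
    c, s = math.cos(theta), math.sin(theta)
    tx = qcx - (c * pcx - s * pcy)
    ty = qcy - (s * pcx + c * pcy)
    return theta, tx, ty
```

Chaining these scan-to-scan transforms gives an incremental position estimate, and any small error in each step carries forward into the next, which is the source of the drift described above.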

Safety needs sensor fusion

Safety is a key concern for AGVs and AMRs, and several standards must be considered. Examples include American National Standards Institute / Industrial Truck Standards Development Foundation (ANSI/ITSDF) B56.5-2019, Safety Standard for Driverless, Automatic Guided Industrial Vehicles and Automated Functions of Manned Industrial Vehicles; ANSI / Robotic Industries Association (RIA) R15.08-1-2020, Standard for Industrial Mobile Robots – Safety Requirements; several International Organization for Standardization (ISO) standards; and others.

Safe operation of AGVs and AMRs requires sensor fusion that combines safety-certified 2D LiDAR sensors (sometimes called safety laser scanners) with encoders on the wheels. The 2D LiDAR simultaneously supports two detection distances, can have a 270° sensing angle, and coordinates with the vehicle speed reported by the encoders. When an object is detected in the farther detection zone (up to 20 m away, depending on the sensor), the vehicle can be slowed as needed. If the object enters the closer detection zone in the line of travel, the vehicle stops moving.
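The interaction between the two detection zones and the encoder-reported speed can be sketched as simple decision logic. This is an illustration only, not a certified safety function: the zone sizes, reaction time, deceleration, and all names below are hypothetical values chosen for the example, and real limits come from the scanner configuration and the applicable standards.

```python
from enum import Enum
from typing import Optional


class Action(Enum):
    PROCEED = "proceed"
    SLOW = "slow"
    STOP = "stop"


def stop_zone_m(speed_m_s: float, reaction_time_s: float = 0.2,
                decel_m_s2: float = 1.0, margin_m: float = 0.3) -> float:
    """Size of the closer (stop) zone, growing with vehicle speed.

    Distance traveled during the reaction time, plus braking distance,
    plus a fixed margin. All default values are illustrative assumptions.
    """
    return margin_m + speed_m_s * reaction_time_s + speed_m_s ** 2 / (2.0 * decel_m_s2)


def safety_action(obstacle_distance_m: Optional[float], speed_m_s: float,
                  warning_zone_m: float = 5.0) -> Action:
    """Choose an action from the nearest obstacle distance and encoder speed.

    obstacle_distance_m is None when the scanner detects nothing in the
    line of travel.
    """
    if obstacle_distance_m is None:
        return Action.PROCEED
    if obstacle_distance_m <= stop_zone_m(speed_m_s):
        return Action.STOP
    if obstacle_distance_m <= warning_zone_m:
        return Action.SLOW
    return Action.PROCEED
```

Scaling the stop zone with speed is the reason the scanner needs the encoder input: a faster vehicle needs more distance to come to rest.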

Safety laser scanners are often used in sets of four, with one placed on each corner of the vehicle. They can operate as a single unit and communicate directly with the safety controller on the vehicle. Scanners are available that are certified for use in Safety Category 3, Performance Level d (PLd), and Safety Integrity Level 2 (SIL2) applications and are housed in an IP65 enclosure suitable for most outdoor as well as indoor applications (Figure 3). The scanners include an input for incremental encoder information from the wheels to support sensor fusion.

Figure 3: 2D LiDAR sensors like this can be combined with encoders on the wheels in a sensor fusion system that provides safe operation of AMRs and AGVs. (Image source: Idec)

Conclusion

Intra logistics supports faster and more efficient supply chains in Industry 4.0 warehouses and factories. AMRs and AGVs are important tools for intra logistics to move material from place to place in a timely and safe manner. Sensor fusion is necessary to support AMR and AGV functions including determining pose, calculating SLAM data, improving navigational performance using scan-to-map matching and scan-to-scan matching, and ensuring safety for personnel and objects throughout the facility.

Disclaimer: The opinions, beliefs, and viewpoints expressed by the various authors and/or forum participants on this website do not necessarily reflect the opinions, beliefs, and viewpoints of DigiKey or official policies of DigiKey.