Accelerate Vision Recognition System Design with the Renesas RZ/V2 Series MPUs

Contributed By DigiKey's North American Editors

2022-09-29

As vision recognition at the edge is becoming an increasingly critical feature in many products, machine learning (ML) and artificial intelligence (AI) are finding their way into a wide range of application spaces. The problem developers face is that ML/AI-enabled vision may require more computing power to run the recognition algorithms than may be available in power-constrained applications. It also adds cost if expensive thermal management solutions are required.

The goal for ML/AI at the edge is to find the optimal architectural approach that will balance performance and power, while also providing a robust software ecosystem within which to develop the application.

With these conditions in mind, this article introduces a solution in the form of the Renesas Electronics RZ/V2 Series microprocessor unit (MPU) with its built-in AI hardware accelerator. The article explores how an MPU, rather than a microcontroller (MCU) or high-end graphics processing unit (GPU), can solve several problems designers face. A description of how they can start designing vision recognition systems using the RZ/V2 series is included, along with some “Tips and Tricks” to smooth the process.

Introduction to the RZ/V2 series MPUs

The RZ/V2 series gives developers a three-core microprocessor. The RZ/V2L series microprocessors contain two Arm Cortex-A55 cores running at 1.2 gigahertz (GHz), and a real-time microcontroller core (Arm Cortex-M33) running at 200 megahertz (MHz). In addition, the parts in the series contain a GPU based on the Arm Mali-G31, and the Cortex-A55 cores support NEON single instruction/multiple data (SIMD) instructions. Combining these three processing cores and the GPU provides a well-rounded solution for developers working on vision recognition systems.

There are currently two MPU classes in the RZ/V2 series, the RZ/V2L and the RZ/V2M. The RZ/V2L has a simple image signal processor (ISP), a 3D graphics engine, and a highly versatile peripheral set. The RZ/V2M, for its part, adds a high-performance ISP that supports 4K resolution at 30 frames per second (fps). This article focuses on the RZ/V2L family, consisting of the R9A07G054L23GBG and the R9A07G054L24GBG. The main difference between the two parts is that the R9A07G054L23GBG comes in a 15 mm × 15 mm, 456-LFBGA package, while the R9A07G054L24GBG comes in a 21 mm × 21 mm, 551-LFBGA package.

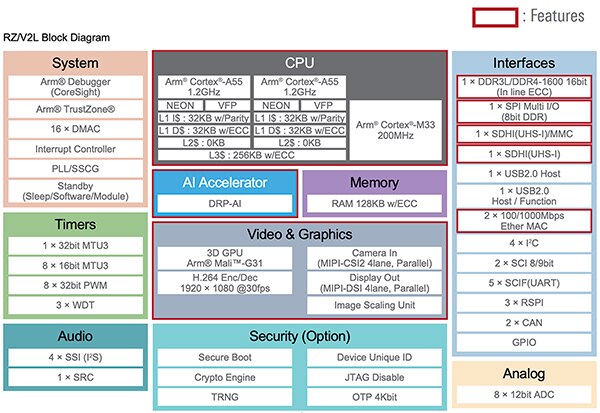

The block diagram for the RZ/V2L series is shown in Figure 1. In addition to the three processing cores, the MPUs include interfaces for standard peripherals like DDR3/DDR4 memory, SPI, USB, Ethernet, I²C, CAN, SCI, GPIO, and an analog-to-digital converter (ADC). Furthermore, the parts include security capabilities such as secure boot, a crypto engine, and a true random number generator (TRNG). What sets the MPU series apart, though, is the Dynamically Reconfigurable Processor (DRP) AI accelerator.

Figure 1: The RZ/V2L MPU series supports various peripheral interfaces, security, and video processing options. The critical feature for vision recognition applications is the DRP-AI accelerator. (Image source: Renesas Electronics Corporation)


The DRP-AI accelerator secret sauce

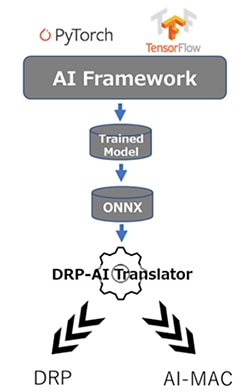

The DRP-AI accelerator is what allows the RZ/V2L series MPUs to execute vision recognition applications quickly, with less energy consumption and a lower thermal profile. The DRP-AI comprises two components: a DRP and an AI-multiply-and-accumulate (AI-MAC) unit. Together they can efficiently process operations in convolutional and fully connected ("all-combining") layers by optimizing data flow with internal switches (Figure 2).

The DRP-AI hardware is dedicated to AI inference execution. The DRP-AI uses a unique dynamically reconfigurable technology developed by Renesas that provides flexibility, high-speed processing, and power efficiency. In addition, the DRP-AI Translator, a free software tool, lets users quickly implement AI models that are optimized for maximum performance. Multiple executables output by the DRP-AI Translator can be placed in external memory. The application can then dynamically switch between multiple AI models during runtime.
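The runtime model switching described above can be pictured with a small sketch. The `ModelSlot` and `ModelSwitcher` names, the file paths, and the `fake_load` function are hypothetical and are not part of the Renesas DRP-AI driver; the sketch only illustrates the idea of loading several translated models once and then selecting one by name.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class ModelSlot:
    """One translated model kept resident in (simulated) external memory."""
    name: str
    path: str       # hypothetical location of the translator output
    handle: object  # whatever the loader returns for this model


class ModelSwitcher:
    """Keeps several models loaded and selects the active one by name."""

    def __init__(self, load: Callable[[str], object]):
        self._load = load
        self._slots: Dict[str, ModelSlot] = {}
        self.active: Optional[str] = None

    def register(self, name: str, path: str) -> None:
        # Load once up front so that switching later is only a lookup.
        self._slots[name] = ModelSlot(name, path, self._load(path))

    def switch(self, name: str) -> object:
        if name not in self._slots:
            raise KeyError(f"model {name!r} is not registered")
        self.active = name
        return self._slots[name].handle


def fake_load(path: str) -> str:
    # Stand-in for a real loader (assumption: a real system would hand the
    # model files to the vendor-supplied driver here).
    return f"loaded:{path}"


switcher = ModelSwitcher(fake_load)
switcher.register("fruit", "models/fruit")
switcher.register("barcode", "models/barcode")
handle = switcher.switch("fruit")
```

Loading every model at registration time trades memory for switching speed, which mirrors the article's point that the executables sit in external memory and are selected at runtime.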

The DRP can quickly process complex activities such as image preprocessing and AI model pooling layers by dynamically changing the hardware configuration.

Figure 2: The DRP-AI comprises a DRP and an AI-MAC, which together can efficiently process operations in convolutional and fully connected ("all-combining") layers by optimizing data flow with internal switches. (Image source: Renesas Electronics Corporation)


The DRP-AI Translator

The DRP-AI Translator tool generates DRP-AI optimized executables from trained ONNX models, independent of any AI framework. For example, a developer could use PyTorch, TensorFlow, or any other AI modeling framework, as long as it can output an ONNX model. Once the model is trained, it is fed into the DRP-AI Translator, which generates the DRP and AI-MAC executables (Figure 3).

Figure 3: AI models are trained using any ONNX compatible framework. The ONNX model is then fed into the DRP-AI Translator, which generates the DRP and AI-MAC executables. (Image source: Renesas Electronics Corporation)


The DRP-AI Translator has three primary purposes:

- Scheduling of each operation to process the AI model.

- Hiding overhead such as memory access time that occurs during each operation's transition in the schedule.

- Optimizing the network graph structure.

The Translator automatically allocates each process of the AI model to the AI-MAC and DRP, allowing the user to use the DRP-AI without being a hardware expert. Instead, the developer can make calls through the supplied driver to run the high-performance AI model. In addition, the DRP-AI Translator can be updated continuously to support newly developed AI models without hardware changes.

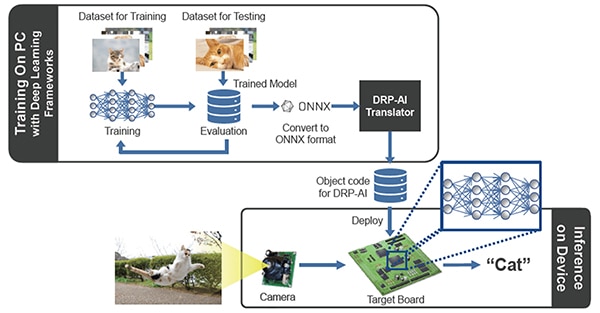

System use cases and processes

The general process flow for using the RZ/V2L MPUs to train and deploy vision recognition applications is shown in Figure 4. As usual, engineers can acquire their dataset and use it to train their vision recognition model. Whether they are trying to identify cats, a product in a shopping cart, or parts failing on an assembly line, the training process will occur using familiar AI frameworks. Once the model is trained, it is converted to ONNX format and fed into the DRP-AI Translator, which in turn outputs object code that can be executed on the DRP-AI hardware. Data from cameras, accelerometers, or other sensors is then sampled and fed into the executables, providing the result of running the inference.

Figure 4: The process of training and running a vision recognition algorithm on the RZ/V2L MPUs. (Image source: Renesas Electronics Corporation)


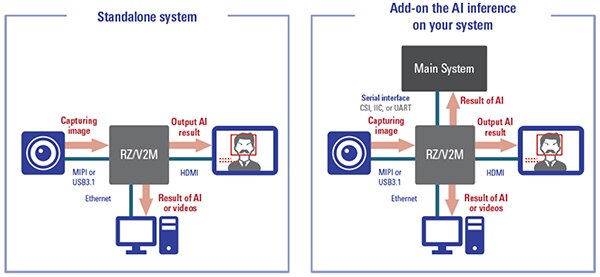

There are several ways that engineers can leverage the RZ/V2L MPUs in their designs (Figure 5). First, the RZ/V2L MPU can be used in standalone designs where the RZ/V2L is the only processor in the system. With its three cores and AI acceleration hardware, additional computing power may not be needed.

The second use case is where the RZ/V2L is used as an AI processor in a more extensive system. In this use case, the RZ/V2L runs the AI inferences and returns a result to another processor or system that then acts on that result. The use case selected will depend on various factors such as cost, overall system architecture, performance, and real-time response requirements.
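For the second use case, the AI processor needs some agreed format for handing its result to the host. The following is a minimal sketch of one possible format, assuming newline-delimited JSON over whatever link connects the two devices. The `InferenceResult` fields and the function names are illustrative assumptions, not a Renesas-defined protocol.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class InferenceResult:
    """Hypothetical result record passed from the AI processor to the host."""
    label: str
    confidence: float  # 0.0 to 1.0
    frame_id: int


def encode_result(result: InferenceResult) -> bytes:
    """Serialize a result as one newline-terminated JSON line."""
    return (json.dumps(asdict(result), sort_keys=True) + "\n").encode("utf-8")


def decode_result(line: bytes) -> InferenceResult:
    """Parse a line produced by encode_result back into an InferenceResult."""
    return InferenceResult(**json.loads(line.decode("utf-8")))


# Round trip: what the AI processor sends is what the host reconstructs.
sent = InferenceResult(label="apple", confidence=0.9, frame_id=7)
received = decode_result(encode_result(sent))
```

A text format like this is easy to inspect during prototyping; a production design with tight real-time requirements might prefer a compact binary layout instead.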

Figure 5: The two use cases for the RZ/V2L MPUs are to use them standalone in an application, or as an AI processor used in a more extensive system. (Image source: Renesas Electronics Corporation)


Example real-world application

There are many use cases where vision recognition technology can be deployed. One example is the supermarket. Today, when checking out at a grocery store, an employee or a shopper typically scans every item in a cart. An alternative would be to use vision recognition to detect the products moving along the conveyor and automatically charge for them.

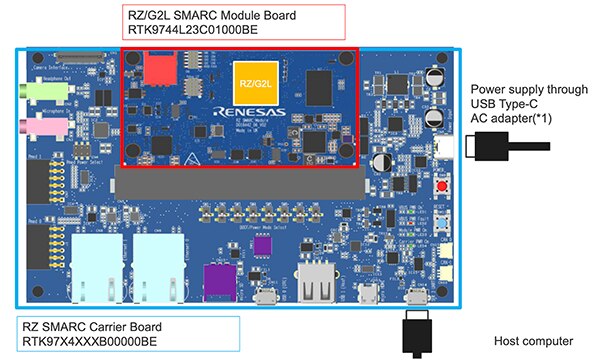

A prototype could be built using a simple CMOS camera and Renesas’s RTK9754L23S01000BE evaluation board (Figure 6). The RZ/V2L embedded development board has a system on module (SOM) and carrier board that allows developers to get up and running quickly. In addition, the development board supports Linux, along with various tools like the DRP-AI Translator.

Figure 6: The RZ/V2L embedded development board has a SOM and carrier board that allows developers to get up and running quickly. (Image source: Renesas Electronics Corporation)


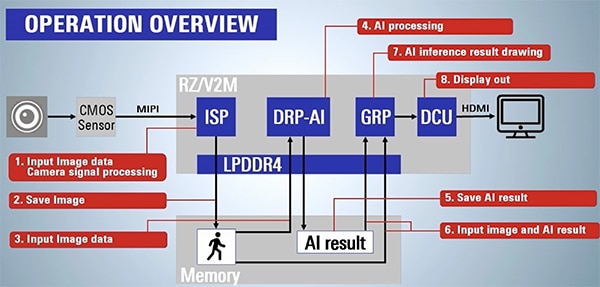

An operational overview of what is required to acquire image data and produce an AI result can be seen in Figure 7. In this application example, images of the conveyor belt are taken using a CMOS sensor through the onboard ISP. Next, the image is saved to memory and fed into the DRP-AI engine. Finally, the DRP-AI engine runs the inference and provides an AI result. For example, the result could be that a banana is found, or an apple, or some other fruit.

The result is often accompanied by a confidence level between 0 and 1. For example, a confidence of 0.90 means that the AI is confident it has detected an apple. On the other hand, a confidence of 0.52 might mean that the AI thinks it is an apple but is uncertain. It’s not uncommon to average the AI result across multiple samples to improve the chances of a correct result.
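Averaging across samples can be sketched in a few lines. This is only an illustration: the `smooth_results` name, the per-frame dictionary layout, the choice to count a label missing from a frame as 0, and the 0.6 acceptance threshold are all assumptions, not part of any Renesas API.

```python
from collections import defaultdict


def smooth_results(frames, threshold=0.6):
    """Average per-label confidence over several frames.

    frames: list of dicts mapping label -> confidence (0.0 to 1.0).
    A label missing from a frame counts as 0 for that frame.
    Returns (label, average_confidence) for the best label, or None if
    there are no frames or the best average is below the threshold.
    """
    if not frames:
        return None
    totals = defaultdict(float)
    for frame in frames:
        for label, confidence in frame.items():
            totals[label] += confidence
    averages = {label: total / len(frames) for label, total in totals.items()}
    if not averages:
        return None
    best = max(averages, key=averages.get)
    if averages[best] < threshold:
        return None  # still too uncertain to report
    return best, averages[best]


frames = [
    {"apple": 0.90, "banana": 0.05},
    {"apple": 0.52, "banana": 0.40},
    {"apple": 0.80, "banana": 0.10},
]
result = smooth_results(frames)
```

Here the single uncertain 0.52 reading is outweighed by the two stronger frames, so the averaged result still reports an apple. Raising the threshold makes the system more conservative at the cost of more "no result" outcomes.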

Figure 7: The RZ/V2L embedded development board is used to run an AI inference that recognizes various fruits on a conveyor belt. The figure demonstrates the steps necessary to acquire an image and produce an AI result. (Image source: Renesas Electronics Corporation)


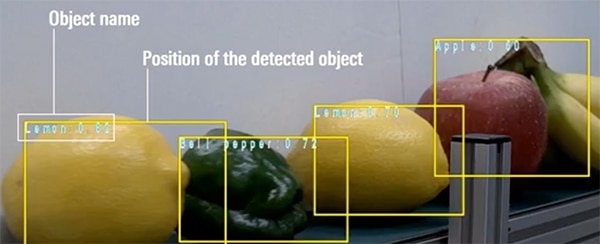

Finally, in this example, a box is drawn around the detected object, and the name of the recognized object is displayed along with the confidence level (Figure 8).

Figure 8: Example output from the RZ/V2L in an application that detects fruits and vegetables on a conveyor belt. (Image source: Renesas Electronics Corporation)


Tips and tricks for getting started with the RZ/V2L

Developers looking to get started with machine learning on the Renesas RZ/V2L MPUs will find that they have a lot of resources to leverage to get up and running. Here are several "tips and tricks" developers should keep in mind that can simplify and speed up their development:

- Start with a development board and the existing examples to get a feel for deploying and running an application.

- If it’s necessary to execute multiple inferences, save the executable models to external memory, and use the DRP-AI capabilities to switch between models quickly.

- Review the documentation and videos found on Renesas’s RZ/V Embedded AI MPUs site.

- Download the DRP-AI Translator.

- Download the RZ/V2L DRP-AI Support Package.

Developers who follow these "tips and tricks" will save quite a bit of time and grief when getting started.

Conclusion

ML and AI are finding their way into many edge applications, with the ability to recognize objects in real-time becoming increasingly important. For designers, the difficulty is finding the right architecture with which to perform AI/ML at the edge. GPUs tend to be power hungry, while MCUs may not have sufficient computing power.

As shown, the Renesas RZ/V MPU series with DRP-AI has several benefits such as hardware accelerated AI, along with a substantial toolchain and prototyping support.

Disclaimer: The opinions, beliefs, and viewpoints expressed by the various authors and/or forum participants on this website do not necessarily reflect the opinions, beliefs, and viewpoints of DigiKey or official policies of DigiKey.