How to Run a “Hello World” Machine Learning Model on STM32 Microcontrollers

Contributed By DigiKey's North American Editors

2022-08-18

Machine learning (ML) has been all the rage in server and mobile applications for years, but it has now migrated and become critical on edge devices. Given that edge devices need to be energy efficient, developers need to learn and understand how to deploy ML models to microcontroller-based systems. ML models running on a microcontroller are often referred to as tinyML. Unfortunately, deploying a model to a microcontroller is not a trivial endeavor. Still, it is getting easier, and developers without any specialized training will find that they can do so in a timely manner.

This article explores how embedded developers can get started with ML using STMicroelectronics’ STM32 microcontrollers. To do so, it shows how to create a "Hello World" application by converting a TensorFlow Lite for Microcontrollers model for use in STM32CubeIDE using X-CUBE-AI.

Introduction to tinyML use cases

TinyML is a growing field that brings the power of ML to resource- and power-constrained devices like microcontrollers, usually using deep neural networks. These microcontrollers can then run the ML model and perform valuable work at the edge. There are several use cases where tinyML is now quite interesting.

The first use case, which is seen in many mobile devices and home automation equipment, is keyword spotting. Keyword spotting allows the embedded device to use a microphone to capture speech and detect pretrained keywords. The tinyML model uses a time-series input that represents the speech and converts it to speech features, usually a spectrogram. The spectrogram contains frequency information over time. The spectrogram is then fed into a neural network trained to detect specific words, and the result is a probability that a particular word is detected. Figure 1 shows an example of what this process looks like.

Figure 1: Keyword spotting is an interesting use case for tinyML. The input speech is converted to a spectrogram and then fed into a trained neural network to determine if a pretrained word is present. (Image source: Arm®)
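To make the speech-to-spectrogram step concrete, here is a minimal host-side sketch in plain Python. It is not taken from any keyword spotting library: the function name `spectrogram`, the frame length, the hop size, and the synthetic 1 kHz test tone are all assumptions chosen for illustration, and a production front end would use an optimized FFT and typically mel-scaled features.

```python
import cmath
import math

def spectrogram(samples, frame_len=64, hop=32):
    """Return a list of frames; each frame holds one magnitude per frequency bin."""
    # A Hann window reduces spectral leakage at the frame edges.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / (frame_len - 1))
              for n in range(frame_len)]
    frames = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        frame = [samples[start + n] * window[n] for n in range(frame_len)]
        # Naive DFT, keeping only the non-negative frequency bins.
        mags = []
        for k in range(frame_len // 2 + 1):
            acc = sum(frame[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                      for n in range(frame_len))
            mags.append(abs(acc))
        frames.append(mags)
    return frames

# Synthetic stand-in for speech: a 1 kHz tone sampled at 8 kHz (illustrative values).
SAMPLE_RATE = 8000
tone = [math.sin(2 * math.pi * 1000 * n / SAMPLE_RATE) for n in range(512)]
spec = spectrogram(tone)

# The strongest bin of the first frame identifies the dominant frequency.
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
peak_hz = peak_bin * SAMPLE_RATE / 64
```

Each row of `spec` is one time slice and each column is one frequency bin, which is the two-dimensional "frequency information over time" that the neural network receives as input.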

The next use case for tinyML that many embedded developers are interested in is image recognition. The microcontroller captures images from a camera, which are then fed into a pre-trained model. The model can discern what is in the image. For example, one might be able to determine if there is a cat, a dog, a fish, and so forth. A great example of how image recognition is used at the edge is in video doorbells. The video doorbell can often detect if a human is present at the door or whether a package has been left.

One last use case with high popularity is using tinyML for predictive maintenance. Predictive maintenance uses ML to predict equipment states based on abnormality detection, classification algorithms, and predictive models. Again, plenty of applications are available, ranging from HVAC systems to factory floor equipment.

While the above three use cases are currently popular for tinyML, there are undoubtedly many potential use cases that developers can find. Here’s a quick list:

- Gesture classification

- Anomaly detection

- Analog meter reader

- Guidance, navigation, and control (GNC)

- Package detection

No matter the use case, the best way to start getting familiar with tinyML is with a “Hello World” application, which helps developers learn and understand the basic process they will follow to get a minimal system up and running. There are five necessary steps to run a tinyML model on an STM32 microcontroller:

- Capture data

- Label data

- Train the neural network

- Convert the model

- Run the model on the microcontroller

Capturing, labeling, and training a “Hello World” model

Developers generally have many options available for how they will capture and label the data needed to train their model. First, there are a lot of online training databases. Developers can search for data that someone has collected and labeled. For example, for basic image detection, there’s CIFAR-10 or ImageNet. To train a model to detect smiles in photos, there's an image collection for that too. Online data repositories are clearly a great place to start.

If the required data hasn't already been made publicly available on the Internet, then another option is for developers to generate their own data. MATLAB or some other tool can be used to generate the datasets. If automatic data generation is not an option, it can be done manually. Finally, if this all seems too time-consuming, there are some datasets available for purchase, also on the Internet. Collecting the data is often the most exciting and interesting option, but it is also the most work.

The “Hello World” example being explored here shows how to train a model to generate a sine wave and deploy it to an STM32. The example was put together by Pete Warden and Daniel Situnayake as part of their work at Google on TensorFlow Lite for Microcontrollers. This makes the job easier because they have put together a simple, public tutorial on capturing, labeling, and training the model. The tutorial is hosted on GitHub; once there, developers should click the "Run in Google Colab" button. Google Colab, short for Google Colaboratory, allows developers to write and execute Python in their browser with zero configuration and provides free access to Google GPUs.
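For a sine wave model, "capturing" and "labeling" collapse into a single step, because the label for each input can be computed. The following sketch shows the idea in plain Python. It is not the tutorial's code: the function names, the sample count, the noise level, and the 60/20/20 split are assumptions chosen for illustration.

```python
import math
import random

def make_sine_dataset(n_samples=1000, noise_std=0.1, seed=1):
    """Generate (x, y) pairs where y = sin(x) plus Gaussian noise."""
    rng = random.Random(seed)
    xs = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n_samples)]
    # The label for each x is computed rather than hand-annotated.
    ys = [math.sin(x) + rng.gauss(0.0, noise_std) for x in xs]
    return list(zip(xs, ys))

def split(data, train_frac=0.6, val_frac=0.2):
    """Split into train / validation / test partitions (hypothetical ratios)."""
    n_train = round(len(data) * train_frac)
    n_val = round(len(data) * val_frac)
    return data[:n_train], data[n_train:n_train + n_val], data[n_train + n_val:]

train, val, test = split(make_sine_dataset())
```

The added noise stands in for the imperfections of real sensor data, and the held-out validation and test partitions make it possible to check that the network has learned the underlying curve rather than memorized the noisy samples.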

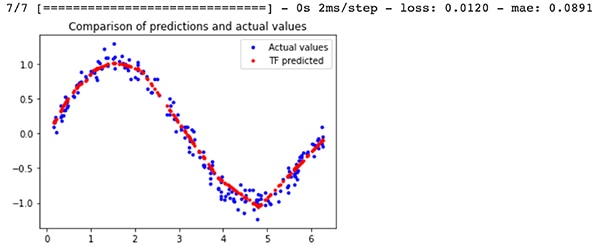

The output from walking through the training example will include two different model files: model.tflite, a TensorFlow Lite model that is quantized for microcontrollers, and model_no_quant.tflite, which is not quantized. Quantization determines how the model's weights, biases, and activations are stored numerically. The quantized version produces a smaller model that is more suited to a microcontroller. For curious readers, the trained model results versus actual sine wave results can be seen in Figure 2. The output of the model is in red. The sine wave output isn't perfect, but it works well enough for a “Hello World” program.

Figure 2: A comparison between TensorFlow model predictions for a sine wave versus the actual values. (Image source: Beningo Embedded Group)
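To illustrate what quantization does to a model's numbers, the sketch below maps floating-point values to 8-bit integers using a scale and a zero point, then maps them back. This shows the general affine scheme only; it is not the TensorFlow Lite converter's exact procedure, and the helper names and the [-1.0, 1.0] value range are assumptions made for the example.

```python
def choose_params(v_min, v_max, qmin=-128, qmax=127):
    """Pick a scale and zero point so [v_min, v_max] spans the int8 range."""
    scale = (v_max - v_min) / (qmax - qmin)
    zero_point = round(qmin - v_min / scale)
    return scale, zero_point

def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """Map real values to int8: q = round(v / scale) + zero_point, clamped."""
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]

def dequantize(q_values, scale, zero_point):
    """Recover approximate real values: v ~= scale * (q - zero_point)."""
    return [scale * (q - zero_point) for q in q_values]

scale, zero_point = choose_params(-1.0, 1.0)
weights = [-1.0, -0.5, 0.0, 0.25, 1.0]   # illustrative values, not real model weights
q = quantize(weights, scale, zero_point)
restored = dequantize(q, scale, zero_point)

# The round trip is lossy; the error is bounded by the quantization step size.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each value now occupies one byte instead of four, at the cost of a small rounding error, which is one reason the quantized model's sine wave is slightly less smooth than the floating-point one.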

Selecting a development board

Before looking at how to convert the TensorFlow model to run on a microcontroller, a microcontroller needs to be selected on which to deploy the model. This article will focus on STM32 microcontrollers because STMicroelectronics has many tinyML/ML tools that work well for converting and running models. In addition, STMicroelectronics has a wide variety of parts compatible with their ML tools (Figure 3).

Figure 3: Shown are the microcontrollers and the microprocessor unit (MPU) currently supported by the STMicroelectronics AI ecosystem. (Image source: STMicroelectronics)

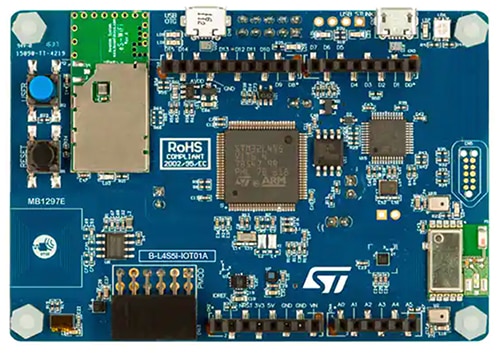

If one of these boards is lying around the office, it's perfect for getting the “Hello World” application up and running. However, for those interested in going beyond this example and getting into gesture control or keyword spotting, opt for the STM32 B-L4S5I-IOT01A Discovery IoT Node (Figure 4).

This board is built around an STM32L4+ series microcontroller with an Arm Cortex®-M4 processor. The microcontroller has 2 megabytes (Mbytes) of flash memory and 640 kilobytes (Kbytes) of RAM, providing plenty of space for tinyML models. The board is adaptable for tinyML use case experiments because it also has STMicroelectronics’ MP34DT01 microelectromechanical systems (MEMS) microphone that can be used for keyword spotting application development. In addition, the onboard LSM6DSL three-axis accelerometer and gyroscope, also from STMicroelectronics, can be used for tinyML-based gesture detection.

Figure 4: The STM32 B-L4S5I-IOT01A Discovery IoT Node is an adaptable experimentation platform for tinyML due to its onboard Arm Cortex-M4 processor, MEMS microphone, and three-axis accelerometer. (Image source: STMicroelectronics)

Converting and running the TensorFlow Lite model using STM32Cube.AI

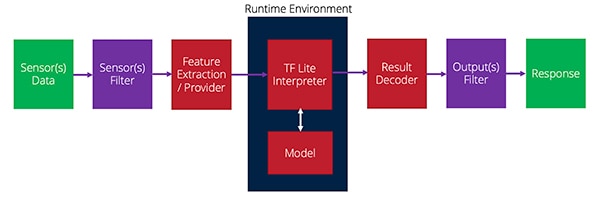

Armed with a development board that can be used to run the tinyML model, developers can now start to convert the TensorFlow Lite model into something that can run on the microcontroller. The TensorFlow Lite model can run directly on the microcontroller, but it needs a runtime environment to process it.

When the model is run, a series of functions needs to be performed. These functions start with collecting the sensor data, then filtering it, extracting the necessary features, and feeding them to the model. The model will produce a result, which can then be further filtered, and then, usually, some action is taken. Figure 5 provides an overview of what this process looks like.

Figure 5: How data flows from sensors to the runtime and then to the output in a tinyML application. (Image source: Beningo Embedded Group)
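The chain of stages described above can be sketched on a host PC before any firmware is written. The following Python example is only a structural illustration: every function name is hypothetical, the "model" is a simple placeholder rather than a neural network, and the filter widths and thresholds are arbitrary values chosen for the example.

```python
from collections import deque

def moving_average(samples, width=3):
    """Pre-filter: smooth raw sensor samples."""
    out = []
    window = deque(maxlen=width)
    for s in samples:
        window.append(s)
        out.append(sum(window) / len(window))
    return out

def extract_features(samples):
    """Feature extraction: reduce a window of samples to a small vector."""
    mean = sum(samples) / len(samples)
    peak_to_peak = max(samples) - min(samples)
    return [mean, peak_to_peak]

def stand_in_model(features):
    """Placeholder for the neural network: returns a score in [0, 1]."""
    _, peak_to_peak = features
    return min(1.0, peak_to_peak / 2.0)

def run_pipeline(windows, threshold=0.5, votes_needed=2):
    """Run each window through the chain and debounce the model output."""
    recent = deque(maxlen=3)
    actions = []
    for raw in windows:
        score = stand_in_model(extract_features(moving_average(raw)))
        recent.append(score > threshold)
        # Post-filter: act only when enough recent results agree.
        actions.append("act" if sum(recent) >= votes_needed else "idle")
    return actions

quiet = [0.0, 0.1, 0.0, 0.1, 0.0, 0.1]
active = [0.0, 1.5, 3.0, 1.5, 0.0, 1.5]
actions = run_pipeline([quiet, active, active, quiet])
```

The post-filter is worth noting: a single high score does not trigger the action, which is a common way to keep one noisy inference from causing a false activation.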

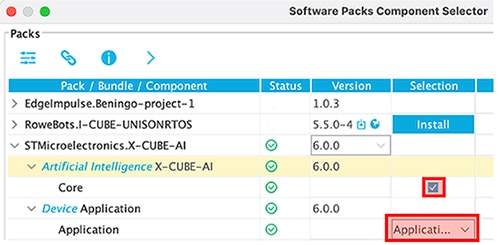

The X-CUBE-AI plug-in to STM32CubeMX provides the runtime environment to interpret the TensorFlow Lite model and offers alternative runtimes and conversion tools that developers can leverage. The X-CUBE-AI plug-in is not enabled by default in a project. However, after creating a new project and initializing the board, under Software Packs -> Select Components, there is an option to enable the AI runtime. There are several options here; make sure that the Application template is used for this example, as shown in Figure 6.

Figure 6: The X-CUBE-AI plug-in needs to be enabled using the application template for this example. (Image source: Beningo Embedded Group)

Once X-CUBE-AI is enabled, an STMicroelectronics X-CUBE-AI category will appear in the toolchain. Clicking on the category lets the developer select the model file they created and set the model parameters, as shown in Figure 7. An Analyze button is also available; it analyzes the model and reports RAM, ROM, and execution cycle information. It’s highly recommended that developers compare the Keras and TFLite model options. With the sine wave model, which is small, the difference won't be huge, but it is noticeable. The project can then be generated by clicking “Generate code”.

Figure 7: The analyze button will provide developers with RAM, ROM, and execution cycle information. (Image source: Beningo Embedded Group)
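A rough back-of-the-envelope estimate helps put the reported ROM figure in context. The sketch below counts the parameters of a small fully connected network and estimates their storage size. The layer sizes (1 -> 16 -> 16 -> 1) are an assumption about the sine wave model, not something stated in this article, and the int8 estimate assumes 8-bit weights with 32-bit biases. Real model files also carry metadata, so the tool's report is the authoritative number.

```python
def dense_counts(n_in, n_out):
    """Return (weight count, bias count) for one fully connected layer."""
    return n_in * n_out, n_out

# ASSUMPTION: layer sizes for the sine model; check the training notebook.
layer_sizes = [1, 16, 16, 1]

weights = 0
biases = 0
for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
    w, b = dense_counts(n_in, n_out)
    weights += w
    biases += b

total_params = weights + biases
float32_bytes = total_params * 4             # every parameter stored as float32
int8_est_bytes = weights * 1 + biases * 4    # int8 weights, int32 biases (assumed)
```

Under these assumptions the parameters shrink from roughly 1.3 Kbytes to well under 0.5 Kbytes, which matches the article's observation that the difference for such a small model is noticeable but not huge.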

The code generator will initialize the project and build in the runtime environment for the tinyML model. However, by default, nothing is feeding the model. Developers need to add code to provide the model input values—x values—which the model will then interpret and use to generate the sine y values. A few pieces of code need to be added to the acquire_and_process_data and post_process functions, as shown in Figure 8.

Figure 8: The code shown will connect fake input sensor values to the sine wave model. (Image source: Beningo Embedded Group)
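The generated firmware hooks are written in C, and their exact signatures depend on the X-CUBE-AI version, so they are not reproduced here. The logic they need to implement can still be modeled on a host PC. In this Python sketch, `SineInputSweep` and `stand_in_inference` are hypothetical names, and `math.sin` stands in for the network, which on the target only approximates a sine.

```python
import math

class SineInputSweep:
    """Produces successive x values in [0, 2*pi), wrapping after each cycle."""

    def __init__(self, steps_per_cycle=100):
        self.steps_per_cycle = steps_per_cycle
        self.index = 0

    def next_input(self):
        x = 2.0 * math.pi * self.index / self.steps_per_cycle
        self.index = (self.index + 1) % self.steps_per_cycle
        return x

def stand_in_inference(x):
    """Placeholder for running the generated network on one input value."""
    return math.sin(x)

sweep = SineInputSweep(steps_per_cycle=8)
outputs = []
for _ in range(10):
    x = sweep.next_input()         # role of the acquire hook: fill the input buffer
    y = stand_in_inference(x)      # role of the runtime: run the network
    outputs.append((x, y))         # role of the post-process hook: read the output
```

Changing `steps_per_cycle`, or how often the loop runs, changes how quickly the input sweeps through a full period, which is what sets the frequency of the resulting sine wave.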

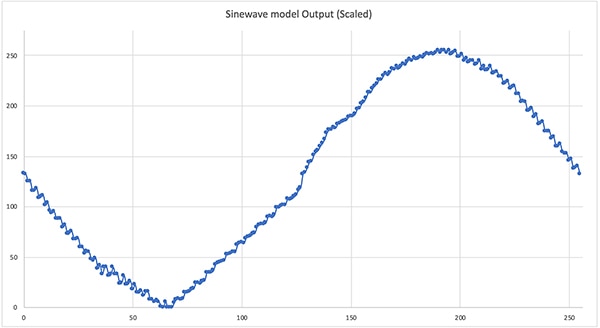

At this point, the example is now ready to run. Note: add some printf statements to get the model output for quick verification. A fast compile and deployment results in the “Hello World” tinyML model running. Pulling the model output for a full cycle results in the sine wave shown in Figure 9. It's not perfect, but it is excellent for a first tinyML application. From here, developers could tie the output to a pulse width modulator (PWM) and generate the sine wave.

Figure 9: The “Hello World” sine wave model output when running on the STM32. (Image source: Beningo Embedded Group)
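Driving a PWM from the model output requires mapping a value in the range -1 to 1 onto a timer compare value. The arithmetic is sketched below in Python. The function name and the timer period of 1000 counts are assumptions for illustration; on the target, the period comes from the timer configuration.

```python
def sine_to_pwm_compare(y, timer_period=1000):
    """Map a model output in [-1, 1] to a compare value in [0, timer_period]."""
    # Clamp first: the model is an approximation and can overshoot slightly.
    y = max(-1.0, min(1.0, y))
    return round((y + 1.0) / 2.0 * timer_period)

# A few representative model outputs, including one that overshoots.
compare_values = [sine_to_pwm_compare(y) for y in (-1.0, 0.0, 1.0, 1.2)]
```

A model output of 0 maps to a 50% duty cycle, and after low-pass filtering the PWM signal, the average voltage follows the sine shape.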

Tips and tricks for ML on embedded systems

Developers looking to get started with ML on microcontroller-based systems will have quite a bit on their plate to get their first tinyML application up and running. However, there are several "tips and tricks" to keep in mind that can simplify and speed up their development:

- Walk through the TensorFlow Lite for Microcontrollers “Hello World” example, including the Google Colab file. Take some time to adjust parameters and understand how they affect the trained model.

- Use quantized models for microcontroller applications. The quantized model stores its values as 8-bit integers rather than 32-bit floating-point numbers. As a result, the model will be smaller and execute faster.

- Explore the additional examples in the TensorFlow Lite for Microcontrollers repository. Other examples include gesture detection and keyword detection.

- Take the “Hello World” example further by connecting the model output to a PWM and a low-pass filter to see the resultant sine wave. Experiment with the runtime to increase and decrease the sine wave frequency.

- Select a development board that includes "extra" sensors that will allow for a wide range of ML applications to be tried.

- As much fun as collecting data can be, it's generally easier to purchase or use an open-source database to train the model.

Developers who follow these "tips and tricks" will save quite a bit of time and grief when developing their application.

Conclusion

ML has come to the network edge, and resource-constrained microcontroller-based systems are a prime target. The latest tools allow ML models to be converted and optimized to run on real-time systems. As shown, getting a model up and running on an STM32 development board is relatively easy, despite the complexities involved. While the discussion examined a simple model that generates a sine wave, far more complex models like gesture detection and keyword spotting are possible.

Disclaimer: The opinions, beliefs, and viewpoints expressed by the various authors and/or forum participants on this website do not necessarily reflect the opinions, beliefs, and viewpoints of DigiKey or official policies of DigiKey.