How to Rapidly Design and Deploy Smart Machine Vision Systems

Contributed By DigiKey's North American Editors

2022-08-31

The need for machine vision is growing across a range of applications, including security, traffic and city cameras, retail analytics, automated inspection, process control, and vision-guided robotics. Machine vision is complex to implement and requires the integration of diverse technologies and sub-systems, including high-performance hardware and advanced artificial intelligence/machine learning (AI/ML) software. It begins with optimizing the video capture technology and vision I/O to meet the application needs and extends to multiple image processing pipelines for efficient connectivity. It is ultimately dependent on enabling the embedded-vision system to perform vision-based analytics in real time using high-performance hardware such as field programmable gate arrays (FPGAs), systems on modules (SOMs), systems on chips (SoCs), and even multi-processor systems on chips (MPSoCs) to run the needed AI/ML image processing and recognition software. This can be a complex, costly, and time-consuming process that is exposed to numerous opportunities for cost overruns and schedule delays.

Instead of starting from scratch, designers can turn to a well-curated, high-performance development platform that speeds time to market, controls costs, and reduces development risks while supporting high degrees of application flexibility and performance. A SOM-based development platform can provide an integrated hardware and software environment, enabling developers to focus on application customization and save up to nine months of development time. In addition to the development environment, the same SOM architecture is available in production-optimized configurations for commercial and industrial environments, enhancing application reliability and quality, further reducing risks, and speeding up time to market.

This article begins by reviewing the challenges associated with the development of high-performance machine vision systems, then presents the comprehensive development environment offered by the Kria KV260 vision AI starter kit from AMD Xilinx, and closes with examples of production-ready SOMs based on the Kria K26 platform designed to be plugged into a carrier card with solution-specific peripherals.

It begins with data type optimization

The needs of deep learning algorithms are evolving. Not every application needs high-precision calculations, so lower-precision data types such as INT8, or custom data formats, are increasingly used. GPU architectures optimized for high-precision data can struggle to accommodate lower-precision formats efficiently. The Kria K26 SOM is reconfigurable, enabling it to support a wide range of data types from FP32 to INT8 and others. Reconfigurability also results in lower overall energy consumption. For example, an INT8 operation consumes an order of magnitude less energy than an FP32 operation (Figure 1).

Figure 1: An order of magnitude less energy is needed for INT8 (8b Add) operations compared with FP32 operations (32b Add). (Image source: AMD Xilinx)
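To make the FP32-to-INT8 trade-off concrete, the following is a minimal sketch of symmetric, per-tensor INT8 quantization in plain Python. It is an illustration of the general idea only, not the quantization flow used by the AMD Xilinx tools; the function names and the sample weights are hypothetical.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale.

    Assumption: symmetric, per-tensor quantization, chosen for simplicity.
    """
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the INT8 codes."""
    return [q * scale for q in quantized]


# Hypothetical FP32 weights, used only to exercise the functions.
weights = [0.82, -1.27, 0.003, 0.5, -0.64]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

# The rounding error per value is bounded by half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, scale, max_error)
```

Each value is stored in 8 bits instead of 32, at the cost of a rounding error no larger than half the scale factor. Whether that error is acceptable depends on the model and must be checked against accuracy on real data.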

Optimal architecture for minimal power consumption

Designs based on a multicore GPU or CPU architecture can be power-hungry. A typical power usage pattern is:

- 30% for the cores

- 30% for the internal memory (L1, L2, L3)

- 40% for the external memory (such as DDR)

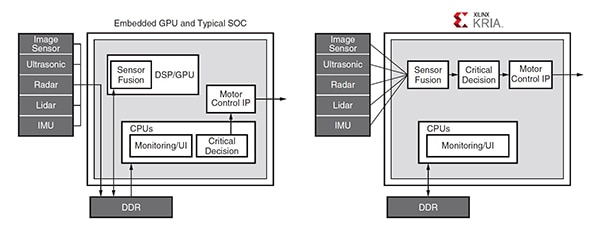

Frequent accesses to inefficient DDR memory are required by GPUs to support programmability and can be a bottleneck to high bandwidth computing demands. The Zynq MPSoC architecture used in the Kria K26 SOM supports the development of applications with little or no access to external memory. For example, in a typical automotive application, communication between the GPU and various modules requires multiple accesses to external DDR memory, while the Zynq MPSoC-based solution incorporates a pipeline designed to avoid most DDR accesses (Figure 2).

Figure 2: In this typical automotive application, the GPU requires multiple accesses to DDR for communication between the various modules (left), while the pipeline architecture of the Zynq MPSoC (right) avoids most DDR accesses. (Image source: AMD Xilinx)
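The effect of avoiding DDR accesses can be estimated with a first-order calculation based on the 30/30/40 power split listed above. The sketch below assumes that external memory power scales linearly with the number of DDR accesses and that the other two shares stay constant; both are simplifying assumptions for illustration, not measured Zynq MPSoC figures, and the function name is hypothetical.

```python
# Typical power shares for a multicore GPU or CPU design (from the list above).
POWER_SHARE = {
    "cores": 0.30,
    "internal_memory": 0.30,
    "external_memory": 0.40,
}


def relative_power(ddr_access_reduction):
    """Return total power relative to the baseline (1.0).

    ddr_access_reduction is the fraction of external memory accesses
    removed, from 0.0 (none) to 1.0 (all). Assumption: external memory
    power is proportional to the number of accesses.
    """
    if not 0.0 <= ddr_access_reduction <= 1.0:
        raise ValueError("ddr_access_reduction must be between 0.0 and 1.0")
    external = POWER_SHARE["external_memory"] * (1.0 - ddr_access_reduction)
    return POWER_SHARE["cores"] + POWER_SHARE["internal_memory"] + external


for reduction in (0.0, 0.5, 1.0):
    print(f"{reduction:.0%} fewer DDR accesses -> {relative_power(reduction):.2f}x baseline power")
```

Under these assumptions, even a pipeline that removes half of the DDR traffic trims total power by about a fifth, which is why keeping intermediate data on-chip matters.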

Pruning leverages the advantages

The performance of neural networks on the K26 SOM can be enhanced using an AI optimization tool that enables data optimization and pruning. It’s very common for neural networks to be over-parameterized, leading to high levels of redundancy that can be reduced using pruning and model compression. Using Xilinx’s AI Optimizer can result in a 50X reduction in model complexity, with a nominal impact on model accuracy. For example, a single-shot detector (SSD) plus VGG convolutional neural network (CNN) architecture with 117 giga operations (Gops) was refined over 11 iterations of pruning using the AI Optimizer. Before optimization, the model ran at 18 frames per second (FPS) on a Zynq UltraScale+ MPSoC. After 11 iterations (the 12th run of the model), the complexity was reduced from 117 Gops to 11.6 Gops (10X), performance increased from 18 to 103 FPS (5X), and accuracy dropped from 61.55 mean average precision (mAP) for object detection to 60.4 mAP (only 1% lower) (Figure 3).

Figure 3: After relatively few iterations, pruning can reduce model complexity (Gop) by 10X and improve performance (FPS) by 5X, with only a 1% reduction in accuracy (mAP). (Image source: AMD Xilinx)
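As a rough illustration of what pruning does, the sketch below zeroes out the smallest-magnitude weights in a list and then recomputes the ratios quoted for the SSD plus VGG example. Magnitude pruning is used here only because it is simple to show; the article does not state which pruning method the AI Optimizer applies, and the function name and sample weights are hypothetical.

```python
def prune_by_magnitude(weights, sparsity):
    """Return a copy of weights with the smallest-magnitude fraction set to 0.

    Assumption: plain magnitude pruning, shown for illustration only.
    """
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


# Hypothetical layer weights; half of them contribute very little.
weights = [0.9, -0.02, 0.4, 0.01, -0.7, 0.05, -0.3, 0.002, 0.6, -0.08]
pruned = prune_by_magnitude(weights, sparsity=0.5)
print(pruned)

# Ratios behind the figures quoted in the text.
complexity_reduction = 117 / 11.6   # Gops before / Gops after
speedup = 103 / 18                  # FPS after / FPS before
map_drop = 61.55 - 60.4             # mAP points lost
print(f"{complexity_reduction:.1f}X less complex, {speedup:.1f}X faster, "
      f"{map_drop:.2f} mAP points lower")
```

In practice, pruning is usually followed by fine-tuning to recover accuracy, which is consistent with the iterative process described above.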

Real-world application example

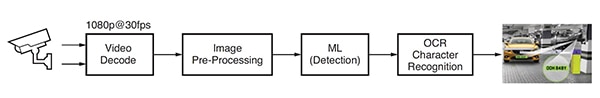

A machine learning application for automobile license plate detection and recognition, also called auto number plate recognition (ANPR), was developed based on vision analytics software from Uncanny Vision. ANPR is used in automated toll systems, highway monitoring, secure gate and parking access, and other applications. This ANPR application includes an AI-based pipeline that decodes the video and preprocesses the image, followed by ML detection and OCR character recognition (Figure 4).

Figure 4: Typical image processing flow for an AI-based ANPR application. (Image source: AMD Xilinx)

Implementing ANPR requires one or more H.264 or H.265 encoded real-time streaming protocol (RTSP) feeds that are decoded (uncompressed). The decoded video frames are scaled, cropped, color space converted, and normalized (pre-processed), then sent to the ML detection algorithm. High-performance ANPR implementations require a multi-stage AI pipeline. The first stage detects and localizes the vehicle in the image, creating the region of interest (ROI). At the same time, other algorithms optimize the image quality for subsequent use by the OCR character recognition algorithm and track the vehicle's motion across multiple frames. The vehicle ROI is further cropped to generate the number plate ROI, which is processed by the OCR algorithm to determine the characters in the number plate. Compared with other commercial SOMs based on GPUs or CPUs, Uncanny Vision’s ANPR application ran 2-3X faster on the Kria KV260 SOM, costing less than $100 per RTSP feed.
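The staged structure described above (pre-process, vehicle ROI, plate ROI, OCR) can be sketched in a few lines of Python. This is a structural outline only: the detector and OCR functions are stand-in stubs that return fixed values, the frame is synthetic grayscale data, and every name here is hypothetical rather than part of Uncanny Vision's software or any AMD Xilinx API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ROI:
    x: int
    y: int
    width: int
    height: int


def normalize(frame):
    """Scale 8-bit pixel values to the range 0.0 to 1.0."""
    return [[pixel / 255.0 for pixel in row] for row in frame]


def crop(frame, roi):
    """Return the sub-image covered by roi."""
    rows = frame[roi.y:roi.y + roi.height]
    return [row[roi.x:roi.x + roi.width] for row in rows]


def to_frame_coords(outer, inner):
    """Translate an ROI given relative to `outer` into full-frame coordinates."""
    return ROI(outer.x + inner.x, outer.y + inner.y, inner.width, inner.height)


def run_pipeline(frame, detect_vehicle, detect_plate, read_plate):
    """Pre-process, find the vehicle, find the plate inside it, then run OCR."""
    normalized = normalize(frame)
    vehicle_roi = detect_vehicle(normalized)
    vehicle_crop = crop(normalized, vehicle_roi)
    plate_roi = detect_plate(vehicle_crop)      # relative to the vehicle crop
    plate_crop = crop(vehicle_crop, plate_roi)
    text = read_plate(plate_crop)
    return text, to_frame_coords(vehicle_roi, plate_roi), plate_crop


# Stubs standing in for the ML models; a real system would run inference here.
def stub_vehicle_detector(frame):
    return ROI(x=4, y=2, width=8, height=6)


def stub_plate_detector(vehicle_crop):
    return ROI(x=2, y=3, width=4, height=2)


def stub_ocr(plate_crop):
    return "STUB"


# Synthetic 16x12 grayscale frame in place of a decoded RTSP video frame.
frame = [[(y * 16 + x) % 256 for x in range(16)] for y in range(12)]
text, plate_in_frame, plate_crop = run_pipeline(
    frame, stub_vehicle_detector, stub_plate_detector, stub_ocr
)
print(text, plate_in_frame)
```

Passing the stages in as functions keeps the pipeline order fixed while allowing each model to be swapped independently, which mirrors how a custom AI model might be dropped into an existing vision pipeline.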

Smart vision development environment

Designers of smart vision applications like traffic and city cameras, retail analytics, security, industrial automation, and robotics can turn to the Kria K26 SOM AI Starter development environment. This environment is built using the Zynq® UltraScale+™ MPSoC architecture and has a growing library of curated application software packages (Figure 5). The AI Starter SOM includes a quad-core Arm Cortex-A53 processor, over 250 thousand logic cells, and an H.264/265 video codec. The SOM also has 4 GB of DDR4 memory, 245 IOs, and 1.4 tera-ops of AI compute to support the creation of high-performance vision AI applications offering more than 3X higher performance at lower latency and power compared with other hardware approaches. The pre-built applications enable initial designs to run in less than an hour.

Figure 5: The Kria KV260 vision AI starter kit is a comprehensive development environment for machine vision applications. (Image source: AMD Xilinx)

To help jump-start the development process using the Kria K26 SOM, AMD Xilinx offers the KV260 vision AI starter kit that includes a power adapter, Ethernet cable, microSD card, USB cable, HDMI cable, and a camera module (Figure 6). If the entire starter kit is not required, developers can simply purchase the optional power adapter to start using the Kria K26 SOM.

Figure 6: The KV260 vision AI starter kit includes: (top row, left to right) power supply, Ethernet cable, microSD card, and (bottom row, left to right) USB cable, HDMI cable, camera module. (Image source: AMD Xilinx)

Another factor that speeds development is the comprehensive array of features, including abundant 1.8 V, 3.3 V single-ended, and differential I/Os with four 6 Gb/s transceivers and four 12.5 Gb/s transceivers. These features enable the development of applications with higher numbers of image sensors per SOM and many variations of sensor interfaces such as MIPI, LVDS, SLVS, and SLVS-EC, which are not always supported by application-specific standard products (ASSPs) or GPUs. Developers can also implement DisplayPort, HDMI, PCIe, USB2.0/3.0, and user-defined standards with the embedded programmable logic.

Finally, the development of AI applications has been simplified and made more accessible by coupling the extensive hardware capabilities and software environment of the K26 SOM with production-ready vision applications. These vision applications can be implemented with no FPGA hardware design required and enable software developers to quickly integrate custom AI models and application code and even modify the vision pipeline. The Vitis unified software development platform and libraries from Xilinx support common design environments, such as the TensorFlow, PyTorch, and Caffe frameworks, as well as multiple programming languages including C, C++, OpenCL™, and Python. There is also an embedded app store for edge applications using Kria SOMs from Xilinx and its ecosystem partners. Xilinx offerings are free and open source and include smart camera tracking and face detection, natural language processing with smart vision, and more.

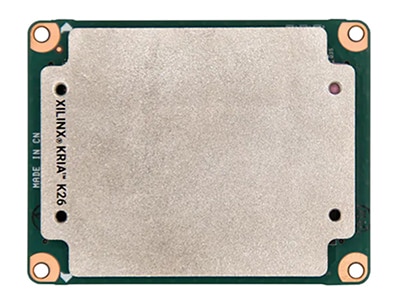

Production-optimized Kria K26 SOMs

Once the development process has been completed, production-ready versions of the K26 SOM are available to speed the transition into manufacturing. They are designed to be plugged into a carrier card with solution-specific peripherals (Figure 7). The basic K26 SOM is a commercial-grade unit with a temperature rating of 0°C to +85°C junction temperature, as measured by the internal temperature sensor. An industrial-grade version of the K26 SOM, rated for operation from -40°C to +100°C, is also available.

The industrial market demands long operational life in harsh environments. The industrial-grade Kria SOM is designed for ten years of operation at 100°C junction and 80% relative humidity, and to withstand up to 40 g of shock and 5 g root mean square (RMS) of vibration. It also comes with a minimum production availability of ten years to support long product lifecycles.

Figure 7: Production-optimized Kria K26 SOMs for industrial and commercial environments are designed to be plugged into a carrier card with solution-specific peripherals. (Image: DigiKey)

Summary

Designers of machine vision applications such as security, traffic and city cameras, retail analytics, automated inspection, process control, and vision-guided robotics can turn to the Kria K26 SOM AI Starter to speed time to market, control costs, and reduce development risks. This SOM-based development platform is an integrated hardware and software environment, enabling developers to focus on application customization and save up to nine months of development time. The same SOM architecture is available in production-optimized configurations for commercial and industrial environments, further speeding time to market. The industrial version has a minimum production availability of ten years to support long product lifecycles.
