Comparing Low-Power Wireless Technologies (Part 1)

Contributed By DigiKey's North American Editors

2017-10-26

Editor’s Note: Part 1 of this three-part series details the major low-power wireless options available to designers. Part 2 will consider the design fundamentals of each technology such as chip availability, protocol stacks, application software, design tools, antenna requirements, and power consumption/battery life. Part 3 will consider current and future developments designed to meet the challenges of the IoT for each technology. It will also include an introduction to some newer interfaces and protocols, such as Wi-Fi HaLow and Thread.

Recent wireless developments have focused largely on Internet of Things (IoT) connectivity, where sensors gather and communicate signals and data. End product examples are varied, ranging from smartphones, health and fitness wearables (Figure 1), and home automation, to smart meters and industrial control. All have design constraints that include ultra-low power consumption, low cost, and small physical size.

This feature will discuss and contrast the main low-power wireless options. It will discuss the fundamentals of each technology and its key operating attributes, such as frequency band(s), network topology support, throughput, range, and coexistence. Sample solutions will be included.

Figure 1: Wearables are a key market sector for low-power wireless technologies. (Image source: Nordic Semiconductor)

Low-power trade-offs

Engineers now have many choices when it comes to low-power wireless technologies, including RF-based technologies such as Bluetooth low energy, ANT, ZigBee, RF4CE, NFC, Nike+, and Wi-Fi, plus infrared options championed by the Infrared Data Association (IrDA).

But this wide choice makes the selection process more difficult. Each technology makes trade-offs between power consumption, bandwidth, and range. Some are based on open standards while others remain proprietary. To complicate things even further, new wireless interfaces and protocols continue to emerge to address the needs of the IoT. One of these is Bluetooth low energy.

An introduction to Bluetooth low energy

Bluetooth low energy started life as a project in the Nokia Research Centre under the name Wibree. In 2007, the technology was adopted by the Bluetooth Special Interest Group (SIG), which released it as an ultra-low power consumption form of Bluetooth in version 4.0 (v4.0) of the specification in 2010.

The technology extended the Bluetooth ecosystem to applications with small battery capacities, such as wearables. Featuring microamp average current in target applications, it complements ‘classic’ Bluetooth popular in smartphones, audio headsets, and wireless desktops.

The technology operates in the 2.4 GHz Industrial, Scientific, and Medical (ISM) band and is suited to the transmission of data from compact wireless sensors or other peripherals where fully asynchronous communication can be used. These devices send low volumes of data (i.e. a few bytes) infrequently. Their duty cycle tends to range from a few times per second to once every minute, or longer.
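A rough calculation shows why such a low duty cycle leads to microamp-level average current. The sketch below averages the active and sleep currents over one reporting interval. All the figures used (5 mA active current, 3 ms active time, 1 µA sleep current, 220 mAh coin cell) are illustrative assumptions, not values taken from any datasheet.

```python
def average_current_ua(active_ma, active_ms, sleep_ua, interval_ms):
    """Time-weighted average current in microamps over one reporting interval."""
    active_fraction = active_ms / interval_ms
    return active_ma * 1000.0 * active_fraction + sleep_ua * (1.0 - active_fraction)


def battery_life_days(capacity_mah, avg_current_ua):
    """Idealized battery life; ignores self-discharge and peak-current limits."""
    hours = capacity_mah * 1000.0 / avg_current_ua
    return hours / 24.0


# Hypothetical sensor: radio active for 3 ms once per second
avg = average_current_ua(active_ma=5.0, active_ms=3.0, sleep_ua=1.0, interval_ms=1000.0)
life = battery_life_days(capacity_mah=220.0, avg_current_ua=avg)
print(f"average current: {avg:.1f} uA, battery life: {life:.0f} days")
```

With these assumed numbers the average comes out at roughly 16 µA, even though the radio draws milliamps while active. Lengthening the reporting interval reduces the average further, until the sleep current dominates.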

From Bluetooth v4.0, the Bluetooth Core Specification defines two types of chip: The Bluetooth low energy chip, and the Bluetooth chip with a modified stack and the Basic Rate (BR)/Enhanced Data Rate (EDR) physical layer (PHY) of previous versions, combined with a Low Energy (LE) PHY (“BR/EDR + LE”) such that it’s interoperable with all versions and chip variants of the standard. Bluetooth low energy chips can interoperate with other Bluetooth low energy chips and Bluetooth chips adhering to Bluetooth v4.0 or later.

In many consumer applications, a Bluetooth low energy chip operates in conjunction with a Bluetooth chip, but thanks to enhancements to the standard introduced in versions 4.1, 4.2 and 5, Bluetooth low energy chips are increasingly being employed as standalone devices.

The recent introduction of the Bluetooth 5 specification has increased Bluetooth low energy’s raw data rate from 1 to 2 Mbit/s and improved range by up to 4x compared with the previous version. Note that maximum throughput and range can’t be achieved simultaneously; it’s a classic trade-off. The Bluetooth SIG has also recently adopted Bluetooth mesh 1.0, which allows the technology to be configured in a mesh network topology, which will be described in more detail in Part 3 of this series.

For a comprehensive overview of Bluetooth low energy, see, “Bluetooth 4.1, 4.2 and 5 Compatible Bluetooth Low Energy SoCs and Tools Meet IoT Challenges (Part 1)”.

What is ANT?

ANT is comparable to Bluetooth low energy in that it is an ultra-low-power wireless protocol and operates in the 2.4 GHz ISM band. Like Bluetooth low energy, it is designed for coin cell powered sensors exhibiting months or years of battery life. The protocol was released in 2004 by Dynastream Innovations, a Canadian company now part of Garmin. Dynastream Innovations doesn’t manufacture silicon; instead, designers can get its firmware on 2.4 GHz transceivers from companies such as Nordic Semiconductor, with the nRF51422 SoC, and Texas Instruments (TI). It does, however, also offer a range of fully tested and verified RF modules running the ANT protocol that require little design integration effort and have already passed regulatory certification.

Although ANT is a proprietary RF protocol, interoperability is encouraged through ANT+ Managed Network. ANT+ facilitates interoperability between ANT+ Alliance member devices and the collection, automatic transfer, and tracking of sensor data. Interoperability is ensured by device profiles; any ANT+ device that implements a specific device profile is interoperable with any other ANT+ device implementing the same device profile. New products must pass an ANT+ Certification Test for interoperability. The Certification is managed by the ANT+ Alliance.

ANT and ANT+ were originally targeted for the sports and fitness segment, but more recently the product has been used for applications in the home and industrial automation sectors. The protocol is subject to continuous development, with the most recent announcement being the release of ANT BLAZE, an enterprise targeted mesh technology for high node count IoT applications. (See Part 3.)

What about ZigBee?

ZigBee is a low-power wireless specification that uses a PHY and media access control (MAC) based on IEEE 802.15.4. On top of that it runs a protocol that is controlled by the ZigBee Alliance. The technology was designed for mesh networking (giving it a head start on some competing technologies) in the industrial and home automation sectors.

ZigBee operates in the 2.4 GHz ISM band as well as 784 MHz in China, 868 MHz in Europe, and 915 MHz in the USA and Australia. Data rates vary from 20 Kbits/s (868 MHz band) to 250 Kbits/s (2.4 GHz band). ZigBee uses 16 x 2 MHz channels separated by 5 MHz, thus is somewhat spectrum inefficient because of the unused allocation.

ZigBee PRO, released in 2007, provides additional features required for robust deployments including enhanced security. The ZigBee Alliance has just announced the availability of ZigBee PRO 2017, a mesh network capable of operating in the 2.4 GHz and 800 - 900 MHz ISM frequency bands simultaneously. (See Part 3 of this series for more.)

Does RF4CE tick all the boxes?

Radio Frequency for Consumer Electronics (RF4CE) is based on ZigBee, but with a protocol customized for the requirements of RF remote control. RF4CE was standardized in 2009 by four consumer electronics companies: Sony, Philips, Panasonic, and Samsung. The technology is supported by several silicon vendors including Microchip, Silicon Labs, and Texas Instruments. RF4CE's intended use is as a device remote control system, for example for television set-top boxes. The technology uses RF to overcome the interoperability, line-of-sight, and limited feature drawbacks of infrared (IR) remote control.

Recently, RF4CE has faced tough competition from both Bluetooth low energy and ZigBee for remote control applications.

How does Wi-Fi compare?

Wi-Fi, based on IEEE 802.11, is a very efficient wireless technology; however, it is optimized for large data transfers using high-speed throughput, rather than low power consumption. As such, Wi-Fi is unsuitable for low power (coin cell) operation. In recent years, changes have been made to reduce power consumption, including amendments such as IEEE Standard 802.11v (specifying configuration of client devices while connected to wireless networks).

IEEE 802.11ah (Wi-Fi “HaLow”), published in 2017, operates in the 900 MHz ISM band, and benefits from lower energy consumption and extended range compared to versions of Wi-Fi operating in the 2.4 and 5 GHz bands. (See Part 3.)

Is Nike+ an option?

Nike+ is a proprietary wireless technology developed by sportswear manufacturer Nike and targeting the fitness market. It is primarily designed to link a Nike “foot pod” integrating a 2.4 GHz radio chip with Apple mobile devices which analyze and present the collected data. While still popular among a dedicated group of fitness fans, the hardware is in decline because the new generation of smartphones incorporate the same technology. Nike has dropped its wireless fitness band product to focus on smartphone software apps instead.

The proprietary wireless technology upon which the Nike+ system was based is still in use for products such as wireless mice and keyboards. If interoperability is not a requirement, similar technology, such as Nordic Semiconductor’s nRF24LE1, does offer performance comparable to technologies such as Bluetooth low energy, for example, without the requirement to meet standards compliance.

Doesn't IrDA already solve short-range communication problems?

The Infrared Data Association (IrDA) comprises around 50 companies and has released several IR communication protocols under the IrDA name. IrDA is not an RF-based technology; rather, it employs modulated pulses of IR light to transfer information. The key advantages of the technology are built-in security as it’s not RF, very low bit error rate (BER) (improving efficiency), no requirement for regulatory compliance certification, and low cost. The technology is also available in a high-speed version, offering 1 Gb/s transfer rates.

The disadvantages of IR technology are its limited range (especially for the high-speed version), its ‘line of sight’ requirement, and the lack of bi-directional communication in standard implementations. IrDA is also not particularly power efficient (in terms of power per bit) when compared to radio technologies. For basic remote control applications where cost is a key design parameter, IrDA retains market share, but when enhanced control features are demanded, such as those required for smart TVs, Bluetooth low energy and RF4CE are more often specified by designers.

Where does NFC fit?

Near field communication (NFC) operates in the 13.56 MHz ISM band. At this low frequency, the transmit and receive loop antennas function mainly as the primary and secondary windings of a transformer, respectively. Data transfer is via the magnetic field rather than the accompanying electric field because the latter is less dominant at short distances. NFC transfers data at rates up to 424 Kbits/s. As the name suggests, it is designed for very short range communication operating up to a maximum range of 10 cm. This limitation prevents direct competition with Bluetooth low energy, ZigBee, Wi-Fi, and similar technologies. Manufacturers such as NXP USA provide silicon such as the CLRC66303 NFC Transceiver.

A key advantage is that ‘passive’ NFC devices (for example, payment cards) require no power, only becoming active when within close range of a powered NFC device. NFC has gained widespread acceptance for contactless payment technologies and as a method of pairing other wireless technologies such as Bluetooth low energy devices without the danger of “man-in-the-middle” attacks on security. NFC is likely to gain good market share as a technology for niche applications complementing the other wireless technologies discussed here.

Network topologies

Low-power wireless technologies support up to five main network topologies:

Broadcast: A message is sent from a transmitter to any receiver within range. The channel is unidirectional with no acknowledgement that the message has been received.

Peer-to-peer: Two transceivers are linked on a bi-directional channel whereby messages can be acknowledged and data can be transferred both ways.

Star: A central transceiver communicates across bi-directional channels with several peripheral transceivers. The peripheral transceivers can’t directly communicate with each other.

Scanning: A central scanning device remains in receive mode, waiting to pick up a signal from any transmitting device within range. Communication is in one direction.

Mesh: A message can be relayed from one point in a network to any other by hopping across bi-directional channels connecting multiple nodes (typically using the services of nodes with additional functionality such as hubs and relays).
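The difference between star and mesh relaying can be sketched with a small graph search. The example below models each network as a dictionary of bi-directional links and finds the fewest-hop route with a breadth-first search. The node names and link layouts are hypothetical, and real mesh stacks use their own routing or flooding schemes rather than this simple search.

```python
from collections import deque


def relay_path(links, source, destination):
    """Breadth-first search for the fewest-hop route over bi-directional links."""
    previous = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == destination:
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbour in links.get(node, ()):
            if neighbour not in previous:
                previous[neighbour] = node
                queue.append(neighbour)
    return None  # destination unreachable


# Star: peripherals link only to the central hub
star = {"hub": ["p1", "p2", "p3"], "p1": ["hub"], "p2": ["hub"], "p3": ["hub"]}

# Mesh: hypothetical layout with two alternative relays (c and d) between b and e
mesh = {"a": ["b"], "b": ["a", "c", "d"], "c": ["b", "e"],
        "d": ["b", "e"], "e": ["c", "d"]}

print(relay_path(star, "p1", "p3"))  # traffic between peripherals passes through the hub
print(relay_path(mesh, "a", "e"))    # message hops across intermediate nodes
```

In the star layout every route between peripherals depends on the hub, whereas the mesh layout still offers a route from a to e if either relay c or relay d drops out.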

Figures 2a through 2e illustrate the network topologies, and Table 1 summarizes which topologies each of the wireless technologies discussed above supports.

Figure 2: Low-power wireless technologies have evolved to support increasingly complex network topologies. (Image source: Texas Instruments)


B (Bluetooth low energy), A (ANT), A+ (ANT+), Zi (ZigBee), RF (RF4CE),

Wi (Wi-Fi), Ni (Nike+), Ir (IrDA), NF (NFC)

- Continuous receive mode must be activated for nodes listening for broadcast signals.

- All network traffic stops and power consumption is high.

Table 1: Low-power wireless technology network topology support. (Table source: DigiKey)

Low-power wireless technology performance

Range

The range of a wireless technology is often thought of as being proportional to the power output of the transmitter combined with the RF sensitivity of a receiver measured in decibels (the “link budget”). Higher power transmission and greater sensitivity increase range because of the effective improvement in the signal to noise ratio (SNR). SNR is a measure of the ability of a receiver to correctly extract and decode a signal from the ambient noise. Below a threshold SNR, the BER exceeds the radio’s specification and communication fails. A Bluetooth low energy receiver, for example, is designed to tolerate a maximum BER of only around 0.1%.
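The link budget idea can be put into numbers with the free-space path loss formula. The sketch below is a simplified model that assumes isotropic antennas, a clear line of sight, and no fade margin; the -96 dBm receiver sensitivity and 2.44 GHz carrier are illustrative assumptions. It gives an optimistic upper bound rather than a practical range.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def link_budget_db(tx_power_dbm, rx_sensitivity_dbm):
    """Maximum path loss the link can tolerate, in dB."""
    return tx_power_dbm - rx_sensitivity_dbm


def free_space_range_m(budget_db, frequency_hz):
    """Distance at which free-space path loss equals the link budget."""
    wavelength = SPEED_OF_LIGHT / frequency_hz
    return wavelength / (4.0 * math.pi) * 10 ** (budget_db / 20.0)


# Assumed example: +4 dBm transmitter, -96 dBm receiver sensitivity, 2.44 GHz carrier
budget = link_budget_db(tx_power_dbm=4.0, rx_sensitivity_dbm=-96.0)
ideal_range = free_space_range_m(budget, 2.44e9)
print(f"link budget: {budget:.0f} dB, free-space range: {ideal_range:.0f} m")
```

In this model every additional 6 dB of link budget roughly doubles the free-space range. Ranges achieved in practice are much shorter, because obstructions, antenna losses, and the margin needed for reliable operation all eat into the budget.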

Maximum power output in the license free 2.4 GHz ISM band is limited by regulatory bodies. Generally, the rules are complex, but they essentially dictate that peak transmit power, measured at the antenna input of a frequency-hopping system with less than 75 but at least 15 hopping frequencies, must be limited to a peak of +21 dBm, with a reduction in output if the isotropic antenna gain is larger than 6 dBi. This allows a maximum equivalent isotropic radiated power (EIRP) of +27 dBm.
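The arithmetic behind the +27 dBm figure can be sketched as follows. The helper names are illustrative, and the back-off rule (1 dB less transmit power for every dB of antenna gain above 6 dBi) is an assumption made for this example; the applicable regulations should be consulted for the exact requirement.

```python
def eirp_dbm(conducted_power_dbm, antenna_gain_dbi):
    """Equivalent isotropic radiated power: transmit power plus antenna gain."""
    return conducted_power_dbm + antenna_gain_dbi


def dbm_to_mw(dbm):
    """Convert a power level in dBm to milliwatts."""
    return 10 ** (dbm / 10.0)


def max_conducted_power_dbm(antenna_gain_dbi, limit_dbm=21.0, free_gain_dbi=6.0):
    """Assumed rule: back off 1 dB for each dB of antenna gain above free_gain_dbi."""
    excess_gain = max(0.0, antenna_gain_dbi - free_gain_dbi)
    return limit_dbm - excess_gain


for gain in (0.0, 6.0, 9.0):
    power = max_conducted_power_dbm(gain)
    eirp = eirp_dbm(power, gain)
    print(f"gain {gain:>4.1f} dBi: max power {power:.1f} dBm, "
          f"EIRP {eirp:.1f} dBm ({dbm_to_mw(eirp):.0f} mW)")
```

Under this assumption the EIRP never exceeds +27 dBm (about 500 mW), whatever the antenna gain.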

In addition to this regulation, low-power wireless technologies include specification restrictions on transmission power to maximize battery life. Much of the power is conserved by limiting the time the radio is in a high-power transmit or receive state, but RF chip makers also conserve energy by limiting their maximum Bluetooth low energy transmit power to typically +4 dBm, and occasionally +8 dBm, well below the +21 dBm limit set by the regulations.

However, transmit power and sensitivity are not the only factors limiting the range of wireless devices. The operating environment (for example, the presence of ceilings and walls), frequency of the RF carrier, design layout, mechanics, and coding schemes all come into play. Range is usually stated for an ‘ideal’ environment, but devices are often used in scenarios where it is severely compromised. For example, 2.4 GHz signals are heavily attenuated by the human body, so a wrist-worn wearable can struggle to transmit to a smartphone carried in a back pocket, even though they may be just a meter or so apart.

This list shows typical ranges that can be expected from ultra-low powered technologies in an unobstructed environment with no interference from other RF or optical sources:

- NFC: 10 cm

- High-speed IrDA: 10 cm

- Nike+: 10 m

- ANT(+): 30 m

- 5 GHz Wi-Fi: 50 m

- ZigBee/RF4CE: 100 m

- Bluetooth low energy: 100 m

- 2.4 GHz Wi-Fi: 150 m

- Bluetooth low energy using Bluetooth 5 extended range capability: 200 to 400 m (depending on forward error correction coding scheme)

Throughput

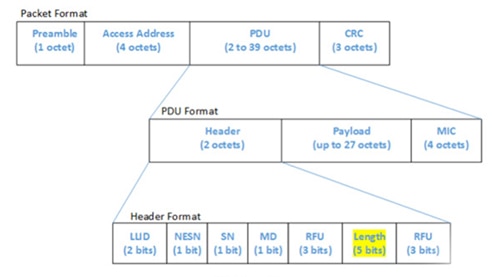

Transmissions by low-power wireless technologies comprise two parts: the bits implementing the protocol (for example, packet ID and length, channel, and checksum, collectively known as the “overhead”) and the information that’s being communicated (known as the “payload”). The ratio payload/(overhead + payload) determines the protocol efficiency (Figure 3).

Figure 3: Low-power wireless technology packets (Bluetooth low energy/Bluetooth 4.1 shown here) comprise overhead and payload. Protocol efficiency is determined by the amount of useful data (payload) carried in each packet. (Image source: Bluetooth SIG)

The “raw” data rate (overhead plus payload) is a measure of the number of bits transferred per second and is often the figure quoted in marketing material. The payload data rate will always be lower. (Part 2 of this series will take a closer look at each protocol’s efficiency and its subsequent impact on battery life.)

Low-power wireless technologies generally require the periodic transfer of small amounts of sensor information between sensor nodes and a central device, while minimizing power consumption, so bandwidths are typically modest.

The following list compares raw data and payload throughput for the technologies discussed in this article. (Note that these are theoretical maximums and actual throughput is dependent on configuration and operating conditions):

- Nike+: 2 Mbits/s, 272 bits/s (throughput is limited by design to one packet/s)

- ANT+: 20 Kbits/s (in burst mode – see below), 10 Kbits/s

- NFC: 424 Kbits/s, 106 Kbits/s

- ZigBee: 250 Kbits/s (at 2.4 GHz), 200 Kbits/s

- RF4CE: same as ZigBee

- Bluetooth low energy: 1 Mbit/s, 305 Kbits/s

- High-speed IrDA: 1 Gbit/s, 500 Kbits/s

- Bluetooth low energy with Bluetooth 5 high throughput: 2 Mbits/s, 1.4 Mbits/s

- Wi-Fi: 11 Mbits/s (lowest power 802.11b mode), 6 Mbits/s
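The gap between raw and payload figures is easier to compare as a ratio. The sketch below divides each payload throughput in the list above by its raw data rate. This is a rough indicator only: the ratio also reflects idle time between packets and deliberate limits (such as the Nike+ one packet per second), not just per-packet overhead.

```python
# (raw bits/s, payload bits/s), taken from the list above
RATES = {
    "Nike+": (2_000_000, 272),
    "ANT+ (burst mode)": (20_000, 10_000),
    "NFC": (424_000, 106_000),
    "ZigBee (2.4 GHz)": (250_000, 200_000),
    "Bluetooth low energy": (1_000_000, 305_000),
    "High-speed IrDA": (1_000_000_000, 500_000),
    "Bluetooth 5 (2 Mbit/s)": (2_000_000, 1_400_000),
    "Wi-Fi (802.11b)": (11_000_000, 6_000_000),
}


def throughput_efficiency(raw_bps, payload_bps):
    """Fraction of the raw data rate that carries payload."""
    return payload_bps / raw_bps


for name, (raw, payload) in RATES.items():
    print(f"{name:<24} {throughput_efficiency(raw, payload):7.2%}")
```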

Latency

The latency of a wireless system can be defined as the time between a signal being transmitted and received. While typically only a matter of milliseconds, it is an important consideration for wireless applications. For example, low latency is not so important for an application automatically polling a sensor for data perhaps once per second, but could become important for a consumer application like a remote control where a user expects imperceptible delay between pressing a button and the subsequent action.

The following list compares latencies for the technologies discussed in this article. (Note once again that these are dependent on configuration and operating conditions.)

- ANT: Negligible

- Wi-Fi: 1.5 milliseconds (ms)

- Bluetooth low energy: 2.5 ms

- ZigBee: 20 ms

- IrDA: 25 ms

- NFC: polled typically every second (but can be specified by the product manufacturer)

- Nike+: 1 second

Note that the low latencies cited for ANT and Wi-Fi require the receiving device to listen continuously, which quickly consumes battery power. For low-power sensor applications, battery consumption can be improved by increasing the ANT messaging period, at the cost of increased latency.

Robustness and co-existence

Reliable packet transfer has a direct influence on battery life and the user experience. Generally, if a data packet is undeliverable due to suboptimal transmission environments, accidental interference from nearby radios, or deliberate frequency jamming, a transmitter will keep trying until the packet is successfully delivered. This comes at the expense of battery life. Moreover, if a wireless system is restricted to a single transmission channel, its reliability will inevitably deteriorate in congested environments.

The ability of a radio to operate in the presence of other radios is described as coexistence. This is particularly interesting when the radios are operating in the same device, such as Bluetooth low energy and Wi-Fi in a smartphone, with little separation. A standard approach to achieve coexistence between Bluetooth and Wi-Fi is to use an out-of-band signaling scheme, consisting of a wired connection between each IC, coordinating when each is free to transmit or receive. In this article, passive coexistence refers to an interference avoidance system and active coexistence refers to chip-to-chip signaling.

A proven method to assist in passive coexistence is channel hopping. Bluetooth low energy uses frequency-hopping spread spectrum (FHSS), hopping in a pseudo-random pattern between its 37 data channels, to avoid interference. Bluetooth low energy’s so-called adaptive frequency hopping (AFH) enables each node to map frequently congested channels, which are then avoided in future transactions. The latest version of the specification (Bluetooth 5) has introduced an improved channel selection algorithm (CSA #2) that makes the next-hop channel sequence more random, which improves interference immunity.
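The combination of hopping and a channel map can be sketched in a few lines. The example below is loosely modeled on the simpler hop-and-remap scheme that predates CSA #2: the link steps through the 37 data channels by a fixed increment, and any hop that lands on a channel marked as congested is remapped onto the table of channels still in use. It is a simplified illustration, not a substitute for the Bluetooth Core Specification; the hop increment of 7 and the set of blocked channels are assumed values.

```python
DATA_CHANNELS = 37


def next_data_channel(last_unmapped, hop_increment, used_channels):
    """Return (unmapped, channel) for the next connection event.

    used_channels is a sorted list of data channel indices (0-36)
    that have not been marked as congested.
    """
    unmapped = (last_unmapped + hop_increment) % DATA_CHANNELS
    if unmapped in used_channels:
        return unmapped, unmapped
    # Hop landed on a blocked channel: remap it onto the table of used channels
    remap_index = unmapped % len(used_channels)
    return unmapped, used_channels[remap_index]


# Assumed scenario: channels 0-8 are congested, so the channel map excludes them
used = list(range(9, DATA_CHANNELS))
unmapped, sequence = 0, []
for _ in range(10):
    unmapped, channel = next_data_channel(unmapped, hop_increment=7, used_channels=used)
    sequence.append(channel)
print(sequence)
```

Whatever the hop position, the selected channel always comes from the list of channels still in use, so the congested part of the band is avoided.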

ANT supports the use of multiple RF operating frequencies, each 1 MHz wide. Once selected, all communication is conducted on the single frequency, and channel hopping only occurs if significant degradation is experienced on the selected frequency.

To mitigate congestion, ANT uses a time division multiple access (TDMA) adaptive isochronous scheme to subdivide each 1 MHz frequency band into timeslots of around 7 ms. Paired devices on the channel communicate during these timeslots, which repeat according to the ANT messaging period (for example, every 250 ms or 4 Hz). In practical use, tens or even hundreds of nodes can be accommodated in a single 1 MHz frequency band without clashing. When data integrity is paramount, ANT can use a ‘burst’ messaging technique; this is a multi-message transmission technique that uses the full available bandwidth, and runs to full data transmit completion.
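A quick calculation shows how the messaging period sets the number of timeslots available on one frequency. The sketch below uses the approximate 7 ms timeslot quoted above and treats every slot as usable, which is an idealized upper bound; it makes no allowance for guard time or clock drift between nodes.

```python
def message_period_ms(rate_hz):
    """Messaging period in milliseconds for a given message rate."""
    return 1000.0 / rate_hz


def timeslots_per_period(period_ms, slot_ms=7.0):
    """Whole timeslots that fit into one messaging period (idealized)."""
    return int(period_ms // slot_ms)


for rate_hz in (4.0, 1.0, 0.5):
    period = message_period_ms(rate_hz)
    slots = timeslots_per_period(period)
    print(f"{rate_hz} Hz -> {period:.0f} ms period, up to {slots} timeslots")
```

At 4 Hz there is room for a few tens of timeslots, and slowing the message rate to 0.5 Hz raises that to several hundred, in line with the "tens or even hundreds of nodes" noted above. The price is higher latency, since each node waits longer between transmissions.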

Some of the available ANT RF channels, for example 2.450 and 2.457 GHz, are assigned and regulated by the ANT+ Alliance to maintain network integrity and interoperability. The alliance advises avoiding these channels during normal operation.

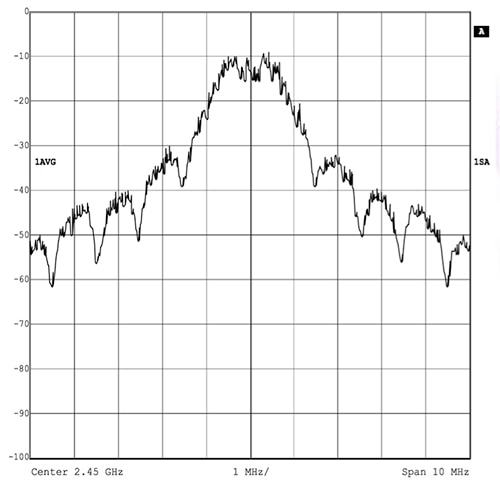

In contrast to Bluetooth low energy’s FHSS technique and ANT’s TDMA scheme, ZigBee (and RF4CE) uses a direct sequence spread spectrum (DSSS) method. During DSSS, the signal is mixed with a pseudo-random code at the transmitter which is then extracted at the receiver. The technique effectively enhances the signal-to-noise ratio by spreading the transmitted signal across a wide band (Figure 4). ZigBee PRO implements an additional technique known as frequency agility, whereby a network node scans for clear spectrum and advises the coordinator so the channel can be used across the network. However, the feature is rarely deployed in practice.

Figure 4: ZigBee attempts to mitigate interference from other 2.4 GHz radios by spreading the transmitted signal across the allocated spectrum. (Image source: Texas Instruments)
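The principle of DSSS spreading and despreading can be illustrated with a toy example. The sketch below spreads each data bit across an 8-chip code by XOR, then recovers the bits at the receiver by correlating each block against the same code and taking a majority decision. The chip code is hypothetical and the scheme is deliberately simplified; it is not the chip sequence or symbol mapping defined by IEEE 802.15.4.

```python
CHIP_CODE = [1, 0, 1, 1, 0, 1, 0, 0]  # hypothetical 8-chip spreading code


def spread(bits, code=CHIP_CODE):
    """Transmitter: XOR every data bit with each chip of the code."""
    return [bit ^ chip for bit in bits for chip in code]


def despread(chips, code=CHIP_CODE):
    """Receiver: compare each block with the code and take a majority decision."""
    bits = []
    for start in range(0, len(chips), len(code)):
        block = chips[start:start + len(code)]
        mismatches = sum(c ^ k for c, k in zip(block, code))
        bits.append(1 if mismatches > len(code) / 2 else 0)
    return bits


data = [1, 0, 1, 1]
transmitted = spread(data)

# Simulate interference by flipping one chip in each 8-chip block
received = transmitted.copy()
for position in (2, 9, 17, 30):
    received[position] ^= 1

print(despread(received) == data)
```

Because each bit is carried by several chips, a few corrupted chips do not change the majority decision, which is the sense in which spreading improves tolerance to interference.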

Wi-Fi uses eleven 20 MHz channels in the U.S., thirteen in most of the rest of the world, or fourteen in Japan. Consequently, within the confines of the 83 MHz width of the 2.45 GHz spectrum allocation, there is sufficient space for only three non-overlapping Wi-Fi channels (1, 6 and 11). These are therefore used as the default channels. No automatic channel hopping is incorporated, but users can manually switch to an alternative channel if interference proves a problem in operation.
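The reason only three channels fit can be checked with a short calculation. The sketch below uses the 5 MHz spacing of the 2.4 GHz channel centers (channel 1 at 2412 MHz) and assumes an occupied width of 22 MHz per channel, which is the figure commonly used for 802.11b DSSS transmissions and slightly wider than the nominal 20 MHz quoted above. It then picks channels greedily so that no two selected channels overlap.

```python
def channel_center_mhz(channel):
    """Center frequency of 2.4 GHz Wi-Fi channels 1-13 (5 MHz spacing)."""
    return 2412 + 5 * (channel - 1)


def overlaps(ch_a, ch_b, width_mhz=22):
    """Two channels overlap if their centers are closer than the occupied width."""
    return abs(channel_center_mhz(ch_a) - channel_center_mhz(ch_b)) < width_mhz


def non_overlapping(channels, width_mhz=22):
    """Greedily select channels that do not overlap any channel already chosen."""
    chosen = []
    for ch in channels:
        if all(not overlaps(ch, other, width_mhz) for other in chosen):
            chosen.append(ch)
    return chosen


print(non_overlapping(range(1, 12)))  # U.S. channels 1-11 -> [1, 6, 11]
```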

Within the selected channel, Wi-Fi’s interference avoidance mechanism is complex but essentially combines DSSS with orthogonal frequency division multiplexing (OFDM). OFDM is a form of transmission that uses many close-spaced carriers with low-rate modulation. Because the signals are transmitted orthogonally, the possibility of mutual interference from close spacing is greatly mitigated.

5 GHz Wi-Fi operates across a 725 MHz wide allocation, allowing for the assignment of many more non-overlapping channels. The result is a significantly reduced chance of interference problems, compared with 2.4 GHz Wi-Fi.

Wi-Fi also employs active coexistence technology, and a mechanism to reduce data rates when interference is detected from other radios.

Such is the ubiquity of Wi-Fi that other 2.4 GHz technologies include techniques to avoid clashing with the default Wi-Fi channels (1, 6, and 11). Bluetooth low energy’s three advertising channels, for example, are positioned in the gaps between the default Wi-Fi channels (Figure 5).

Figure 5: Bluetooth low energy’s advertising channels are positioned away from Wi-Fi default channels. Note there are an additional seven channels that are clear of potential Wi-Fi interference. (Image source: Nordic Semiconductor)

Nike+ uses a proprietary frequency agile scheme, flipping channels when interference becomes disruptive. This is rarely required because of the technology’s minimal data transfer rate and duty cycle.

IrDA does not implement any form of coexistence technology. However, as a light-based technology, it is only likely to be affected by very bright background light with a significant IR component. The short range and line-of-sight operation make it unlikely that even simultaneously-operating IR devices will interfere with each other.

NFC implements a form of coexistence whereby the reader selects a specific card’s NFC tag from a wallet containing several NFC cards. Because of the short range of transmissions, interference between other NFC devices and/or other radios is rare. However, it is worth noting that the 13.56 MHz band has harmonics in the frequency modulation (FM) band that are particularly strong at 81.3 and 94.9 MHz. These harmonics can potentially cause clicking noises in a co-located FM receiver. The FM interference effects can be reduced by implementing anti-collision techniques, for example, by “skewing” or clean up.
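The harmonic frequencies quoted above follow directly from integer multiples of the 13.56 MHz carrier. The sketch below lists the harmonics that fall within an assumed FM broadcast span of 76 to 108 MHz, chosen here to cover the different national FM allocations; the exact band limits vary by country.

```python
NFC_CARRIER_MHZ = 13.56


def harmonics_in_band(low_mhz, high_mhz, carrier_mhz=NFC_CARRIER_MHZ):
    """Return (harmonic number, frequency in MHz) pairs that fall inside the band."""
    found = []
    n = 2  # the first harmonic above the carrier itself
    while n * carrier_mhz <= high_mhz:
        frequency = round(n * carrier_mhz, 2)
        if frequency >= low_mhz:
            found.append((n, frequency))
        n += 1
    return found


print(harmonics_in_band(76.0, 108.0))  # [(6, 81.36), (7, 94.92)]
```

The sixth and seventh harmonics land at 81.36 and 94.92 MHz, matching the two frequencies noted in the text.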

Conclusion

There are many popular low-power wireless technologies. Although each is designed for battery operation and relatively modest data transfer, they have different range, throughput, robustness, and coexistence capabilities. These performance variations suit different applications - albeit with a large degree of overlap.

Introduction to Parts 2 and 3: Performance is only one part of the selection process, so Part 2 will look closer at the design fundamentals of each technology such as chip availability, protocol stacks, application software, design tools, antenna requirements, and power consumption.

Part 3 will consider current and future developments designed to meet the challenges of the IoT for each technology, together with an introduction to some newer interfaces and protocols, such as Wi-Fi HaLow and Thread.
